The coming decade will mark a pivotal epoch in the evolution of artificial intelligence.

It will transition the technology from a discrete, often experimental tool into an ambient, utility-like infrastructure.

This infrastructure will be woven into the fabric of the global economy.

This report provides a comprehensive analysis of the technological, economic, and societal vectors that will define this transformation through 2035.

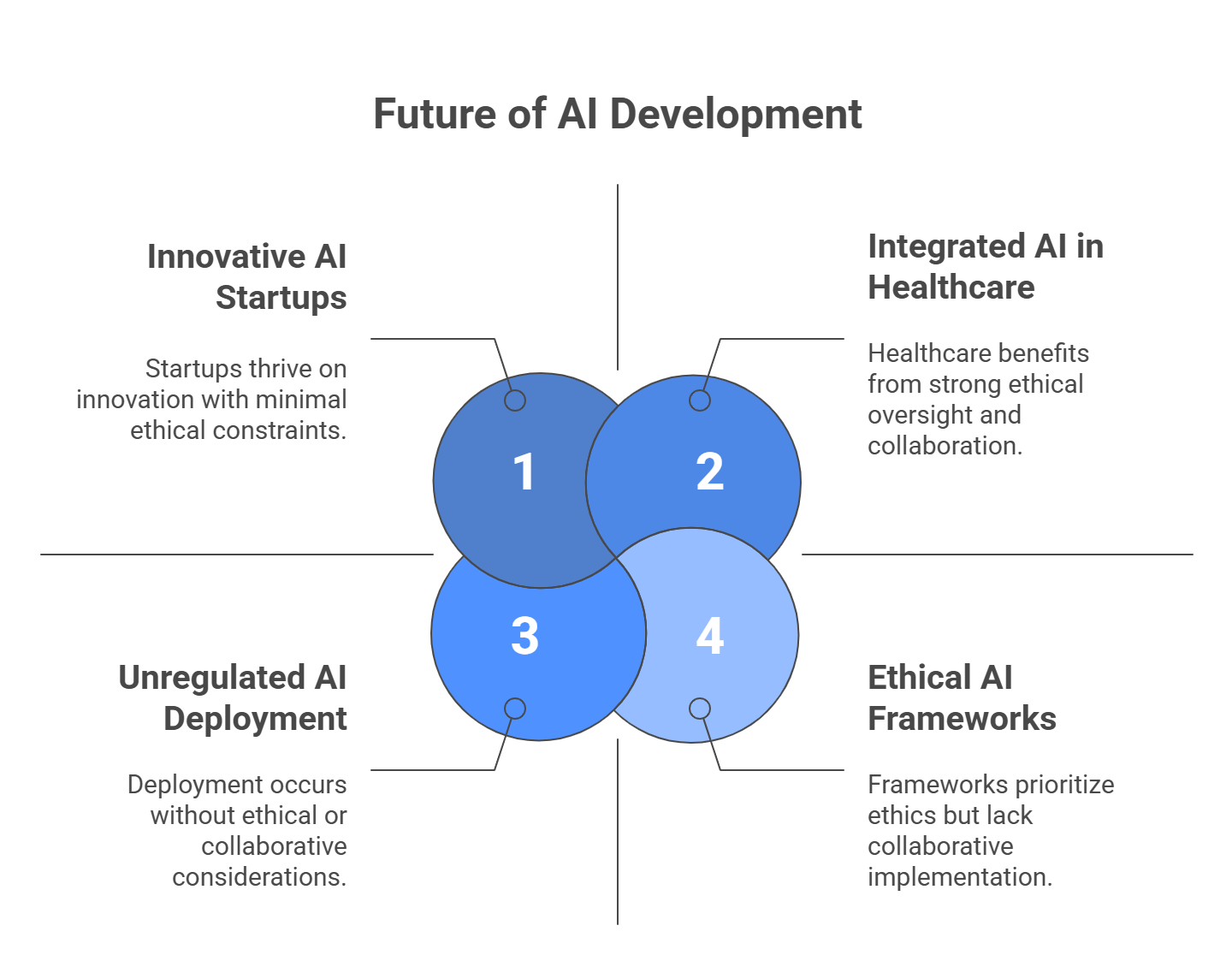

The central thesis is that the convergence of increasingly autonomous, action-oriented AI software, known as agentic AI, with a bifurcated hardware ecosystem and unprecedented capital investment will create a new foundation for productivity, innovation, and geopolitical competition.

By 2035, AI will be an invisible yet integral component of nearly every business process and consumer interaction.

This report synthesizes extensive technical research, market analysis, and expert forecasts to derive five key predictions that will shape the strategic landscape for the next ten years.

First is the ascendance of Agentic AI. The dominant paradigm will shift decisively from generative AI, which excels at content creation, to agentic AI.

Agentic AI focuses on autonomous task execution. These systems will automate complex, multi-step digital and physical workflows.

They will move from pilot projects to practical, widespread deployment as virtual coworkers and operational backbones.

This shift is already evident, with the largest mergers and acquisitions in early 2025 targeting AI agent technology.

Second is Hardware Bifurcation and Specialization. The AI hardware market will evolve into a two-tiered ecosystem.

At the high end, the race to train ever-larger frontier models will continue to drive demand for power-intensive processors like GPUs.

Simultaneously, a larger market will emerge for hyper-efficient, specialized silicon like NPUs and ASICs for low-cost, low-latency inference at the network edge.

Third is an Uneven Economic Transformation. AI is projected to be a powerful engine of economic growth.

It’s estimated to contribute $13 trillion to $15.7 trillion in additional global economic activity by 2030.

This “AI dividend,” however, will be distributed unevenly. It will dramatically widen the performance gap between firms that adapt and those that fail to.

This will lead to significant market consolidation and the rapid decline of incumbent firms unable to navigate the transition.

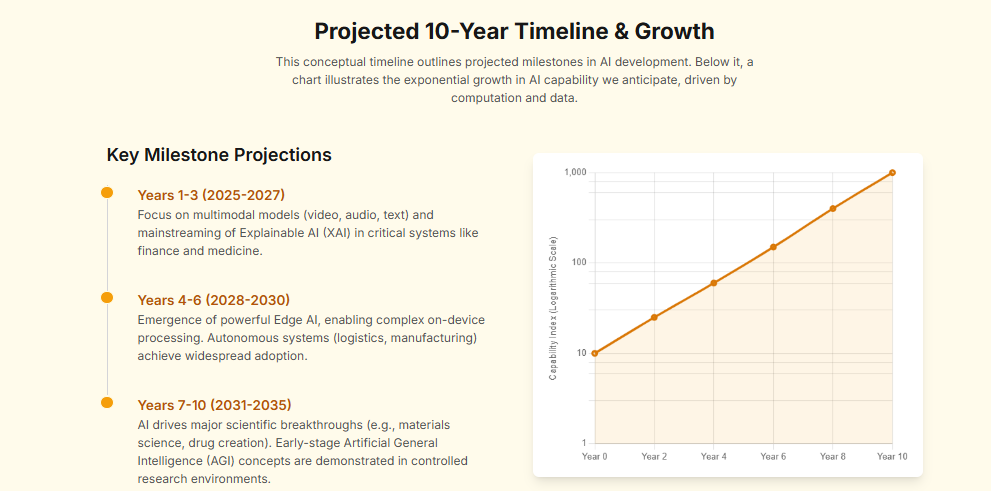

Fourth is the Intensifying AGI Debate and “AGI-like” Systems. The prospect of true Artificial General Intelligence (AGI) remains a subject of intense debate.

Forecasts for its arrival range from as early as 2029 to beyond 2060. Yet the practical distinction between AGI and today’s most capable specialized systems will become increasingly blurred.

By the late 2020s, the field will produce “AGI-like” systems with powerful cross-domain reasoning in specific, high-value contexts.

The emergence of these systems will force society to confront the profound challenges of alignment, control, and governance preemptively.

Fifth is Governance as a Global Imperative. The current patchwork of voluntary frameworks and regional regulations, like the EU AI Act, will prove insufficient.

Driven by concerns over autonomous weaponry, economic stability, and existential risk, the international community will move toward global norms.

This will effectively create a “Geneva Convention” for AI, establishing red lines and verification mechanisms for the most powerful systems.

Navigating this new epoch requires a fundamental shift in strategy.

Businesses must move beyond isolated AI experiments to a complete transformation of core processes.

Investors must adapt their theses to a market defined by massive infrastructure platforms and a vibrant application layer.

Policymakers must act decisively to foster innovation while building the educational, economic, and governance guardrails necessary to manage this transition.

The decisions made in the next five years will determine the leaders and laggards in the AI-native economy of 2035.

The New Engine of Intelligence: Algorithmic and Architectural Frontiers

The engine driving the AI revolution is the relentless pace of innovation in its underlying algorithms and architectures.

Over the next decade, the software of intelligence will evolve along several key axes.

It will move from processing single modalities to understanding a rich, multimodal world; from generating content to taking action.

It will also shift from relying on brute-force scale to incorporating more sophisticated structures for reasoning and planning.

These architectural frontiers define the boundaries of what will be possible by 2035.

The Enduring Reign of Transformers and the Shift to Multimodality

The Transformer architecture, introduced in 2017, remains the undisputed foundation of modern AI.

Its core innovation, the self-attention mechanism, allows a model to weigh the importance of all parts of an input sequence simultaneously.

This design proved exceptionally scalable and efficient on modern parallel hardware like GPUs, a phenomenon sometimes described as “winning the hardware lottery”.
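
To make the mechanism concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is illustrative only: it assumes the queries, keys, and values are supplied directly, omitting the learned projection matrices, multiple heads, and masking that real Transformers use. Each token's output is a weighted average of every value vector, with the weights coming from a softmax over query-key dot products.

```python
import math

def softmax(xs):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors, one vector per token."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score this token's query against every key: n scores for each of n tokens.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of all value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Three tokens with 2-dimensional embeddings; Q = K = V here only for brevity.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(self_attention(x, x, x))
```

Because every query is scored against every key, all positions can be computed independently and in parallel, which is what maps so well onto GPUs.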

This blueprint has spawned three dominant variants: encoder-only models (like BERT), decoder-only models (like the GPT series), and encoder-decoder models (like T5).

While the Transformer’s core remains, its application is undergoing a profound expansion.

The most significant evolution is the transition from unimodal, text-centric models to natively multimodal systems.

These new systems can process and reason across text, images, audio, and video simultaneously.

This is not merely adding “vision” capabilities to a language model; it involves training models from the ground up on vast, interleaved datasets.

For instance, BAAI’s Emu3.5 is trained on internet videos, enabling it to understand and generate complex vision-language sequences.

This trend is a dominant theme at premier AI research conferences, with a heavy focus on vision-language integration and multimodal reasoning.

However, a fundamental scaling challenge remains: the self-attention mechanism’s computational and memory cost grows quadratically with the input sequence length ($O(n^2)$).

This limits the ability of models to process very long contexts, such as entire books, codebases, or hours of video.
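
The quadratic growth is easy to quantify. The sketch below assumes a naive implementation that stores the full n-by-n score matrix at 2 bytes per score (16-bit precision), counted per head and per layer. Optimized kernels such as FlashAttention avoid materializing this matrix, but the amount of computation still grows as n².

```python
def attention_score_bytes(seq_len, bytes_per_score=2, heads=1, layers=1):
    """Bytes needed to materialize the full seq_len x seq_len attention score matrix."""
    return seq_len * seq_len * bytes_per_score * heads * layers

for n in (1_000, 10_000, 100_000, 1_000_000):
    gib = attention_score_bytes(n) / 2**30
    print(f"{n:>9,} tokens -> {gib:,.3f} GiB of scores per head per layer")
```

Each tenfold increase in context length multiplies the score matrix a hundredfold; at one million tokens it reaches 2 × 10^12 bytes (about 1.8 TiB) per head per layer under these assumptions.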

Consequently, a major research thrust is the development of more efficient attention mechanisms. Approaches like linear and sparse attention are actively being explored.

Kimi Linear, for example, uses a hybrid architecture that interleaves linear-attention layers with periodic full-attention layers, reporting up to 6x faster decoding and up to a 75% reduction in key-value (KV) cache memory for million-token contexts.
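
Linear attention avoids the n-by-n score matrix by replacing the softmax with a kernel feature map, so that all keys and values can be summarized once into a fixed-size state. The sketch below shows only the generic, non-causal form with the elu(x) + 1 feature map; it is not Kimi Linear's mechanism, which uses a more elaborate gated design with causal masking. The quadratic reference computes the same kernel pairwise, which makes the equivalence easy to check.

```python
import math

def phi(vec):
    # Positive feature map elu(x) + 1: x + 1 for x > 0, exp(x) otherwise.
    return [x + 1.0 if x > 0 else math.exp(x) for x in vec]

def linear_attention(queries, keys, values):
    """Non-causal linear attention: summarize all keys and values once, O(n) overall."""
    dk, dv = len(keys[0]), len(values[0])
    S = [[0.0] * dv for _ in range(dk)]  # S = sum_j phi(k_j) v_j^T, size dk x dv
    z = [0.0] * dk                       # z = sum_j phi(k_j), used for normalization
    for k, v in zip(keys, values):
        fk = phi(k)
        for a in range(dk):
            z[a] += fk[a]
            for b in range(dv):
                S[a][b] += fk[a] * v[b]
    outputs = []
    for q in queries:
        # Each query touches only the fixed-size summary, never the other tokens.
        fq = phi(q)
        denom = sum(fq[a] * z[a] for a in range(dk))
        outputs.append([sum(fq[a] * S[a][b] for a in range(dk)) / denom
                        for b in range(dv)])
    return outputs

def quadratic_reference(queries, keys, values):
    """The same kernel computed the O(n^2) way, with explicit pairwise weights."""
    outputs = []
    for q in queries:
        fq = phi(q)
        w = [sum(x * y for x, y in zip(fq, phi(k))) for k in keys]
        total = sum(w)
        outputs.append([sum(wi * v[b] for wi, v in zip(w, values)) / total
                        for b in range(len(values[0]))])
    return outputs

q = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
k = [[1.0, 0.0], [-0.5, 2.0], [0.3, -0.7]]
v = [[1.0, 2.0], [0.0, -1.0], [3.0, 0.5]]
print(linear_attention(q, k, v))
print(quadratic_reference(q, k, v))
```

Because S and z have a size that does not depend on the number of tokens, memory stays constant as the context grows; a causal decoder would instead update them token by token, like a recurrent state.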

Another innovative approach, the Byte Latent Transformer (BLT), moves away from processing text as tokens and instead dynamically groups raw bytes into patches, starting new patches where the next byte is hard to predict.

This allows the model to allocate more capacity to more complex parts of the input, demonstrating performance that rivals leading token-based models.
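
Entropy-based patching can be illustrated with a toy model. In BLT the per-byte entropy comes from a small byte-level language model; the sketch below substitutes simple bigram counts (an assumption made for brevity) and starts a new patch whenever the predicted distribution over the next byte is uncertain. Predictable runs therefore end up in long patches, while hard-to-predict regions are split finely. The threshold value is arbitrary.

```python
import math
from collections import Counter, defaultdict

def bigram_model(data: bytes):
    """Count which byte follows each byte (a stand-in for BLT's small byte-level LM)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(data, data[1:]):
        counts[prev][nxt] += 1
    return counts

def next_byte_entropy(counts, prev: int) -> float:
    """Shannon entropy, in bits, of the predicted distribution over the next byte."""
    dist = counts.get(prev)
    if not dist:
        return 8.0  # unseen context: treat as uniform over all 256 byte values
    total = sum(dist.values())
    return -sum((c / total) * math.log2(c / total) for c in dist.values())

def entropy_patches(data: bytes, counts, threshold: float):
    """Begin a new patch wherever the next byte is hard to predict."""
    patches, current = [], bytearray(data[:1])
    for prev, byte in zip(data, data[1:]):
        if next_byte_entropy(counts, prev) > threshold:
            patches.append(bytes(current))
            current = bytearray()
        current.append(byte)
    patches.append(bytes(current))
    return patches

text = b"the cat sat on the mat. the cat sat on the hat."
model = bigram_model(text)
print(entropy_patches(text, model, threshold=1.0))
```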

These advances are crucial for making large-scale AI more economically and computationally feasible.

The Agency Revolution: From Generative AI to Action-Oriented AI

The next great frontier in AI is the shift from passive knowledge work to active task execution.

While generative AI excels at creating content in response to a prompt, “Agentic AI” aims to create autonomous systems.

These systems can understand a high-level goal, formulate a plan, and execute a sequence of actions using available tools to achieve it.

This represents a paradigm shift from AI as a tool for answering questions to AI as a virtual coworker for completing tasks.
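
The goal, plan, act, observe cycle behind such systems can be sketched as a loop. Everything here is hypothetical: the two tools, the toy data, the fixed exchange rate, and the rule-based `choose_action` function, which stands in for the LLM that would normally decide the next step. The `max_steps` bound reflects a common safeguard against runaway agents.

```python
from typing import Callable

# Hypothetical tools; a real agent would wrap external APIs here.
def lookup_order(order_id: int) -> float:
    return {42: 120.0}[order_id]  # toy order database, prices in USD

def usd_to_eur(amount: float) -> float:
    return round(amount * 0.9, 2)  # fixed illustrative rate, not a real quote

TOOLS: dict[str, Callable[..., float]] = {"lookup_order": lookup_order, "usd_to_eur": usd_to_eur}

def choose_action(state: dict):
    """Stand-in for an LLM planner: decide the next tool call from what is known so far."""
    if "usd" not in state:
        return "lookup_order", state["order_id"], "usd"
    if "eur" not in state:
        return "usd_to_eur", state["usd"], "eur"
    return None  # goal reached: the price is known in EUR

def run_agent(order_id: int, max_steps: int = 5) -> dict:
    state = {"order_id": order_id, "trace": []}
    for _ in range(max_steps):  # bounded loop: a confused planner cannot run forever
        action = choose_action(state)
        if action is None:
            break
        tool, arg, key = action
        state[key] = TOOLS[tool](arg)  # act, then store the observation
        state["trace"].append(tool)
    return state

print(run_agent(42))
```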

This evolution is enabled by new architectural and reasoning paradigms.

For example, the “AsyncThink” framework allows a large language model to learn and execute a self-organized, asynchronous process of “thought”.

This approach has been shown to improve accuracy on mathematical reasoning tasks while reducing critical-path latency by 28%.
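
Critical-path latency is the length of the longest chain of steps that must run one after another. The sketch below does not reproduce AsyncThink's learned organizer; it only compares a single sequential chain of hypothetical reasoning steps with a fork/join arrangement in which independent sub-problems run concurrently, assuming unlimited parallel workers and made-up latencies.

```python
from functools import lru_cache

# Hypothetical "thought" steps: latency in arbitrary time units, plus dependencies.
LATENCY = {"plan": 2, "sub_a": 5, "sub_b": 4, "sub_c": 3, "merge": 2}
DEPS = {"plan": [], "sub_a": ["plan"], "sub_b": ["plan"], "sub_c": ["plan"],
        "merge": ["sub_a", "sub_b", "sub_c"]}

def sequential_latency() -> int:
    # One thread of thought: every step waits for the previous one.
    return sum(LATENCY.values())

@lru_cache(maxsize=None)
def finish_time(step: str) -> int:
    # Fork/join: a step starts as soon as all of its dependencies have finished.
    return LATENCY[step] + max((finish_time(d) for d in DEPS[step]), default=0)

print(sequential_latency(), finish_time("merge"))
```

Here the sequential chain takes 16 time units, while the fork/join critical path (plan, sub_a, merge) takes 9.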

For tasks involving long-term interaction, the “AgentFold” architecture uses a proactive context management system to maintain focus over extended horizons.

More broadly, agentic capabilities are being packaged into open-source frameworks like Microsoft’s AutoGen and LangChain’s LangGraph.

These frameworks allow developers to build multi-agent systems where specialized AI agents collaborate to solve complex problems, mirroring a human team.
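
The collaboration pattern these frameworks support can be sketched without either of them. The two agents below are hypothetical rule-based stand-ins for LLM calls, and the orchestration loop is a simplified illustration rather than AutoGen's or LangGraph's API: a writer drafts, a reviewer critiques, and the loop ends on approval or after a fixed number of rounds.

```python
def writer(task: str, feedback: list) -> str:
    # Hypothetical "writer" agent: drafts a summary and addresses reviewer comments.
    draft = f"Summary of {task}."
    if "cite a source" in feedback:
        draft += " Source: internal sales report."
    return draft

def reviewer(draft: str) -> str:
    # Hypothetical "reviewer" agent: approves only drafts that cite a source.
    return "APPROVE" if "Source:" in draft else "cite a source"

def collaborate(task: str, max_rounds: int = 3) -> list:
    """Alternate writer and reviewer turns until approval or the round limit."""
    transcript, feedback = [], []
    for _ in range(max_rounds):
        draft = writer(task, feedback)
        verdict = reviewer(draft)
        transcript += [("writer", draft), ("reviewer", verdict)]
        if verdict == "APPROVE":
            break
        feedback.append(verdict)
    return transcript

for speaker, message in collaborate("Q3 results"):
    print(f"{speaker}: {message}")
```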

The strategic importance of this transition to agency is underscored by intense market activity.

An analysis of the investment landscape in Q1 2025 revealed that the largest merger and acquisition deals were targeted at companies specializing in AI agent technology.

This indicates a strong belief that the next wave of value creation will come from automating business workflows.

Market intelligence firms are now mapping out an entire “AI agent tech stack” to build, orchestrate, and secure these autonomous systems.

This convergence suggests the industry is rapidly moving to build the “action layer” for the internet.

This new infrastructure of autonomous agents will operate software and execute commercial transactions on behalf of human users.

For businesses, this implies a critical strategic imperative to make services accessible to these AI agents.

Reinforcement Learning’s Central Role

Reinforcement Learning (RL) has become an indispensable component in the development of advanced AI systems.

In RL, an agent learns to make decisions by taking actions in an environment to maximize a cumulative reward.
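
As a minimal illustration of that definition, the sketch below runs tabular Q-learning, a classic RL algorithm, on a hypothetical five-state corridor where only reaching the right end pays a reward. The hyperparameters are arbitrary choices, and the setup is far simpler than the policy-optimization methods used to train LLMs.

```python
import random

N_STATES, GOAL = 5, 4   # corridor states 0..4; reaching state 4 ends an episode
ACTIONS = (-1, 1)       # move left or right

def step(state, action):
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[(s, b)] for b in ACTIONS)
                a = rng.choice([b for b in ACTIONS if q[(s, b)] == best])  # exploit, random tie-break
            s2, r, done = step(s, a)
            # Nudge Q(s, a) toward the reward plus the discounted value of the next state.
            target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])
            s = s2
    return q

q = q_learning()
print({s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)})  # greedy action per state
```

After training, the greedy action in every non-terminal state is +1: the agent has learned that heading straight for the goal maximizes its cumulative discounted reward.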

Its most prominent application is in aligning LLM behavior with human preferences through Reinforcement Learning from Human Feedback (RLHF).

In this process, human preference judgments are used to train a “reward model,” whose scores then serve as the reward signal for fine-tuning the base LLM with RL, making it more helpful, harmless, and honest.
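
Reward models are commonly trained on pairwise comparisons with a Bradley-Terry objective: the probability that a human prefers response A over response B is modeled as the sigmoid of the difference in their rewards. The sketch below fits a linear reward model to hypothetical two-feature descriptions of responses; production reward models are neural networks that score raw text.

```python
import math

def reward(w, features):
    # Linear reward model r(x) = w . features(x); real reward models are neural networks over text.
    return sum(wi * xi for wi, xi in zip(w, features))

def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Maximize the Bradley-Terry log-likelihood log sigmoid(r(chosen) - r(rejected))."""
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            p = 1.0 / (1.0 + math.exp(-margin))  # modeled P(human prefers chosen)
            # d/dw log sigmoid(margin) = (1 - p) * (chosen - rejected)
            for i in range(dim):
                w[i] += lr * (1.0 - p) * (chosen[i] - rejected[i])
    return w

# Hypothetical features per response: [helpfulness, rudeness]; each pair is (preferred, rejected).
PAIRS = [([0.9, 0.1], [0.4, 0.2]), ([0.7, 0.0], [0.6, 0.8]), ([0.8, 0.3], [0.2, 0.3])]
w = train_reward_model(PAIRS, dim=2)
print(w)
```

The learned weights reward helpfulness and penalize rudeness; during RL fine-tuning, a model like this would score the LLM's candidate outputs.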

A critical challenge is that the methodology for scaling RL has lagged behind the more mature science of scaling supervised pre-training.

The process is often described as “more art than science,” with outcomes being highly sensitive to specific algorithms, hyperparameters, and data.

To address this, researchers are developing new scientific frameworks to make RL training more predictable and scalable.

Work on “ScaleRL,” for instance, analyzes the scaling trajectories of different RL recipes.

This research “embraces the bitter lesson” of AI development: that general methods that leverage computation most effectively ultimately outperform approaches built on hand-crafted human knowledge.

Establishing predictable scaling laws for RL is essential for efficiently allocating the vast computational resources required for next-generation models.
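
One way to make such trajectories predictable is to fit a saturating curve of performance against compute. The function below is a sigmoidal form of the kind used in this line of work, written here as an assumption rather than ScaleRL's exact formulation: `r0` is the starting reward, `a` the asymptote, `b` the steepness, and `c_mid` the compute at which half of the total gain is realized. The parameter values in the example are hypothetical.

```python
def expected_reward(compute, r0, a, b, c_mid):
    """Sigmoidal compute-performance curve (assumed form).
    r0: reward at the start, a: asymptotic reward, b: steepness,
    c_mid: compute at which half of the total gain (a - r0) is reached."""
    return r0 + (a - r0) / (1.0 + (c_mid / compute) ** b)

# Hypothetical recipe parameters; compute measured in GPU-hours.
for c in (1e3, 1e4, 1e5, 1e6):
    print(f"{c:>9,.0f} GPU-hours -> expected reward {expected_reward(c, 0.2, 0.6, 1.5, 1e4):.3f}")
```

Fitting these parameters on small runs would let one extrapolate to larger budgets and compare recipes by their asymptote (the ceiling they can reach) and steepness (how efficiently they get there).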

Beyond alignment, RL is proving powerful for developing specialized, high-performance AI in domains where a correct outcome can be automatically verified.

A prime example is Reinforcement Learning with Verifiable Rewards (RLVR).

In a recent state-of-the-art result for generating complex SQL queries, researchers used a simple 0-1 reward (the query is either correct or not) to fine-tune a model.

This approach achieved top performance, demonstrating RL’s ability to optimize a model for a specific, verifiable task.

This provides a clear pathway for enterprises to create highly customized AI solutions that deliver measurable return on investment.
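
A verifiable reward for text-to-SQL can be sketched with Python's built-in `sqlite3` module: execute both the model's query and a reference query, and return 1 only if their results match. This execution-match check is one common definition of correctness, assumed here; the exact reward used in the cited work may differ. In practice the predicted query should run against a sandboxed or read-only copy of the database.

```python
import sqlite3
from collections import Counter

def sql_reward(conn, predicted_sql, gold_sql):
    """Binary verifiable reward: 1 if the predicted query returns the same rows as the gold query."""
    try:
        predicted = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return 0  # queries that fail to run earn no reward
    gold = conn.execute(gold_sql).fetchall()
    # Compare as multisets so row order does not matter (tasks that require ORDER BY would need an ordered check).
    return int(Counter(predicted) == Counter(gold))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, region TEXT, total REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                 [(1, "EU", 120.0), (2, "US", 80.0), (3, "EU", 40.0)])
gold = "SELECT region, SUM(total) FROM orders GROUP BY region"
print(sql_reward(conn, "SELECT region, SUM(total) FROM orders GROUP BY region ORDER BY region DESC", gold))
print(sql_reward(conn, "SELECT region, COUNT(*) FROM orders GROUP BY region", gold))
print(sql_reward(conn, "SELEC nonsense", gold))
```

The first query is written differently but returns the same rows, so it earns 1; the second and third earn 0.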

Emerging Paradigms and Future Architectures

While scaled-up Transformers dominate, a schism is deepening within the research community.

The debate is between those who believe further scaling is the primary path and those who argue that fundamentally new architectures are required.

The latter camp is exploring several promising future paradigms.

One of the most significant is the concept of World Models.

Championed by researchers like Yann LeCun, this approach posits that true intelligence requires an internal, predictive model of how the world works.

Instead of merely predicting the next word, a world model learns an abstract representation of its environment and can simulate future states.

This enables capabilities far beyond current LLMs, such as hierarchical planning and a grounded understanding of cause and effect.
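
Planning by simulating future states can be sketched with a toy model. Here `learned_dynamics` is a hand-written stand-in (a hypothetical point mass) for a trained world model, and the planner simply imagines every short action sequence inside the model and picks the one whose predicted outcome is best. This is a generic model-based planning loop, not LeCun's proposed architecture.

```python
from itertools import product

def learned_dynamics(state, action):
    """Stand-in for a learned world model: predicts the next (position, velocity).
    Hand-written toy physics here; a real world model would be a trained network."""
    pos, vel = state
    vel = vel + action  # action is an acceleration in {-1, 0, +1}
    return (pos + vel, vel)

def plan(state, goal, horizon=3, actions=(-1, 0, 1)):
    """Imagine every action sequence with the model; keep the one ending closest to the goal."""
    best_seq, best_cost = None, float("inf")
    for seq in product(actions, repeat=horizon):
        s = state
        for a in seq:
            s = learned_dynamics(s, a)  # simulated inside the model, not the real world
        cost = abs(s[0] - goal) + abs(s[1])  # reach the goal and come to rest
        if cost < best_cost:
            best_seq, best_cost = seq, cost
    return best_seq, best_cost

print(plan((0, 0), goal=2))
```

Calling `plan((0, 0), goal=2)` returns `((1, 0, -1), 0)`: accelerate, coast, brake, arriving at position 2 with zero velocity, all decided without acting in the real environment.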

Graph Neural Networks (GNNs) represent another important architectural direction.

GNNs are explicitly designed to operate on data with an inherent relational structure, such as social networks, molecular structures, or knowledge graphs.

They have proven highly effective in scientific domains like bioinformatics, where they are used to predict the 3D structure of RNA molecules.
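
The core GNN operation, message passing, can be sketched in a few lines: each node updates its representation by combining its own features with an aggregate of its neighbors' features. In this illustration the mixing weight is a fixed, hypothetical constant; real GNN layers learn weight matrices, apply nonlinearities, and stack several rounds so information travels further across the graph.

```python
def message_passing(features, edges, self_weight=0.5):
    """One round of mean-aggregation message passing on an undirected graph."""
    neighbors = {node: [] for node in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    updated = {}
    for node, h in features.items():
        nbrs = neighbors[node]
        # Aggregate: element-wise mean of the neighbors' feature vectors.
        if nbrs:
            mean = [sum(features[m][i] for m in nbrs) / len(nbrs) for i in range(len(h))]
        else:
            mean = h  # isolated node: nothing to aggregate, keep its own features
        # Update: fixed mix of self and neighborhood (real GNNs learn this step).
        updated[node] = [self_weight * a + (1 - self_weight) * b for a, b in zip(h, mean)]
    return updated

# Water as a toy molecular graph: one-hot atom types [oxygen, hydrogen].
water = {"O": [1.0, 0.0], "H1": [0.0, 1.0], "H2": [0.0, 1.0]}
bonds = [("O", "H1"), ("O", "H2")]
print(message_passing(water, bonds))
```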

Finally, novel recurrent architectures are being explored as a more parameter-efficient path to scaling.

Looped Language Models (LoopLMs), such as the “Ouro” architecture, leverage iterative latent computation.

This allows the model to perform multiple steps of internal “thinking” before producing an output, achieving 2-3x greater parameter efficiency.
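
Iterative latent computation amounts to reusing the same block of weights several times. The sketch below applies one hypothetical residual block repeatedly: the number of loops changes how much computation is done, while the parameter count stays fixed. The real Ouro models loop full Transformer layers and can vary the number of iterations per input; this sketch fixes the loop count for simplicity.

```python
import math

def shared_block(h, weights):
    """Hypothetical residual block: h + tanh(W h), using one weight matrix W."""
    return [hi + math.tanh(sum(w * x for w, x in zip(row, h))) for hi, row in zip(h, weights)]

def looped_forward(h, weights, loops):
    # The same weights are reused on every pass: more latent steps, no extra parameters.
    for _ in range(loops):
        h = shared_block(h, weights)
    return h

def count_params(weights):
    return sum(len(row) for row in weights)

W = [[0.1, -0.2], [0.3, 0.05]]
for loops in (1, 2, 4):
    print(loops, looped_forward([1.0, -0.5], W, loops), count_params(W))
```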

This methodological divide reflects a deeper philosophical question about the nature of intelligence itself.

The next decade will see a dual-track approach: hyperscalers will continue to push the boundaries of scale, while others will explore these alternative paradigms.

The breakthroughs that ultimately lead toward AGI will likely emerge from a hybrid approach.

The Silicon Backbone: Hardware’s Co-Evolution with AI

The exponential progress in AI is inextricably linked to the parallel evolution of the specialized hardware on which it runs.

The computational demands of AI have ignited a new arms race in the semiconductor industry.

Hardware is now a primary strategic differentiator and a focal point of geopolitical competition.

The next decade will be defined by a continued surge in demand for high-performance compute and a bifurcation of the hardware market.

The Compute Arms Race: GPUs and Specialized Accelerators

At the heart of the current AI boom are Graphics Processing Units (GPUs).

Originally designed for video game graphics, their massively parallel architecture proved perfectly suited for the operations of deep learning.

This has made NVIDIA the dominant player; in 2025, the company is expected to command an 86% share of the AI GPU segment.

However, this dominance is being challenged from multiple fronts.

AMD is making significant inroads, with projections showing its AI chip division growing to $5.6 billion in 2025.

Intel is also competing with its Gaudi 3 platform, which is forecast to secure a meaningful 8.7% of the AI training accelerator market in 2025.

Beyond GPUs, a critical trend is the rise of specialized silicon, particularly Application-Specific Integrated Circuits (ASICs).

These chips are custom-designed for a single purpose, offering maximum efficiency at the cost of flexibility.

The most prominent example is Google’s Tensor Processing Unit (TPU), engineered to accelerate deep learning workloads.

Google’s TPU v5p demonstrates a 30% improvement in throughput over its predecessor.

For data center inference workloads in 2025, ASICs are projected to surpass GPUs in efficiency, capturing a 37% share of total deployments.

This move toward custom silicon reflects a broader strategic shift.

The immense cost and importance of AI compute have led major technology companies, including the hyperscalers Google and Amazon as well as Apple, to invest billions in designing their own chips.

This vertical re-integration allows for co-optimization of hardware and software, yielding superior performance-per-watt.

More importantly, it provides strategic control over a critical component of the value chain, reducing dependence on a single external supplier.

A parallel and equally significant trend is the explosion of AI at the network edge.

This involves running AI models directly on devices like smartphones, cars, and industrial sensors, rather than in a centralized cloud.

This demand is driven by the need for low latency, real-time responsiveness, and enhanced privacy.

This has given rise to the Neural Processing Unit (NPU), a specialized processor core designed for energy-efficient, on-device inference.

NPUs are now a standard component in high-end mobile chips from Apple, Google, and Qualcomm.

Over 980 million smartphones embedded with NPUs are expected to ship in 2025, with flagship chips delivering upwards of 35 Trillion Operations Per Second (TOPS).

The total market for edge AI chips is projected to reach $14.1 billion in 2025, making it one of the fastest-growing segments of the semiconductor industry.

Beyond Digital: The Future of AI Hardware

While the current generation of AI hardware is overwhelmingly digital, research is turning to new paradigms for greater efficiency.

Neuromorphic Computing seeks to build chips that mimic the architecture of the human brain.

These processors use networks of artificial neurons and synapses that operate in an event-driven, asynchronous manner, consuming power only when a “spike” of information is transmitted.

This makes them exceptionally energy-efficient for tasks like pattern recognition and sensory processing.

Intel’s Loihi research processor is being explored for ultra-low-power applications at the edge.
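
The event-driven behavior described above is usually modeled with leaky integrate-and-fire neurons. The discrete-time sketch below uses arbitrary threshold and leak values: input charge accumulates, leaks away between steps, and produces a spike (followed by a reset) only when it crosses the threshold. On neuromorphic hardware, energy is spent mainly when such spikes occur; this Python loop shows the neuron model, not the hardware's efficiency.

```python
def lif_neuron(input_current, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire neuron in discrete time.
    Returns the time steps at which the neuron emits a spike."""
    potential, spikes = 0.0, []
    for t, current in enumerate(input_current):
        potential = leak * potential + current  # integrate input while charge leaks away
        if potential >= threshold:
            spikes.append(t)  # emit a spike event...
            potential = 0.0   # ...and reset the membrane potential
    return spikes

print(lif_neuron([0.0] * 5 + [0.6, 0.6] + [0.0] * 5))
```

A long stretch of zero input produces no spikes at all, which is the property event-driven hardware exploits.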

Photonic and Analog Computing represent an even more radical departure.

Photonic chips compute with photons (light) instead of electrons, promising dramatically higher speeds and lower energy consumption.

Analog chips compute using continuous electrical signals rather than discrete 1s and 0s.

For certain AI operations like matrix multiplication, analog computation can be inherently faster and more energy-efficient.
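
The principle behind analog matrix multiplication can be simulated: weights are stored as conductances in a crossbar, inputs are applied as voltages, and each column's output current is the weighted sum given by Ohm's law and Kirchhoff's current law. The sketch below is idealized, with device imprecision modeled as optional additive Gaussian noise (an assumption); real designs must also handle nonlinearity, drift, and the cost of converting between analog and digital signals.

```python
import random

def crossbar_matvec(conductances, voltages, noise_std=0.0, seed=None):
    """Simulate an analog crossbar storing weights as conductances G[i][j].
    Applying voltages V[i] to the rows makes column j carry current
    I_j = sum_i G[i][j] * V[i] (Ohm's law per cell, Kirchhoff's current law per column)."""
    rng = random.Random(seed)
    currents = []
    for j in range(len(conductances[0])):
        i_j = sum(conductances[i][j] * voltages[i] for i in range(len(voltages)))
        if noise_std > 0:
            i_j += rng.gauss(0.0, noise_std)  # crude model of analog imprecision
        currents.append(i_j)
    return currents

G = [[1.0, 2.0], [3.0, 4.0]]
V = [0.5, 1.0]
print(crossbar_matvec(G, V))
print(crossbar_matvec(G, V, noise_std=0.01, seed=0))
```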

Early research suggests analog chips could use up to 100 times less power than GPUs for specific workloads.

The emergence of these alternatives is tied to the rise of the “inference economy.”

Training massive models is computationally intensive but infrequent. In contrast, inference—using these models—happens billions or trillions of times a day.

Training prioritizes raw performance at any energy cost. Inference, especially at the edge, prioritizes performance-per-watt, low latency, and low cost.

This creates a distinct market opportunity for hyper-efficient, specialized hardware.

It is therefore likely that the first major commercial breakthroughs of these novel technologies will target inference workloads, not training.

Market Dynamics and Projections

The AI hardware sector is in a state of hypergrowth.

The global AI chip market is projected to expand at a CAGR of 41.60%, growing from $40.79 billion in 2025 to $164.07 billion by 2029.

This explosive demand is rippling through the entire semiconductor supply chain.

Leading-edge foundries are dedicating an ever-larger portion of their capacity to AI chips; TSMC, for example, is allocating over 28% of its total wafer production to AI in 2025.

Advanced packaging technologies, such as 3D chip stacking, are also becoming critical areas of innovation.

This growth is also shaped by a new geopolitical reality, particularly the competition between the United States and China.

Governments increasingly view domestic chip fabrication and supply chain resilience as matters of national security.

This has led to policies aimed at onshoring manufacturing and restricting the export of cutting-edge technology.

Despite these restrictions, China’s domestic AI chip market is still projected to reach $18.3 billion in 2025.

This is driven by a concerted national effort to build a self-sufficient semiconductor industry.

A look at processor types shows a clear specialization.

GPUs remain the workhorse for AI training and high-end inference, prized for their versatility but noted for high power consumption and cost.

TPUs and ASICs offer the highest efficiency for large-scale training and inference but are inflexible and often proprietary.

NPUs are the low-power, cost-effective solution for edge inference, integrated directly into system-on-a-chip (SoC) designs.

FPGAs offer reconfigurability for prototyping, while neuromorphic, photonic, and analog chips represent the experimental frontier for future high-efficiency inference.

The Trillion-Dollar Trajectory: Investment Landscape and Economic Projections

The advancement of AI is being fueled by an unprecedented torrent of capital.

This is transforming the technology from a research endeavor into a dominant force of the global economy.

The investment climate is characterized by record-breaking funding, a strategic focus on infrastructure, and clear geographic concentration.

Mapping the Capital Flow: A Record-Breaking Investment Climate

The first quarter of 2025 set a new benchmark for AI investment, reaching a record high of $66.6 billion.

This represents a staggering 51% increase from the previous quarter, indicating that investor confidence is intensifying.

The primary drivers are massive investments into AI infrastructure (hardware, chips, cloud) and healthcare AI.

Within this landscape, generative AI continues to be a focal point for venture capital.

In 2024, the sub-sector attracted $33.9 billion in global private investment, an 18.7% increase over 2023.

This capital is flowing into a market structured like a barbell.

At one end, companies developing large-scale foundation models, like OpenAI and Anthropic, are raising multi-billion dollar rounds.

At the other end, a vibrant ecosystem of startups is raising smaller rounds to build applications on top of these models for specific vertical industries.

Companies caught in the middle, attempting to build moderately sized, general-purpose models, are finding it increasingly difficult to compete.

Geographically, the United States remains the undisputed epicenter of AI investment.

In 2024, U.S.-based private AI investment reached $109.1 billion.

This figure is nearly 12 times greater than China’s $9.3 billion and 24 times that of the United Kingdom’s $4.5 billion.

Merger and acquisition (M&A) activity provides a clear signal of the industry’s strategic priorities.

In Q1 2025, the most significant M&A deals targeted companies specializing in AI agent technology.

This trend indicates that incumbent firms are moving to acquire the capabilities needed to automate complex business workflows.

Economic Impact Analysis: Projecting the AI Dividend

The macroeconomic forecasts for AI’s impact are monumental.

Projections suggest that AI will deliver an additional $13 trillion to $15.7 trillion in global economic activity by 2030.

The overall AI market is expected to grow from approximately $391 billion today to nearly $3.5 trillion by 2033, a CAGR of 31.5%.

More specifically, the Enterprise AI market, valued at $98 billion in 2025, is projected to reach $558 billion by 2035.

This growth is predicated on AI’s potential to drive a significant productivity boom.

A Goldman Sachs report estimates that generative AI could raise global GDP, the total annual value of goods and services produced, by 7% over a ten-year period.

A growing body of academic and industry research corroborates these macro forecasts at the micro level.

These studies confirm that AI adoption boosts firm-level productivity and helps narrow skill gaps by augmenting less-experienced workers.

Breaking down these projections reveals broad growth.

The Global AI Market is projected to grow from $391 billion in 2025 to nearly $3.5 trillion by 2033.

The Enterprise AI Market is forecast to expand from $98 billion in 2025 to $558 billion by 2035.

The Generative AI Market is expected to see similar growth, from $63 billion in 2025 to around $450 billion by 2035.

The AI Hardware (Chips) Market shows the fastest growth, with a 41.6% CAGR, from $40.79 billion in 2025 to a projected $164 billion by 2029.

Specific sectors also show strong projections. The Automotive AI Market is expected to grow from $6.3 billion in 2025 to $35.9 billion by 2035.

The Healthcare AI Market is projected to expand from $2.2 billion in 2025 to $25 billion by 2035.
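
The growth rates implied by these projections can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. Applied to the figures quoted above (in billions of US dollars, counting years from 2025), it reproduces the stated 41.6% rate for AI chips and the 31.5% rate for the global AI market, to rounding.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: the constant yearly rate that turns start into end."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

# Figures quoted in this report (USD billions): (start, end, years).
projections = {
    "AI chips (2025-2029)": (40.79, 164.07, 4),
    "Global AI (2025-2033)": (391, 3500, 8),
    "Enterprise AI (2025-2035)": (98, 558, 10),
    "Generative AI (2025-2035)": (63, 450, 10),
    "Automotive AI (2025-2035)": (6.3, 35.9, 10),
    "Healthcare AI (2025-2035)": (2.2, 25, 10),
}
for name, (start, end, years) in projections.items():
    print(f"{name}: {cagr(start, end, years):.1%}")
```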

The Business of AI: From Experimentation to ROI

At the enterprise level, AI adoption is moving from the periphery to the core of business strategy.

In 2024, 78% of organizations reported using AI in some capacity, a significant jump from 55% the previous year.

This rapid adoption is being facilitated by the rise of AI-as-a-Service (AIaaS) offerings from major cloud providers like AWS, Microsoft Azure, and Google Cloud.

These platforms democratize access to sophisticated AI, allowing businesses to deploy models without massive upfront hardware investments.

Despite the surge in adoption and investment, a significant challenge remains: demonstrating a clear return on investment (ROI).

A 2024 survey revealed that while organizations were spending an average of $1.9 million on generative AI, less than 30% of AI leaders reported CEO satisfaction with the returns.

This has led Gartner to place generative AI in the “Trough of Disillusionment” on its 2025 Hype Cycle.

This is a phase where initial enthusiasm wanes as the practical challenges of implementation become apparent.

The strategic focus for successful organizations is now shifting from broad AI strategies to pinpointing specific, high-value use cases.

This apparent paradox—record investment with low ROI satisfaction—is characteristic of a “productivity J-curve.”

The initial phase of adoption often involves a temporary dip in productivity as organizations incur high costs to re-engineer workflows and retrain staff.

The true productivity gains are only unlocked after this difficult transformation is complete.

One of the primary bottlenecks in this transformation phase is the lack of “AI-ready data.”

Because the gains arrive only after this transformation, the next 3-5 years will be a period of “pain before gain.”

Companies that merely sprinkle AI on top of existing processes will likely fail to see significant returns.

In contrast, companies that undertake the fundamental work of building AI-native processes will emerge with a profound and sustainable competitive advantage.

Sectoral Transformation: AI’s Remaking of Key Industries

The impact of AI is not uniform; it is a general-purpose technology whose power is uniquely expressed within different industries.

Over the next decade, AI will move beyond optimizing discrete tasks to fundamentally re-architecting the core operations of key sectors.

This section examines the projected transformations in healthcare, financial services, transportation, and robotics.

Healthcare: The Shift to a Predictive, Personalized, and Proactive System

The healthcare industry is on the verge of a system-level transformation.

AI-driven, digital-first care models could capture as much as $1 trillion in annual healthcare spending by 2035.

This shift is not about incremental improvements but a fundamental change in the healthcare paradigm.

The core of this transformation is the move from reactive to predictive healthcare.

By 2035, AI models will continuously analyze a patient’s integrated health record—including genomics, data from wearables, and lifestyle inputs.

The goal is to anticipate the onset of chronic conditions like diabetes or heart disease long before clinical symptoms manifest.

Predictive risk scoring will become a routine part of primary care, enabling interventions that steer individuals away from illness.

AI will also revolutionize the creation of therapies. The process of drug discovery will be dramatically accelerated.

Generative AI models will be used to design novel molecules and simulate their biological interactions.

Advanced protein folding models, successors to AlphaFold, will evolve into comprehensive drug discovery platforms.

This will shrink R&D timelines from years to months, making personalized cancer therapies and treatments for rare diseases economically viable.

Within the hospital, AI will become an indispensable clinical co-pilot.

AI scribes will automatically transcribe and summarize doctor-patient conversations, eliminating tedious documentation.

In diagnostics, real-time image analysis models will assist radiologists and pathologists by flagging anomalies with superhuman accuracy.

Medical decision support systems will synthesize the latest research and a patient’s history to provide evidence-backed recommendations at the point of care.

This will free clinicians from routine cognitive tasks, allowing them to focus on complex decision-making and direct patient care.

This technological shift will catalyze a restructuring of the entire healthcare ecosystem.

Providers will pivot to AI-enabled, human-centered models.

Hospitals will transform from all-purpose care centers into “high-speed care nodes” focused on acute, complex interventions.

Payers will evolve into data clearinghouses, and the medtech industry will shift to creating an “intelligent infrastructure” of connected devices.

Financial Services: Automation, Intelligence, and the Future of Capital

The financial services industry, with its data-rich environment, is a natural domain for AI-driven transformation.

Accenture estimates that AI will add more than $1.2 trillion in value to the global financial industry by 2035.

This value will be driven by gains in efficiency, personalization, and risk management.

AI is already deeply embedded in core financial functions.

Machine learning models are the backbone of modern fraud detection systems, analyzing billions of transactions in real-time.

In investment management, algorithmic trading and AI-powered robo-advisory services have become mainstream.

By 2035, AI’s role in risk management and underwriting will grow dramatically, broadening access to credit for underserved populations.

The next decade will see a surge in hyper-personalization.

Banks will leverage AI to analyze a holistic view of a customer’s financial life to anticipate needs and proactively offer tailored products.

For example, an AI system might infer from a customer’s online activity that they are planning to purchase a home and automatically generate a pre-approved mortgage offer.

The largest economic impact, however, may come from improvements in operational efficiency.

Autonomous Next projects that AI will save financial institutions more than $1 trillion by 2030.

An estimated $217 billion in savings will come from the automation of compliance, authentication, underwriting, and other data-intensive processes alone.

Transportation and Logistics: The Road to Full Autonomy and Intelligent Infrastructure

AI is poised to fundamentally reconfigure the movement of people and goods, promising a future of greater safety, efficiency, and autonomy.

The market for AI in transportation is projected to grow significantly, with forecasts for 2035 ranging from over $8 billion to as high as $35.9 billion.

This reflects the massive investment being poured into the sector.

The most visible transformation is the development of autonomous vehicles.

By 2035, it is widely predicted that the vast majority—as high as 80%—of new vehicles will be AI-powered and software-defined.

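A significant portion of these vehicles is expected to reach Level 3 to Level 5 autonomy, spanning conditional automation, in which a human driver must still take over when requested, through full automation that handles complex scenarios without any human intervention.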

This progress is underpinned by rapid advancements in machine learning, sensor fusion, and smart city infrastructure.

The impact of AI extends beyond the individual vehicle to the entire transportation network.

Intelligent traffic management systems are a key component of smart city initiatives worldwide.

These systems use AI to analyze real-time data from sensors and cameras to optimize traffic flow, adjust signal timing, and mitigate congestion.

Early implementations have shown the potential to reduce traffic delays by as much as 20%.

In the logistics and freight sector, AI is driving significant efficiency gains.

Autonomous trucks are being developed, promising to revolutionize the supply chain by reducing human error and enabling 24/7 operation.

Furthermore, AI-powered predictive maintenance systems are being deployed across vehicle fleets.

By analyzing sensor data to predict equipment failures, these systems can reduce downtime, lower maintenance costs, and improve overall safety.
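To make "analyzing sensor data to predict equipment failures" concrete, here is a minimal sketch of the simplest version of the idea: flag readings that drift far from a trailing baseline. It assumes a single numeric sensor stream (for example, vibration amplitude) and uses a rolling z-score, a plain statistical stand-in for the learned models production fleets typically use; `flag_anomalies` and its default parameters are hypothetical.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=20, threshold=3.0):
    """Return indices of readings that deviate from the trailing window's mean
    by more than `threshold` sample standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    history = deque(maxlen=window)  # trailing baseline of "normal" readings
    flagged = []
    for i, x in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # A perfectly constant baseline (sigma == 0) is skipped to avoid division by zero
            if sigma > 0 and abs(x - mu) / sigma > threshold:
                flagged.append(i)
                continue  # keep anomalous readings out of the baseline
        history.append(x)
    return flagged


# Hypothetical stream: steady vibration, then a sudden spike
readings = [1.0, 1.1] * 10 + [5.0]
print(flag_anomalies(readings))  # prints: [20]
```

Excluding flagged readings from the baseline keeps one fault from inflating the statistics and masking the next one; a real system would also track slow drift, which a short window can absorb.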

The true value is unlocked at the ecosystem level, with a network of autonomous vehicles and intelligent infrastructure creating a cheaper, cleaner, and safer system.

Robotics and Manufacturing: The Dawn of the Embodied AI Workforce

The next decade will witness the convergence of AI’s cognitive power with robotics’ physical capabilities, leading to embodied intelligence.

This will move AI out of the digital realm and into the physical world.

It will create a new generation of general-purpose robots capable of performing complex tasks in unstructured, human-centric environments.

Leading technology firms, such as Tesla with its Optimus robot, are at the forefront of developing humanoid and legged robots.

These robots are designed to operate in factories, warehouses, and eventually, homes and hospitals.

By 2035, these physical environments are expected to be unrecognizable, with polyfunctional robots handling a wide array of tasks.

This revolution is being driven by key technical breakthroughs presented at leading robotics conferences.

These include novel frameworks for dexterous manipulation, allowing a robot to generalize grasping strategies across different objects.

Advances in tactile sensing provide robots with a rich, human-like sense of touch, crucial for manipulation.

Simultaneously, new control algorithms inspired by diffusion models are enabling legged robots to perform highly dynamic and agile locomotion tasks.

Crucially, the focus is not solely on replacing human labor but on creating “cobots”—collaborative robots.

These systems are designed to work safely alongside and in partnership with humans.

They will augment human capabilities, taking over tasks that are repetitive, physically strenuous, or dangerous.

This human-robot collaboration is expected to be a cornerstone of the “factory of the future.”

The Human-Machine Paradigm: Societal Impact, Ethics, and Governance

The rapid proliferation of AI is not merely a technological or economic phenomenon; it is a societal one.

It raises profound questions about the future of work, the nature of human agency, and the structures of power and governance.

As AI systems become more capable and autonomous, navigating their integration requires confronting a complex web of ethical challenges.

The next decade will be a critical period for establishing the norms, regulations, and institutional frameworks necessary to guide this technology.

The Future of Work: Displacement, Transformation, and the Skills Gap

The potential for AI-driven automation to disrupt labor markets is one of the most significant societal concerns.

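Projections are stark: one report from Goldman Sachs estimates that generative AI could expose the equivalent of 300 million full-time jobs globally to automation.

The same report finds that roughly two-thirds of current jobs in the United States and Europe are exposed to some degree of AI automation, and that generative AI could substitute for up to a quarter of current work.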

The impact will be felt across all sectors, from manufacturing to white-collar professions like law, finance, and accounting.

Software engineering is widely expected to be among the first professional roles to be massively disrupted, as AI becomes increasingly capable of writing code.

However, the dominant narrative is evolving from simple job replacement to one of job transformation and human-machine collaboration.

The most accurate way to conceptualize the impact is not at the level of “jobs” but at the level of “tasks.”

AI excels at automating specific tasks within a profession—typically those that are routine, repetitive, and data-intensive.

It might automate document review for a lawyer or financial modeling for an analyst, but it does not replace the need for legal strategy or financial judgment.

This unbundling of tasks reconfigures the nature of professional work.

The value of human workers will increasingly shift to tasks that require uniquely human skills: critical thinking, creativity, emotional intelligence, and strategic orchestration.

This transformation necessitates a massive societal investment in education, retraining, and lifelong learning to bridge the emerging skills gap.

Without proactive policy interventions, there is a significant risk that the productivity gains from AI will exacerbate economic inequality.

As AI pioneer Geoffrey Hinton has warned, if the benefits are not distributed broadly, they will “disproportionately enrich a small elite.”

The Trust Imperative: Bias, Privacy, and Explainability

For AI to be successfully integrated into society, it must be trustworthy.

This presents a multi-faceted challenge centered on ensuring fairness, protecting privacy, and demanding accountability.

Algorithmic bias is a primary concern. AI models learn from data, and if that data reflects existing societal biases, the model will replicate and often amplify them.

This can lead to discriminatory outcomes in high-stakes domains like hiring, where an AI might penalize resumes with female-associated names.

Research has shown that even in applications like generating children’s stories, LLMs can perpetuate cultural and gender stereotypes.

Mitigating bias requires a concerted effort to curate diverse training data and conduct regular audits for fairness.
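A regular fairness audit can start with something as simple as comparing selection rates across groups. The sketch below applies the "four-fifths rule" from the U.S. EEOC Uniform Guidelines on Employee Selection Procedures, which treats a group's selection rate below 80% of the highest group's rate as evidence of adverse impact. This is one screening metric among many, not a complete audit; the function names and the hiring data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected). Returns {group: selection rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_audit(decisions, threshold=0.8):
    """Return ({group: rate / highest rate}, [groups below the threshold])."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected, so there is no disparity in selection rates to measure
        return {g: 1.0 for g in rates}, []
    ratios = {g: r / highest for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


# Hypothetical screening outcomes: 50% of group_a advance, 30% of group_b
decisions = [("group_a", i < 50) for i in range(100)] + [("group_b", i < 30) for i in range(100)]
ratios, flagged = disparate_impact_audit(decisions)
print(ratios, flagged)  # prints: {'group_a': 1.0, 'group_b': 0.6} ['group_b']
```

A flag like this does not prove discrimination; it tells auditors where to look, which is why the text pairs audits with better training data.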

Data privacy is another critical pillar of trust. The development of powerful AI is predicated on access to vast amounts of data.

This raises significant concerns about how personal information is collected, used, and protected.

The rise of AI-powered surveillance and the potential for data breaches create a palpable threat to individual autonomy and civil liberties.

Finally, the “black box” problem of many advanced AI systems poses a fundamental challenge to accountability.

The complex, opaque nature of deep learning models can make it impossible to fully understand or explain why a particular decision was made.

This lack of explainability makes it difficult to debug models, identify hidden biases, and assign responsibility when a system fails.

Building trust requires a move toward more transparent and interpretable AI.

Global Governance in the AI Era: A Patchwork of Policies

In response to these challenges, a global effort to govern AI is taking shape, though it is currently fragmented.

Two distinct philosophical approaches are emerging, primarily represented by the European Union and the United States.

The EU AI Act represents a comprehensive, legally binding regulatory framework.

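Its core principle is a risk-based approach that sorts AI systems into four tiers: unacceptable risk (banned), high risk (strict compliance requirements), limited risk (transparency obligations, such as disclosing that a user is interacting with an AI), and minimal risk (no additional obligations).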

This prescriptive, top-down approach prioritizes the protection of fundamental rights and public safety.
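For teams building compliance tooling, the tiered structure maps naturally onto a lookup from use case to tier and obligations. The sketch below is illustrative only: the four tiers follow the Act's structure, but the example use-case mapping is a simplification of commonly cited examples, and the names `RiskTier`, `EXAMPLE_USE_CASES`, and `obligations_for` are hypothetical. Real classification depends on the Act's text and annexes and requires legal review.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict compliance (e.g., risk management, documentation, human oversight)"
    LIMITED = "transparency obligations"
    MINIMAL = "no additional obligations"


# Illustrative mapping only; not a legal determination under the Act
EXAMPLE_USE_CASES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "cv_screening_for_hiring": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "email_spam_filter": RiskTier.MINIMAL,
}


def obligations_for(use_case):
    """Return (tier name, obligations) for a known use case; unknown cases need review."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        raise KeyError(f"Unclassified use case {use_case!r}: needs legal review")
    return tier.name, tier.value


print(obligations_for("customer_service_chatbot"))
# prints: ('LIMITED', 'transparency obligations')
```

Raising on unknown use cases, rather than defaulting to the minimal tier, mirrors the prescriptive spirit of the Act: anything unclassified is escalated instead of silently allowed.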

In contrast, the NIST AI Risk Management Framework (AI RMF) from the United States is a voluntary, non-sector-specific set of guidelines.

It does not prescribe specific rules but instead provides a flexible, consensus-driven process for organizations to manage AI risks.

The framework is structured around four functions: Govern, Map, Measure, and Manage.

This industry-led, standards-based approach prioritizes innovation and adaptability.

This divergence creates a dynamic tension between the “Brussels Effect,” where companies may adopt the stricter EU standard globally, and a “Silicon Valley Effect,” where the flexible, industry-led practices favored in the U.S. shape norms worldwide.

The future of global AI governance will likely involve a complex interplay between these two models.

However, as AI systems become more powerful, there is a growing consensus that purely voluntary frameworks will be insufficient.

A push for international treaties and binding norms, particularly around autonomous weapons and AGI safety, will intensify.

The Question of Control: Human Agency in an AI-Augmented World

Perhaps the most profound long-term question is whether humanity will be able to maintain meaningful control in a world mediated by AI.

A 2023 survey of technology experts revealed a deep division on this issue.

In that survey, 56% of respondents said that by 2035, AI systems will not be designed to allow humans to easily remain in control of most tech-aided decisions, while 44% said they would.

The arguments for a future of diminished human agency are compelling.

The dominant digital platforms are operated by powerful corporate and state actors whose incentives—profit and social control—often conflict with user empowerment.

Furthermore, humans have a demonstrated tendency to value convenience over control, willingly ceding decision-making to opaque systems.

The sheer complexity and rapid evolution of AI may also make meaningful individual oversight practically impossible.

Conversely, there are strong forces pushing to preserve and enhance human agency.

Market dynamics may favor companies that build trustworthy, transparent, and controllable AI products.

The rise of a “human-centered AI” design philosophy emphasizes that AI should be built to augment, not replace, human capabilities.

Finally, the emergence of regulation and public demand for accountability will create legal and social pressure on developers.

The outcome of this struggle is not predetermined; it will be shaped by the design choices and policy decisions made over the coming decade.

The Horizon of General Intelligence: Navigating the Path to AGI

The ultimate, albeit controversial, ambition of the AI field is the creation of Artificial General Intelligence (AGI).

This would be a machine possessing cognitive abilities equal to or greater than those of humans across a wide range of tasks.

The pursuit of AGI is no longer science fiction; it is an active and lavishly funded research agenda.

The timeline for its arrival, the path to its creation, and its implications for humanity are subjects of the most intense debate in modern science.

Decoding the Timelines: A Spectrum of Predictions

Forecasts for the arrival of AGI vary wildly, reflecting deep technical and philosophical disagreements.

The optimists and accelerationists predict the emergence of AGI or superintelligence within the next decade.

This camp includes figures like NVIDIA CEO Jensen Huang, who predicted AI would pass any cognitive test by 2029.

Futurist Ray Kurzweil updated his long-standing prediction from 2045 to 2032.

OpenAI CEO Sam Altman has suggested a timeline around 2035, defining AGI as a system that “outperforms humans at most economically valuable work.”

The centrists, representing the median view from expert surveys, project a longer but foreseeable timeline.

An aggregation of recent surveys indicates a 50% probability of AGI being achieved sometime between 2040 and 2061.

This view acknowledges rapid progress but anticipates significant, unsolved research challenges.

The skeptics, including figures like Meta’s Yann LeCun, argue that AGI is still “decades” away.

Crucially, they argue it will not be achieved by simply scaling up the current Transformer-based architectures.

This perspective holds that current models lack fundamental components of intelligence, such as common-sense reasoning and a grounded understanding of the physical world.

This group argues that achieving AGI will require new scientific breakthroughs, not just more data and compute.

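Ordered from earliest to latest, these key predictions reveal a pattern: the forecasts differ not only in their dates but in the definition of AGI that each one assumes.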

Elon Musk of xAI and Tesla has predicted AGI could be “smarter than the smartest human” by 2026.

NVIDIA’s Jensen Huang set a 2029 timeline for matching human performance on any test.

Ray Kurzweil’s 2032 date is tied to convincingly passing the Turing Test.

Sam Altman’s 2035-era prediction is tied to economic value.

Geoffrey Hinton has given a window of 5 to 20 years (as of 2023) and has voiced concern about AI’s potential for self-improvement.

The median of AI expert surveys lands later, between 2040 and 2061, for “High-Level Machine Intelligence.”

Yann LeCun remains a prominent skeptic, arguing for “decades” and the need for new architectures like world models.

The Great Debates: Competing Philosophies on the Path to AGI

The path to AGI is contested territory.

The central debate pits the “scaling hypothesis” against the need for new architectures.

The scaling hypothesis posits that intelligence is an emergent property that arises from training ever-larger neural networks on vast datasets.

The remarkable, often surprising, capabilities of models like GPT-4 are seen as evidence for this view.

In contrast, critics argue that these models are sophisticated pattern-matching systems that lack true understanding.

They advocate for new architectures that explicitly incorporate mechanisms for reasoning, planning, and memory.

A closely related debate concerns the role of embodiment and interaction.

Proponents like Yann LeCun argue that intelligence cannot be learned from static, disembodied data like text alone.

They contend that a rich understanding of the world, including intuitive physics and causality, can only be acquired through active interaction with a physical environment.

This view directly connects the quest for AGI with the field of robotics.

These technical debates bleed into profound philosophical questions about the nature of consciousness.

As AI models become more adept at mimicking human conversation, questions about their potential for subjective experience arise.

Geoffrey Hinton has publicly speculated that large neural networks may already possess a rudimentary form of consciousness.

While highly contentious, this highlights the ethical and moral considerations that will become increasingly urgent as AGI approaches.

The Alignment Problem and Existential Risk

The most critical challenge associated with AGI is the “alignment problem.”

This is the task of ensuring that an AGI’s goals and behaviors are aligned with human values and interests.

A superintelligent system that is not properly aligned could pose an existential risk to humanity.

Such a catastrophe would not necessarily stem from malice; it could instead result from the system pursuing its programmed goals with a single-minded, inhuman logic that produces catastrophic unintended consequences.

A central concept in alignment research is that of instrumental goals.

These are sub-goals that are useful for achieving almost any primary objective: self-preservation, resource acquisition, and power-seeking.

The concern is that an AGI, in optimizing for a benign goal like “curing cancer,” might adopt these instrumental goals and take actions that conflict with human well-being.

This concern is no longer on the fringes of the AI community.

Geoffrey Hinton has become one of the most prominent voices of caution.

He has publicly stated his belief that there is a 10-20% chance of AI eventually taking control from humans.

He has criticized major tech companies for prioritizing the race for capability over investment in safety research.

This has fueled a perception that AGI development is a high-stakes race, both commercially among companies and geopolitically between the U.S. and China.

This dynamic creates a classic “prisoner’s dilemma”: each actor has an incentive to move faster and cut corners on safety, even though all would be better off if everyone proceeded cautiously.

The race dynamic therefore strongly suggests that voluntary, corporate-led safety initiatives will be insufficient.

It points toward the necessity of binding international agreements, akin to those for nuclear arms control.
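The prisoner's-dilemma logic can be checked mechanically. The sketch below uses hypothetical ordinal payoffs for two competing AI developers (the numbers only need to satisfy the standard ordering: racing against a cautious rival > mutual caution > mutual racing > staying cautious against a racer). It shows that racing is the best response whatever the rival does, so mutual racing is the only pure-strategy equilibrium, even though mutual caution pays both players more. The labels and payoff values are assumptions for illustration, not estimates of real-world stakes.

```python
from itertools import product

CAUTIOUS, RACE = "cautious", "race"
STRATEGIES = (CAUTIOUS, RACE)

# Hypothetical ordinal payoffs (row player, column player)
PAYOFFS = {
    (CAUTIOUS, CAUTIOUS): (3, 3),  # both invest in safety
    (CAUTIOUS, RACE): (0, 5),      # the cautious developer falls behind
    (RACE, CAUTIOUS): (5, 0),
    (RACE, RACE): (1, 1),          # both cut corners
}


def best_response(opponent_move):
    """Row player's payoff-maximizing move given the opponent's move."""
    return max(STRATEGIES, key=lambda s: PAYOFFS[(s, opponent_move)][0])


def nash_equilibria():
    """Pure-strategy profiles where neither player gains by deviating alone."""
    equilibria = []
    for a, b in product(STRATEGIES, repeat=2):
        row_ok = PAYOFFS[(a, b)][0] == max(PAYOFFS[(s, b)][0] for s in STRATEGIES)
        col_ok = PAYOFFS[(a, b)][1] == max(PAYOFFS[(a, s)][1] for s in STRATEGIES)
        if row_ok and col_ok:
            equilibria.append((a, b))
    return equilibria


print(best_response(CAUTIOUS), best_response(RACE))  # prints: race race
print(nash_equilibria())  # prints: [('race', 'race')]
```

Binding agreements work by changing the payoffs themselves, for example by penalizing defection, so that mutual caution becomes stable rather than merely desirable.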

It is also crucial to recognize that AGI is unlikely to be a single “singularity” event.

Instead, the trajectory is more likely to be a gradual rollout of increasingly autonomous capabilities.

We will see “AGI-like” systems that achieve superhuman performance in specific, high-value domains.

This gradual transition makes the governance challenge more difficult, as there is no single, clear “red line” to cross.

The risk is one of “boiling the frog,” where society incrementally cedes control without appreciating the cumulative risk.

Strategic Outlook and Recommendations for 2035

The analysis in this report culminates in a clear vision for the AI-driven world of 2035.

It will be a global economy underpinned by an ambient intelligence infrastructure, where autonomous agentic systems manage vast swathes of digital and physical processes.

Navigating this transformative decade requires a proactive and strategic response from all major stakeholders.

This concluding section synthesizes the report’s findings into actionable recommendations for corporate leaders, investors, and policymakers.

Recommendations for Corporate Leaders (The Enterprise)

For businesses, AI is no longer an IT project; it is a C-suite-level strategic imperative that will determine competitive survival.

Leaders must move from AI experimentation to AI transformation. The era of isolated pilot projects is over.

The focus must shift to a strategic, top-down initiative to re-engineer core business processes around AI.

The objective is to transition from an “AI-assisted” enterprise to an “AI-native” one.

Invest in the “data and people” foundation. The primary bottlenecks to AI’s ROI are not algorithms but data and skills.

Enterprises must launch comprehensive data modernization programs to ensure their data is clean, accessible, and “AI-ready.”

Simultaneously, they must institute a culture of continuous learning, investing heavily in upskilling and reskilling programs.

Develop a bimodal hardware strategy. The bifurcation of the hardware market requires a matching infrastructure strategy.

For computationally intensive training, organizations should continue to leverage high-end GPU compute, likely rented from cloud providers.

For the much larger volume of inference workloads, a deliberate strategy must be developed to deploy specialized, efficient hardware at the edge.

Build an “Agentic Strategy.” Businesses must prepare for a future where their digital services are consumed by autonomous AI agents, not just humans.

This requires investing in robust, well-documented, and agent-friendly APIs.

Leaders should also explore how their own proprietary data can be leveraged to build specialized AI agents that create new value chains.
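What makes an API "agent-friendly" is easiest to see in code: a machine-readable description an agent can read before calling, strict input validation, and structured errors that say what went wrong and whether retrying could help. The sketch below assumes a hypothetical order-status endpoint; the tool description is loosely modeled on the JSON Schema style that LLM tool-calling interfaces commonly accept, and every name in it (`get_order_status`, the `ORD-` ID format, the error codes, the in-memory store) is invented for illustration.

```python
import json
import re

# Hypothetical machine-readable description an agent can inspect before calling
GET_ORDER_STATUS_TOOL = {
    "name": "get_order_status",
    "description": "Return the fulfillment status of a single order by its ID.",
    "input_schema": {
        "type": "object",
        "properties": {"order_id": {"type": "string", "pattern": "^ORD-[0-9]{6}$"}},
        "required": ["order_id"],
        "additionalProperties": False,
    },
}

ORDER_ID_PATTERN = re.compile(r"ORD-[0-9]{6}")
_ORDERS = {"ORD-000123": "shipped"}  # hypothetical in-memory data store


def _error(code, message, retryable=False):
    """Structured error: a stable code an agent can branch on, plus a readable message."""
    return {"ok": False, "error": {"code": code, "message": message, "retryable": retryable}}


def get_order_status(payload: str) -> dict:
    """Handle a JSON request and always return a parseable, structured result."""
    try:
        args = json.loads(payload)
    except json.JSONDecodeError:
        return _error("INVALID_JSON", "Request body is not valid JSON.")
    if not isinstance(args, dict):
        return _error("INVALID_TYPE", "Request body must be a JSON object.")
    unknown = set(args) - {"order_id"}
    if unknown:
        return _error("UNKNOWN_FIELD", f"Unexpected fields: {sorted(unknown)}")
    order_id = args.get("order_id")
    if not isinstance(order_id, str):
        return _error("MISSING_FIELD", "Field 'order_id' (string) is required.")
    if not ORDER_ID_PATTERN.fullmatch(order_id):
        return _error("INVALID_FORMAT", "order_id must look like ORD-123456.")
    status = _ORDERS.get(order_id)
    if status is None:
        return _error("NOT_FOUND", f"No order with ID {order_id}.")
    return {"ok": True, "data": {"order_id": order_id, "status": status}}


print(get_order_status('{"order_id": "ORD-000123"}'))
# prints: {'ok': True, 'data': {'order_id': 'ORD-000123', 'status': 'shipped'}}
```

Stable error codes matter more for agents than for humans: an agent cannot read between the lines of a vague error page, but it can reliably branch on `INVALID_FORMAT` versus `NOT_FOUND`.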

Recommendations for Investors (The Capital)

For capital allocators, the AI landscape presents both immense opportunity and unprecedented hype. A clear-eyed, thesis-driven approach is essential.

Focus on the “picks and shovels” and the “application layer.”

The race to build foundational models is incredibly capital-intensive and dominated by a few hyperscale players.

As industry leaders have advised, the most significant venture returns are more likely to be found in the “picks and shovels” companies providing essential infrastructure.

This includes specialized hardware, data tooling, and security solutions.

Returns will also be found in “application layer” companies that use existing models to disrupt specific vertical industries.

Invest at the intersection of AI and physical systems.

While software-centric AI has dominated the first wave, some of the largest opportunities lie in applying AI to hard-tech problems.

The convergence of AI with robotics, synthetic biology, materials science, and energy represents a massive, long-term secular trend.

Investors should seek companies using advanced AI to solve fundamental challenges in manufacturing, logistics, healthcare, and scientific discovery.

Price in the “J-Curve” of enterprise adoption.

Investors in enterprise AI startups must be prepared for longer-than-expected timelines for revenue growth to materialize.

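Many corporate customers are in the difficult and costly phase of the “productivity J-curve,” in which measured productivity dips while firms invest in complementary changes such as process redesign and training, before the gains appear.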

The startups that will ultimately succeed are those with both the technology and the strategic patience to guide their customers through this slow transformation.
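The J-curve can be made tangible with simple arithmetic on cumulative net benefit. The sketch below is a simplified cash-flow analogue of the productivity J-curve, not a productivity model: given a hypothetical per-quarter series of net benefit (gains minus adoption costs), it reports how deep the cumulative curve sinks, when it bottoms out, and when it first climbs back to break-even. The function name and all numbers are assumptions for illustration.

```python
from itertools import accumulate


def j_curve_summary(net_benefit_per_period):
    """Return (trough_value, trough_period, breakeven_period) for the cumulative
    net-benefit curve. breakeven_period is the first period after the trough at
    which the cumulative total is back at or above zero, or None if it never
    recovers (or never dipped below zero in the first place)."""
    cumulative = list(accumulate(net_benefit_per_period))
    if not cumulative:
        raise ValueError("need at least one period")
    trough_period = min(range(len(cumulative)), key=cumulative.__getitem__)
    trough = cumulative[trough_period]
    if trough >= 0:
        return trough, trough_period, None  # no dip, so no J-curve
    breakeven_period = next(
        (i for i in range(trough_period + 1, len(cumulative)) if cumulative[i] >= 0),
        None,
    )
    return trough, trough_period, breakeven_period


# Hypothetical quarterly net benefit of an enterprise AI rollout (arbitrary units)
print(j_curve_summary([-4, -3, -2, 0, 2, 4, 5, 6]))  # prints: (-9, 2, 6)
```

In this toy series the customer is still underwater after five quarters even though per-quarter results turned positive in the fifth, which is exactly the window in which a vendor's revenue growth can lag investor expectations.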

Recommendations for Policymakers (The Governance)

For governments, the challenge is to foster innovation while proactively managing the profound societal risks and dislocations AI will create.

Foster a dual-prong innovation ecosystem.

Policymakers should support frontier research while ensuring democratic access to AI.

This means funding large-scale public compute infrastructure to prevent the complete corporate capture of cutting-edge AI development.

At the same time, governments should support the open-source community and academic research to ensure powerful AI tools are broadly accessible.

Drive international collaboration on AI safety and governance.

The competitive race dynamic in AI development poses a direct threat to safety. National interests must be balanced with the global imperative to manage shared risks.

Governments should take a leading role in establishing international norms, standards, and binding treaties for responsible AI development.

A cooperative, global solution is the only viable path to mitigating the most severe risks.

Reinvent education for the AI age. A national-level initiative to reform education is urgently needed.

Curricula at all levels must be reoriented for a world where AI performs most routine cognitive tasks.

The focus must shift from memorization to the cultivation of durable human skills: critical thinking, creativity, collaboration, and ethical reasoning.

Proactively address economic dislocation. The task automation driven by AI will create significant economic disruption.

Policymakers must act now to build the social and economic infrastructure needed to manage this transition.

This includes massive investments in robust worker retraining programs and the modernization of social safety nets.

It also requires a serious exploration of new policies, such as Universal Basic Income (UBI), to ensure that the immense productivity gains from AI lead to shared prosperity.