The legal world is on the cusp of its most significant transformation in a century. The force driving this change is not a new law or legal theory, but a technological revolution: the rise of the AI legal agent. These are not just smarter search bars or document templates; they are sophisticated, autonomous systems capable of reasoning, planning, and executing complex legal and compliance tasks.

For legal professionals, from solo practitioners to global law firm partners, understanding this technology is no longer optional. It is necessary both for staying competitive and for upholding the highest standards of professional responsibility.

This comprehensive guide serves as your expert briefing on the world of legal AI agents. We will deconstruct the technology that powers them, navigate the burgeoning market of providers, analyze the profound economic impact on the practice of law, and confront the gauntlet of ethical risks and liabilities. Prepare to meet the agentic advocate, your future digital colleague.

Part I: The Technological Foundations of Legal AI Agents

To effectively leverage—and control—AI agents, one must first understand their inner workings. These systems represent an evolutionary leap from passive tools like chatbots or simple automation bots. They are designed for autonomous, goal-oriented action.

Anatomy of an AI Legal Agent

An AI agent’s architecture is built for independent problem-solving. It consists of several core components working in a continuous cycle:

- Perception Model: The agent’s senses. It ingests data from myriad sources—document management systems, client emails, regulatory databases, and user commands—to understand its operating environment.

- Reasoning Engine: The brain of the operation. Using powerful Large Language Models (LLMs), this component analyzes the perceived information, assesses risks, simulates outcomes, and formulates a strategic plan.

- Action Execution: Where thought becomes action. The agent executes tasks based on its reasoning, whether drafting a contract, sending a notification, or automating a complex workflow.

- Feedback Loop: The mechanism for learning and improvement. The agent monitors the results of its actions, compares them to the desired goals, and adapts its future behavior based on what it learns. Over time, this is what allows it to become more effective.

This architecture enables a dynamic workflow where an agent can take a high-level goal, such as “Ensure this marketing campaign complies with FTC advertising standards,” and autonomously break it down into a series of tasks, gather the necessary information, and execute the plan until the goal is achieved.

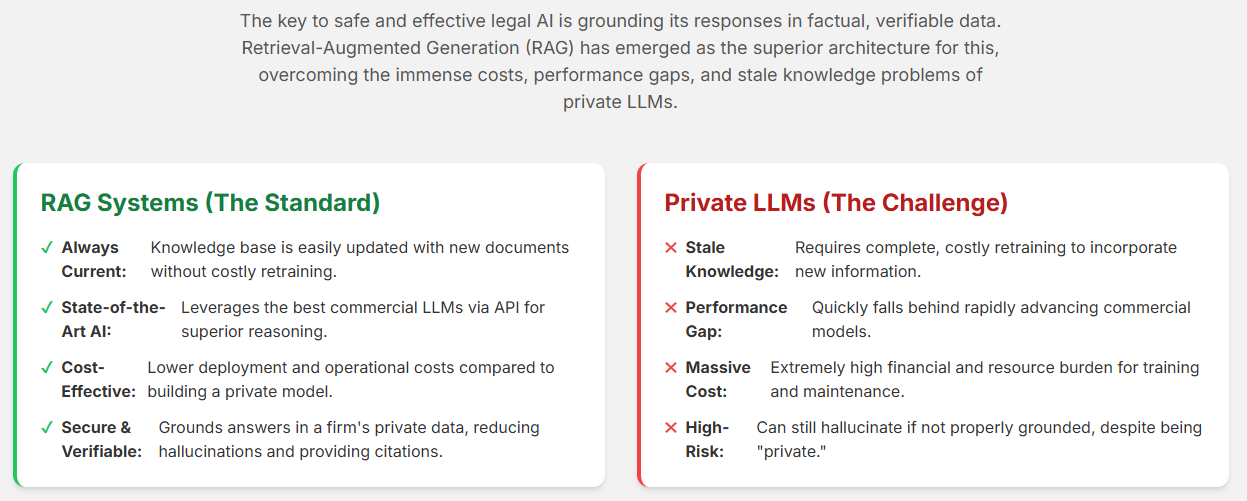
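The perceive, reason, act, and feedback cycle described above can be sketched in a few lines of Python. This is a minimal illustration, not how any commercial product works: the class name, the banned-phrase list, and the placeholder text are all hypothetical, and a simple keyword rule stands in for the LLM-based reasoning engine.

```python
import re
from dataclasses import dataclass, field

PLACEHOLDER = "[PENDING ATTORNEY REVIEW]"  # hypothetical marker text


@dataclass
class ComplianceAgent:
    """Toy agent; a keyword rule stands in for the LLM reasoning engine."""
    goal: str
    banned_phrases: tuple = ("guaranteed results", "risk-free")  # illustrative only
    log: list = field(default_factory=list)

    def perceive(self, document: str) -> str:
        # Perception: normalise the input so later steps see one format.
        return document.lower()

    def reason(self, observation: str) -> list:
        # Reasoning: decide which phrases need attention (an LLM call in practice).
        return [p for p in self.banned_phrases if p in observation]

    def act(self, document: str, issues: list) -> str:
        # Action: replace each flagged phrase with a marker for human review.
        for phrase in issues:
            document = re.sub(re.escape(phrase), PLACEHOLDER, document, flags=re.IGNORECASE)
        return document

    def run(self, document: str, max_steps: int = 5) -> str:
        # Feedback loop: re-check the result after every action and stop
        # once no issues remain or the step budget is exhausted.
        for step in range(max_steps):
            issues = self.reason(self.perceive(document))
            self.log.append((step, issues))
            if not issues:
                break
            document = self.act(document, issues)
        return document


agent = ComplianceAgent(goal="Flag advertising claims that need legal review")
print(agent.run("Our plan offers Guaranteed Results and is risk-free."))
```

The step budget (`max_steps`) matters in any design of this kind: it keeps an agent that never reaches its goal from looping indefinitely.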

The Power of Grounding: Why RAG is Non-Negotiable in Law

The raw power of AI agents comes from LLMs. However, general-purpose LLMs have a critical flaw for legal work: they can “hallucinate,” inventing facts and stating them with full confidence. The industry’s primary mitigation for this threat is Retrieval-Augmented Generation (RAG).

RAG is an architecture that grounds an LLM’s response in a trusted, private data source. Instead of answering from its vast but potentially flawed memory, the system works as follows:

- Index: A firm’s private documents (contracts, policies, case files) are split into passages, converted into numeric embeddings, and stored in a secure “vector database.”

- Retrieve: When a user asks a question, the system searches this private database for the most relevant information.

- Generate: It then feeds that retrieved information to the LLM with a strict instruction: “Based only on the provided text, answer the user’s question.”

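This process grounds the AI’s answers in the firm’s own knowledge and data and makes them verifiable, because each answer can be traced back to the retrieved passages. RAG sharply reduces the risk of hallucination but does not eliminate it: retrieval can surface the wrong passage, and the model can still misread the right one, so human review remains essential. It is nonetheless the single most important technology for mitigating risk. While building a private, custom LLM was once considered an option, the immense cost, the difficulty of keeping it updated, and the performance gap compared to leading models have made RAG the clear choice for security, accuracy, and cost-effectiveness.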
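The index, retrieve, and generate steps can be illustrated with a small, dependency-free sketch. It rests on loud simplifications: a bag-of-words count vector stands in for a real embedding model, a Python dictionary stands in for the vector database, the document IDs and texts are invented, and the final step only builds the grounded prompt rather than calling an LLM.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for an embedding model: a bag-of-words count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents: dict) -> dict:
    # Index: one vector per document (a real system would chunk long documents first).
    return {doc_id: embed(text) for doc_id, text in documents.items()}


def retrieve(index: dict, question: str, k: int = 1) -> list:
    # Retrieve: rank stored documents by similarity to the question.
    q = embed(question)
    return sorted(index, key=lambda doc_id: cosine(q, index[doc_id]), reverse=True)[:k]


def build_prompt(documents: dict, doc_ids: list, question: str) -> str:
    # Generate: assemble the grounded prompt that would be sent to the LLM.
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in doc_ids)
    return (
        "Based only on the provided text, answer the user's question.\n"
        f"Text:\n{context}\n"
        f"Question: {question}"
    )


# Invented example documents.
documents = {
    "nda-2023": "The receiving party must keep confidential information secret for five years.",
    "lease-14": "The tenant shall pay rent on the first day of each month.",
}
index = build_index(documents)
question = "How long must confidential information be kept secret?"
top = retrieve(index, question)
prompt = build_prompt(documents, top, question)
print(prompt)
```

Tagging each retrieved passage with its document ID is what makes the answer checkable: a reviewer can open the cited source and confirm that it actually says what the model claims.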

Core Capabilities and Advanced Applications

AI agents are already transforming daily legal work with a suite of powerful capabilities.

Foundational Use Cases:

- Document Automation: Generating first drafts of contracts, briefs, and emails.

- Advanced Legal Research: Understanding natural language queries to find conceptually similar precedents that keyword searches would miss.

- Contract Lifecycle Management (CLM): Tracking agreements from drafting to renewal, automatically flagging risky clauses against a firm’s playbook.

- Compliance Monitoring: Proactively scanning for regulatory changes and ensuring internal processes adhere to rules like GDPR or HIPAA.

Advanced & Agentic Features:

- Predictive Outcome Analytics: Forecasting litigation outcomes by analyzing historical case data.

- Jurisdictional Logic Handling: Adapting clause recommendations and risk analysis to the specific laws of a given jurisdiction.

- AI-Powered Workflow Orchestration: Managing entire multi-step processes, like client onboarding, from a single command. Platforms like Harvey’s “Workflows” and Aline’s “AI Playbooks” are leading this charge.

- Sentiment Analysis: Gauging the tone of deposition transcripts or witness communications to find subtle inconsistencies.
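To make playbook-based clause flagging and jurisdictional logic concrete, here is a minimal sketch. Every rule, jurisdiction code, and piece of advice in it is a made-up placeholder, not legal guidance, and it does not reflect how Harvey, Aline, or any other vendor implements these features. Real systems would match clauses semantically rather than by substring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    clause: str     # clause type the rule applies to
    forbidden: str  # lowercase phrase the playbook disallows
    advice: str     # fallback position suggested to the reviewer


# Hypothetical playbook: "default" rules apply everywhere,
# other keys add rules for one jurisdiction only.
PLAYBOOK = {
    "default": [
        Rule("liability", "unlimited liability", "Cap liability at fees paid."),
    ],
    "DE": [
        Rule("governing_law", "laws of england", "Propose German governing law."),
    ],
}


def flag_clauses(clauses: dict, jurisdiction: str) -> list:
    """Return (clause type, advice) pairs for every rule the contract violates."""
    rules = PLAYBOOK["default"] + PLAYBOOK.get(jurisdiction, [])
    flags = []
    for rule in rules:
        text = clauses.get(rule.clause, "").lower()
        if rule.forbidden in text:
            flags.append((rule.clause, rule.advice))
    return flags


contract = {
    "liability": "Supplier accepts unlimited liability for all claims.",
    "governing_law": "This agreement is governed by the laws of England.",
}
print(flag_clauses(contract, "DE"))  # default rules plus the DE-specific rule
print(flag_clauses(contract, "US"))  # default rules only
```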

Part II: The Market Landscape: Providers, Platforms, and Projects

The legal AI market is a dynamic ecosystem of polished commercial platforms and innovative open-source projects. Choosing the right path depends entirely on an organization’s resources, risk tolerance, and specific needs.

Commercial Innovators and Their Offerings

A handful of well-funded, domain-specific companies lead the commercial market. Each has a unique focus and value proposition.

| Platform | Primary Target Audience | Core Offering/Differentiator | Key Features | Underlying Technology Emphasis | Integration Points |
| --- | --- | --- | --- | --- | --- |
| Harvey | Elite Law Firms, Global Professional Services | High-end, domain-specific AI for complex, bespoke legal work. | Assistant (Q&A), Vault (Secure Analysis), Knowledge (Research), Customizable Workflows. | Agentic Workflows, Domain-Specific Models. | Microsoft Word, Strategic Alliance with LexisNexis. |
| Aline | In-house Corporate Legal Teams | All-in-one, secure Contract Lifecycle Management (CLM) platform. | Aline Associate (AI Agent), AI Playbooks (Custom Rules), AI Repository, AlineSign. | RAG, AI Playbooks for rule-based guidance. | Salesforce, HubSpot, Google Drive, Box, Microsoft SharePoint. |
| Luminance | Law Firms and Corporate Legal Departments | “Legal-Grade™ AI” trained on 150M+ documents for high accuracy. | Distinct products for Corporate (CLM), Diligence (M&A), and Discovery (eDiscovery). | Supervised & Unsupervised Machine Learning, Agentic AI. | Microsoft Word, Outlook, Salesforce, VDRs. |
| Legora | Top-tier Law Firms | Collaborative AI deeply integrated into lawyer workflows. | Tabular Review (Grid View), Word Add-in, Conversational AI Assistant, Agentic Research. | Collaborative AI, Agentic Queries. | Microsoft Word, Internal/External Databases. |

This landscape shows a split between broad “horizontal” platforms like Harvey, designed for large, multi-practice firms, and specialized “vertical” platforms like Aline, which solve a specific workflow (in-house CLM) with deep precision.

The Open-Source Frontier

In parallel, a community of developers is building open-source legal AI tools like LawGlance and AskLegal.ai. These projects offer unparalleled customization and transparency at no licensing cost. However, they come with significant risks: a lack of dedicated support, potential security vulnerabilities, and unclear data provenance. For now, open-source legal AI is a space for experimentation and niche applications, not a replacement for enterprise-grade, secure commercial tools for handling sensitive client matters.

Part III: The Value Proposition: Efficiency, Cost, and Competitive Advantage

Adopting AI is a strategic business decision with a compelling economic upside. However, it also presents a disruptive challenge to the legal profession’s traditional business model.

A Comparative Analysis of AI vs. Traditional Legal Work

The data on AI performance is striking.

- Speed: In one study, an AI reviewed five NDAs in 26 seconds, a task that took a human lawyer 91 minutes.

- Accuracy: In contract review, AI has demonstrated 94% accuracy compared to 85% for human lawyers.

- Productivity: AI can save lawyers an average of four hours per week, potentially generating $100,000 in new billable time per lawyer annually by freeing them up for higher-value work.

However, this doesn’t mean AI is replacing lawyers. It is augmenting them. AI excels at data processing and pattern recognition, while human lawyers retain the irreplaceable strengths of strategic thinking, emotional intelligence, negotiation, and, most importantly, judgment. AI can predict an outcome, but only a human can exercise the judgment to decide what to do with that prediction.
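The speed and productivity figures quoted above can be unpacked with simple arithmetic. The sketch below uses only the numbers from the text, plus one assumption that is not in the source: a 48-week working year. Change that value and the implied billing rate changes with it.

```python
# Figures quoted in the text above.
ai_seconds = 26                  # AI time to review five NDAs
human_minutes = 91               # human lawyer time for the same task
hours_saved_per_week = 4
new_billable_per_year = 100_000  # USD per lawyer

# Assumption (not from the source): 48 working weeks per year.
working_weeks = 48

speedup = human_minutes * 60 / ai_seconds
hours_saved_per_year = hours_saved_per_week * working_weeks
implied_rate = new_billable_per_year / hours_saved_per_year

print(f"Speed-up on the NDA task: {speedup:.0f}x")
print(f"Hours freed per year: {hours_saved_per_year}")
print(f"Implied billing rate: ${implied_rate:.0f}/hour")
```

Under this assumption, the $100,000 figure only holds if every freed hour is rebilled at roughly $520, which is a useful sanity check when applying the claim to a firm with lower rates.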

The Economic Impact on Legal Practice

For smaller firms, AI is a great equalizer, offering the capabilities of a large team for the cost of a monthly subscription ($50-$225 per user) instead of a new associate’s salary. It allows firms to compete on speed and sophistication.

This very efficiency, however, creates the “AI efficiency paradox.” The legal industry’s dominant business model, the billable hour, rewards spending more time on a task. AI is designed to do the opposite. As AI automates work that once took dozens of hours, firms that rely on time-based billing will see their revenues shrink. This is a powerful catalyst forcing the industry to accelerate its shift toward Alternative Fee Arrangements (AFAs) and value-based billing, where firms are rewarded for the outcome delivered, not the hours logged.
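A short worked example shows why the paradox bites. All inputs are hypothetical (a $400 hourly rate, a $6,000 fixed fee, and a matter that drops from 20 hours to 5 with AI assistance); they are chosen only to illustrate the mechanism, not drawn from any firm’s data.

```python
def matter_economics(hours: float, hourly_rate: float, fixed_fee: float) -> dict:
    """Compare revenue for one matter under hourly and fixed-fee billing."""
    return {
        "hourly_revenue": hours * hourly_rate,
        "fixed_fee_revenue": fixed_fee,
        "fixed_fee_per_hour": fixed_fee / hours,
    }


# Hypothetical inputs chosen only to illustrate the paradox.
HOURLY_RATE = 400.0  # USD per hour
FIXED_FEE = 6_000.0  # USD per matter

before_ai = matter_economics(hours=20, hourly_rate=HOURLY_RATE, fixed_fee=FIXED_FEE)
with_ai = matter_economics(hours=5, hourly_rate=HOURLY_RATE, fixed_fee=FIXED_FEE)

# Hourly billing: revenue falls in step with the hours saved.
print(before_ai["hourly_revenue"], with_ai["hourly_revenue"])
# Fixed fee: revenue per matter is unchanged, so the yield per hour worked rises.
print(before_ai["fixed_fee_per_hour"], with_ai["fixed_fee_per_hour"])
```

With these numbers, the hourly-billed matter loses three quarters of its revenue, while the fixed-fee matter earns the same amount in a quarter of the time.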

Part IV: The Gauntlet of Risks: Liability, Ethics, and Professional Responsibility

The power of AI is matched by a formidable array of risks. Navigating these dangers is the single most important challenge for any lawyer or firm adopting this technology.

The Crisis of Confidence: Inaccuracy and Hallucinations

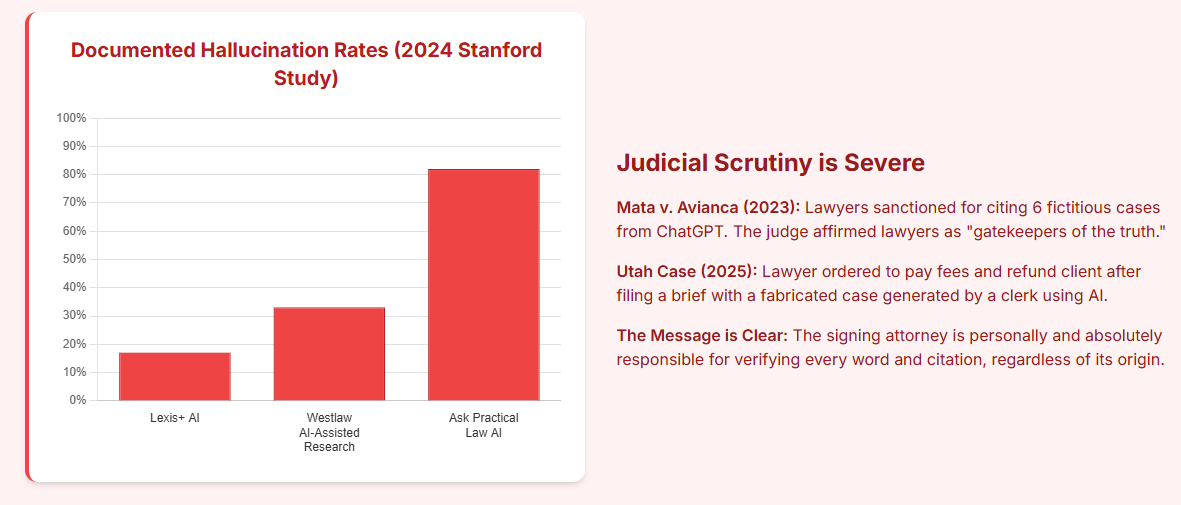

The most publicized danger is AI’s tendency to “hallucinate,” or confidently invent facts, including fake case citations. This is not a random bug. LLMs generate text by predicting plausible wording rather than by looking facts up, so a fluent but fictitious citation is a natural failure mode. Technical limitations make it worse, including the “context window” (how much information an AI can process at once) and the “lost-in-the-middle” problem (where AI struggles to recall information from the middle of long documents).

Courts have shown little tolerance for this. In cases like the infamous Mata v. Avianca, lawyers have faced sanctions, fines, and public embarrassment for filing briefs containing AI-generated falsehoods. The emerging standard is unforgiving: the signing attorney is personally responsible for verifying the accuracy of every citation and assertion in a filing, regardless of how it was generated.
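One way to operationalize that verification duty is a pre-filing gate that blocks any draft containing a citation no human has signed off on. The sketch below is a simplified illustration under stated assumptions: the regular expression recognizes only a handful of reporter abbreviations, the citations in the example are made-up placeholders, and the function names are hypothetical.

```python
import re

# Simplified pattern covering only a few reporter abbreviations (an assumption
# for this sketch; real citation formats are far more varied).
CITATION = re.compile(
    r"\b\d{1,3} (?:U\.S\.|F\.(?:2d|3d|4th)|F\. Supp\.(?: 2d| 3d)?) \d{1,4}\b"
)


def unverified_citations(draft: str, verified: set) -> list:
    """Return citations found in the draft that are not on the verified list."""
    return [c for c in CITATION.findall(draft) if c not in verified]


def ready_to_file(draft: str, verified: set) -> bool:
    # The draft passes only if every detected citation has been checked by a person.
    return not unverified_citations(draft, verified)


# Made-up placeholder citations, not real cases.
draft = "Plaintiff relies on 123 F.3d 456 and 999 F.4th 111."
verified_by_attorney = {"123 F.3d 456"}

print(unverified_citations(draft, verified_by_attorney))
print(ready_to_file(draft, verified_by_attorney))
```

A gate like this only confirms that each detected citation was reviewed. It cannot confirm that the case exists or supports the proposition, and it misses any citation the pattern does not recognize, so it supplements the attorney’s own reading rather than replacing it.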

The Sanctity of Confidence: Data Privacy and Attorney-Client Privilege

A lawyer’s duty of confidentiality (ABA Model Rule 1.6) is paramount. Inputting confidential client information into a public AI tool like ChatGPT is a serious error. Such conversations may not be protected by privilege, and they can create a record that is open to discovery or subpoena and used against a client. Lawyers have a duty to vet the security of any AI vendor and should advise their clients not to discuss legal matters with public AI chatbots.

A Comprehensive Ethical Review

Existing rules of professional conduct apply forcefully to AI. A failure to understand their application is a failure of professional responsibility.

| ABA Model Rule | Core Requirement | Specific AI Implication/Risk | Recommended Mitigation Strategy |
| --- | --- | --- | --- |
| 1.1 Competence | Maintain technological competence. | Lack of understanding of AI limitations leads to flawed work product. | Engage in continuous education on AI. Thoroughly vet AI tools before use. |
| 1.5 Reasonable Fees | Fees must be reasonable. | Billing for time saved by AI constitutes overbilling. | Adopt value-based billing. Only charge for actual time spent verifying AI output. |
| 1.6 Confidentiality | Protect all client information. | Inputting client data into public AI tools can waive privilege. | Use only enterprise-grade, secure AI platforms. Obtain client’s informed consent. |
| 3.3 Candor to Tribunal | Be truthful to the court. | Submitting filings with AI-generated “hallucinated” cases. | Mandate 100% human verification of all AI-generated content before filing. |
| 5.1 & 5.3 Supervision | Supervise subordinate lawyers and staff. | Junior lawyers or staff misusing AI, creating liability for the supervising lawyer. | Establish a clear, firm-wide AI Use Policy and provide mandatory training. |
| UPL | Do not assist in the unauthorized practice of law. | Deploying client-facing AI that provides unsupervised legal advice. | Restrict client-facing AI to general information or administrative tasks. |

Part V: Governance and Future Trajectory

Governing bodies are racing to keep pace with technology, and the market is on a trajectory of explosive growth.

The Regulatory Response: Bar Association Guidance

The American Bar Association (ABA) and state bars in Florida, California, New York, and others have issued guidance. While specifics vary, the core principles are consistent: lawyers bear ultimate responsibility for AI-generated work, human oversight is mandatory, confidentiality is paramount, billing must be transparent, and technological competence is an ongoing duty.

| State | Official Guidance Document | Key Requirement on Confidentiality | Key Requirement on Disclosure to Client/Court | Key Requirement on Billing |
| --- | --- | --- | --- | --- |
| Florida | Ethics Advisory Opinion 24-1 | Obtain client’s informed consent before using third-party AI with confidential data. | Disclosure may be required for informed consent. | Must not result in clearly excessive fees. |
| California | Practical Guidance | Do not input confidential information into AI lacking adequate security protections. | Consider disclosing AI use to clients. | Cannot charge hourly for time saved by AI. |
| New York | Task Force Report on AI | Be mindful of client privacy when using AI engines. | Recommends disclosure in certain contexts. | Fees must remain reasonable. |
| North Carolina | Formal Ethics Opinion 1 | May input client info into secure third-party AI; must exercise independent judgment. | Advance consent needed for substantive tasks. | Must not inaccurately bill for time saved. |
| Pennsylvania | Joint Formal Opinion 2024-200 | Do not input confidential info into insecure AI; must verify AI output. | Educate clients and seek informed consent. | Fees must be reasonable. |

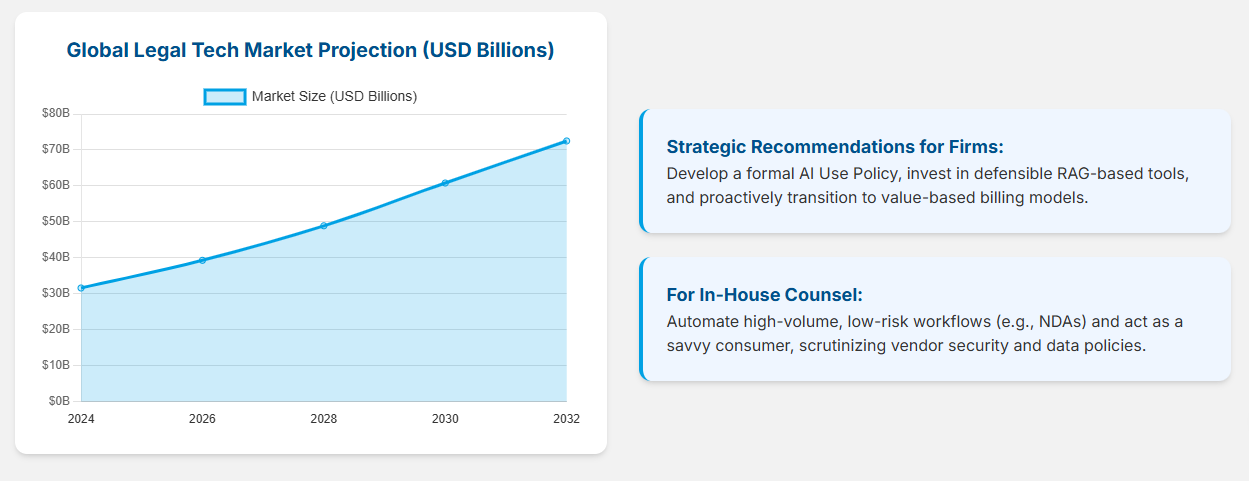

Market Projections and Strategic Outlook

The global legal technology market, valued at over $31 billion in 2024, is projected to more than double to over $70 billion by 2032. This growth is driven by the demand for efficiency and the power of generative AI. The future will see the rise of multi-agent systems—teams of specialized AIs working together—and the deeper integration of AI as the core “operating system” for a law practice.
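The growth rate implied by that projection can be checked with the standard compound annual growth rate (CAGR) formula. The sketch treats the quoted “over $31 billion” and “over $70 billion” as round figures, so the result is an approximation rather than a number from the underlying market report.

```python
# Figures quoted in the text, treated as round numbers (USD billions).
start_value = 31.0
end_value = 70.0
years = 2032 - 2024

# CAGR = (end / start) ** (1 / years) - 1
cagr = (end_value / start_value) ** (1 / years) - 1

print(f"Implied CAGR: {cagr:.1%}")
```

The result is an annual growth rate of a little under 11%, compounded over eight years.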

Concluding Strategic Recommendations

The era of the agentic advocate has arrived. To navigate it successfully, all legal professionals must act with strategic foresight.

- For Law Firms: Immediately develop a formal AI Use Policy. This is a risk management necessity. Mandate 100% human verification, prohibit the use of public AI tools with client data, and prioritize investment in defensible, RAG-based platforms. Begin the strategic shift away from the billable hour toward value-based pricing.

- For In-House Counsel: Target AI for high-volume, low-risk workflows like NDAs and vendor contracts. Implement AI Playbooks to empower business teams while ensuring compliance. Be a savvy consumer and rigorously vet all AI vendor contracts for security, liability, and data rights.

- For All Professionals: The path forward requires a dual commitment: embrace the transformative potential of AI to enhance productivity and client service, but do so with an unwavering dedication to the ethical diligence and professional judgment that will always remain the hallmark of a great lawyer.