The Architect’s Guide to JSON Prompting: Mastering LLMs for Production-Ready AI

The rise of Large Language Models (LLMs) like GPT-4o and Gemini has been nothing short of transformative. Yet, for all their creative power, developers face a critical challenge: their inherent unpredictability. In the world of software engineering, where reliability and consistency are king, the stochastic, sometimes “hallucinatory” nature of generative AI can be a major roadblock. How do you integrate a brilliant but inconsistent collaborator into an automated, mission-critical workflow?

The answer lies in changing the conversation. We must move beyond ambiguous, free-form text and embrace a new paradigm of precise, programmatic control. This is the world of JSON prompting, a technique that transforms an LLM from an unpredictable artist into a dependable system component. By structuring your entire request—instructions, context, and constraints—into a JavaScript Object Notation (JSON) object, you create an unambiguous contract with the model.

This comprehensive guide will serve as your blueprint for mastering this essential skill. We will explore why structured communication is the key to unlocking reliable AI, dive deep into practical implementation patterns, compare how leading platforms handle structured data, and navigate the advanced frontiers of security and efficiency. By the end, you’ll understand how to architect your instructions and transition AI from a fascinating novelty into an indispensable part of your technology stack.

Section 1: The Foundations of Structured Communication

To build reliable systems, we must start with a solid foundation. This means understanding both the “what” and the “why” of JSON prompting. We’ll begin with a quick refresher on JSON itself before defining how this ubiquitous data format is being repurposed to programmatically instruct LLMs.

A Primer on JSON (JavaScript Object Notation)

At its core, JSON is a lightweight, text-based format for data interchange. Born from JavaScript but now language-independent, it’s the lingua franca of modern APIs and web services. Its power lies in its simple, human-readable structure, which is based on two primary concepts:

- Objects: A collection of key-value pairs enclosed in curly braces

{}. Keys are strings in double quotes, followed by a colon, and then the value. For example:"name": "John Doe". - Arrays: An ordered list of values enclosed in square brackets

[]. For example:["History", "Algorithms"].

A value in JSON can be a string, number, boolean (true or false), another object, an array, or null. This simple set of rules allows for the representation of complex, nested data structures.

Here is a simple JSON object representing a user profile:

{

"name": "John Doe",

"age": 30,

"isStudent": false,

"courses": [

{

"title": "History of AI",

"credits": 3

},

{

"title": "Advanced Algorithms",

"credits": 4

}

],

"address": null

}

This clean, predictable format is why JSON dominates data exchange on the web. It’s also why LLMs, trained on vast quantities of web data and code, have a native affinity for its structure.

Defining JSON Prompting for LLMs

JSON prompting is the practice of structuring your entire request to an LLM as a well-formed JSON object. Instead of writing a conversational paragraph, you provide a structured data object where every piece of the request is explicitly labeled.

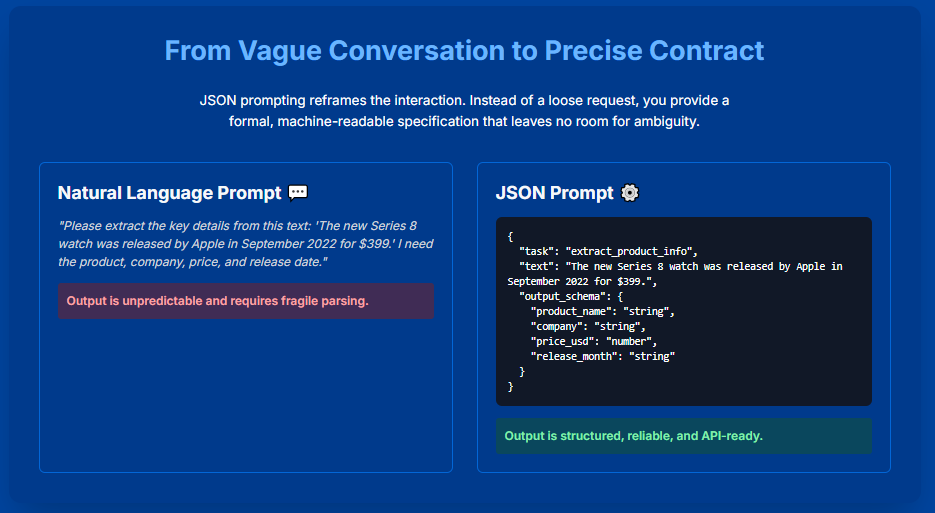

Let’s compare the two approaches.

Traditional Natural Language Prompt:

“Write a short, scientific summary of a study showing that atorvastatin reduces LDL cholesterol by 30%. Include the product name, the study reference (Smith et al., JAMA Cardiol, 2024), and keep it suitable for UK healthcare professionals.”

This prompt is functional but fragile. The model has to infer the distinct pieces of information from the prose.

Equivalent JSON Prompt:

{

"task": "generate_claim_support_summary",

"context": {

"claim": "Reduces LDL cholesterol by 30%",

"study_reference": "Smith et al., JAMA Cardiol, 2024",

"product": "atorvastatin",

"audience": "Healthcare professionals",

"tone": "Scientific",

"jurisdiction": "UK"

},

"output_format": {

"type": "paragraph",

"length": "short"

}

}

The difference is profound. The JSON version is an explicit blueprint. The task is clearly defined, the context is neatly organized, and the desired output is specified. This trade-off—less conversational warmth for a massive gain in precision—is the key to building reliable, automated systems with AI. It treats the LLM less like a magic box and more like a dependable API endpoint.

Section 2: Why Structure is a First-Class Citizen for LLMs

Adopting JSON prompting isn’t just a stylistic choice; it’s a strategic one. It works because it aligns perfectly with the needs of software engineering and the very nature of how LLMs are trained.

Engineering Predictability and Reliability

In production software, inconsistency is a bug. An application that relies on an LLM’s output needs that output to be in a predictable, parsable format every single time. Natural language prompts often fail this test, leading to a “parsing nightmare” where developers must write fragile code to handle variations in the model’s response.

By defining the desired output format in the prompt (often via a JSON schema), you create a formal contract with the model. This dramatically reduces ambiguity and compels the model to behave like a reliable API. Studies and real-world applications show this can increase format compliance from a shaky 80% to over 99%, eliminating the need for complex error-handling logic and allowing developers to build automated workflows with confidence.

Aligning with the Model’s “Worldview”

LLMs are not just trained on novels and articles. Their training data includes billions of lines of source code, API documentation, configuration files, and other structured data. JSON, therefore, is not a foreign language to them; its patterns are deeply embedded in their neural pathways.

When you provide a prompt in a structured, code-like format, you activate a more computational and less ambiguous mode of processing in the model. It recognizes a formal specification it has seen countless times before. This allows it to perform a high-fidelity data transformation task, respecting data types, constraints, and required fields with much greater accuracy. You are, in essence, speaking to the model in one of its native tongues.

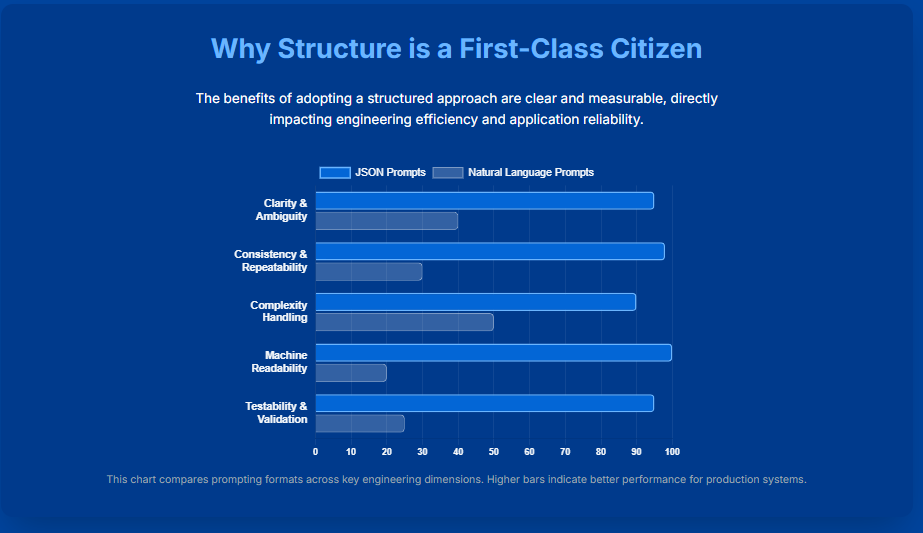

A Comparative Look at Prompting Formats

While JSON is a powerful default, it’s helpful to compare it to other formats to understand its specific strengths and weaknesses.

Aspect |

Natural Language Prompts |

JSON Prompts |

Clarity & Ambiguity |

High potential for ambiguity; instructions are mixed with prose. |

Instructions are explicitly labeled, dramatically reducing ambiguity. |

Consistency |

Low repeatability; small wording changes can cause large output changes. |

Highly repeatable; the same structured input yields predictable responses. |

Machine Integration |

Output is unstructured text, requiring complex and brittle parsing. |

Output is machine-readable by default, ready for direct API integration. |

Testability |

Difficult to create automated tests for vague text-based I/O. |

Easy to validate both prompt and output against a formal schema. |

Creativity |

High flexibility; excels at open-ended, creative tasks. |

The rigid structure may stifle creativity in non-data-oriented tasks. |

While JSON is often the best choice for structured tasks, YAML’s readability makes it great for human-maintained prompt templates, and XML’s explicit tagging can be highly effective for separating different blocks of context within a single prompt. The choice depends on the specific trade-offs between developer ergonomics, performance, and the target LLM’s capabilities.

Section 3: Core Applications and Implementation Patterns

JSON prompting isn’t a single technique but a versatile methodology that can be applied in several powerful patterns. Mastering these patterns is key to building sophisticated AI applications.

Pattern 1: Data Extraction and Classification

This is the most common use case. The goal is to take unstructured text and transform it into a clean, structured JSON object.

- Implementation: You provide the LLM with the source text and a JSON template of the desired output, often with empty or placeholder values. The model’s task is to read the text and populate the template.

- Example (Real Estate Listing):

- Input Text: “Amazing 3 bedroom apartment located at the heart of Manhattan, has one full bathrooms and one toilet room for just 3000 a month.”

- JSON Prompt Structure:

JSON

{ "rental_price": "rental price", "location": "location", "nob": "number of bathrooms" } - Expected LLM Output:

JSON

{"location":"Manhattan","nob":"1","rental_price":"3000"}

This pattern scales from simple entity extraction to complex classification tasks, such as analyzing a medical report to populate a deeply nested JSON object with over 200 distinct data points.

Pattern 2: Structured Content Generation

This pattern inverts the flow. Instead of defining the output format, you use a JSON object as a rich, structured input blueprint to guide content generation.

- Implementation: You create a JSON object that encapsulates all the key components and constraints for the desired content (e.g., an email, a product description). You then ask the LLM to synthesize this data into a final product.

- Example (Personalized Email):

- JSON Input Blueprint:

JSON

{ "recipient_name": "Alicia", "job_title": "Head of Data Science", "company": "HealthAI", "pain_point": "Struggling to scale LLM pilots", "offer": "Custom compliance layer with easy API integration", "tone": "Professional but friendly" } - Instruction: “Write a concise, personalized outreach email using the provided context.”

- JSON Input Blueprint:

This technique is incredibly powerful for generating consistent, personalized content at scale, from marketing copy to AI-generated images where a JSON blueprint can ensure character and style consistency across multiple generations.

Pattern 3: Holistic Model Configuration

This is the most advanced pattern, where the JSON object acts as a complete, self-contained configuration file for the LLM’s entire task. It controls the model’s persona, reasoning process, and behavioral constraints.

- Implementation: The prompt is a hierarchical JSON object with top-level keys like

role,task,context,constraints,examples, andoutput_format. - Example (Support Ticket Classifier):

JSON

{ "role": "You are an experienced customer support ticket classifier...", "task": "Categorize incoming support tickets into Technical Issue, Billing Inquiry, or Feature Request.", "context": "Our company handles customer support for a variety of SaaS tools...", "examples": [ {"input": "I can't log in.", "output": "Technical Issue"}, {"input": "My invoice is wrong.", "output": "Billing Inquiry"} ], "constraints": "Only output the ticket category. If unsure, default to 'Technical Issue'.", "output_format": {"type": "string", "enum": ["Technical Issue", "Billing Inquiry", "Feature Request"]} }

This holistic approach gives the model a specialist persona, a clear objective, relevant background, examples to learn from, and strict rules to follow, significantly boosting reliability and accuracy for complex tasks.

Section 4: Navigating the Ecosystem and Advanced Techniques

While the principles are universal, implementation details differ across major platforms like OpenAI, Google, and Anthropic. Furthermore, building production-grade systems requires advanced techniques for schema enforcement and error handling.

Platform-Specific Implementations

- OpenAI (GPT-4o+): Offers guaranteed schema adherence via

response_format: { "type": "json_schema", ... }. This is a game-changer, as the API guarantees the output will not only be valid JSON but will also conform to a provided JSON Schema. It’s the most direct approach. - Google (Gemini): Provides a similar feature with its

responseSchemaconfiguration. By defining a schema, you constrain the model’s generation, forcing it to return JSON that validates against your rules. - Anthropic (Claude): Achieves structured output through its powerful Tool Use framework. You define a “tool” with a JSON Schema for its inputs and then force the model to “call” that tool. The resulting JSON object containing the tool’s arguments is guaranteed to match the schema. This agentic approach is more general-purpose but equally reliable.

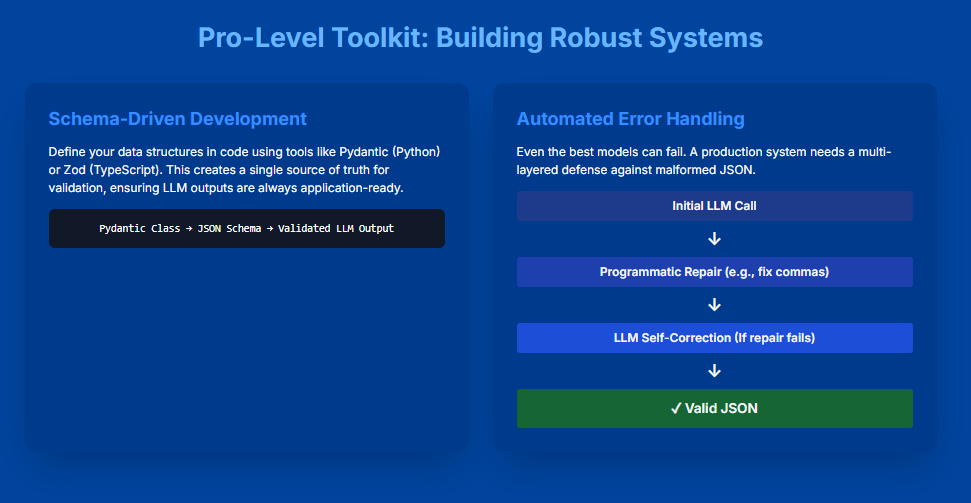

Schema-Driven Development and Error Handling

For production systems, you must plan for failure.

- Schema-Driven Development: Use data validation libraries like Pydantic (Python) or Zod (TypeScript) to define your schemas in code. These libraries can automatically generate the JSON Schema for the API call and then parse and validate the model’s response at runtime, ensuring end-to-end type safety.

- Programmatic Repair: Use libraries like

json-repairto automatically fix common syntax errors (e.g., trailing commas, missing quotes) without needing another expensive LLM call. - LLM Self-Correction: If programmatic repair fails, feed the malformed JSON back to the LLM with a new prompt: “Fix the following invalid JSON.” The model is often surprisingly good at correcting its own mistakes.

- Retry Loops: The simplest strategy is to wrap the API call in a loop. If parsing fails, just try the original prompt again. The stochastic nature of LLMs means a second attempt might succeed.

Conclusion: From Ambiguous Dialogue to Architectural Design

The shift from conversational prompts to structured JSON inputs represents a crucial maturation in applied AI. It’s the moment we stop “talking” to models and start programming them. By embracing the principles of structured data, schema enforcement, and robust error handling, we transform LLMs from unpredictable novelties into reliable, scalable, and indispensable components of modern software architecture.

This discipline is no longer optional; it is essential. Mastering the architecture of your instructions is the definitive skill for any developer looking to build the next generation of AI-powered applications. The future of AI is predictable, reliable, and built on a foundation of well-structured code—and that starts with your prompt.