Part I: The New Automation Paradigm

The enterprise landscape is undergoing a profound transformation, driven by a fundamental shift in how organizations approach operational efficiency and value creation. The era of simple task automation is giving way to a new paradigm of intelligent automation, where systems not only execute commands but also learn, adapt, and make decisions. This evolution represents more than an incremental upgrade; it is a strategic re-evaluation of the relationship between human capital, business processes, and technology. Understanding this shift is the first step for any leader aiming to harness the full potential of artificial intelligence (AI) to build a more resilient, efficient, and innovative enterprise.

Section 1: From Programmed Rules to Intelligent Decisions

The term “automation” has long been associated with the mechanization of repetitive, manual labor. In the digital age, this concept was embodied by traditional automation technologies that execute predefined, rule-based tasks. However, the integration of artificial intelligence has created a new class of automation that is fundamentally different in its capabilities, scope, and strategic impact. Distinguishing between these two paradigms is critical for making informed investment and implementation decisions.

Defining the Spectrum of Automation

Automation exists on a spectrum of intelligence, from rigid, pre-programmed systems to dynamic, self-learning ones. At one end of this spectrum lies traditional automation, which has been a cornerstone of industrial and business process optimization for decades.

Traditional Automation (Rule-Based): This form of automation relies on specialized technology and software to complete specific, repetitive tasks that remain unchanged over time. These systems operate on a foundation of explicit, predefined rules and instructions. A prime example is Robotic Process Automation (RPA), a software-based approach that automates digital tasks within an organization’s existing applications. RPA bots excel at high-volume, predictable activities such as filling out forms, extracting data from structured documents, or recording transactions. The primary benefits of traditional automation include increased productivity by offloading mundane work, creating more efficient linear workflows, improving accuracy for repetitive functions, and decreasing operational costs. However, its core limitation is its rigidity; it is designed to perform the exact same task, in the exact same way, every time.

AI-Powered Automation (Intelligent Automation): This represents a significant evolution, moving beyond mere execution to incorporate intelligence. AI automation, also known as intelligent automation, involves layering artificial intelligence capabilities on top of existing automation technologies like RPA. The defining characteristic of this paradigm is the system’s ability to learn, adapt, and make decisions without constant human intervention. Unlike its traditional counterpart, AI-powered automation can analyze context, recognize patterns, and even predict future outcomes. It is intentionally designed to handle complexity and variability, allowing it to manage dynamic and interpretive tasks that were once the exclusive domain of human workers. This capability to dynamically respond to new information is what separates it from rule-based systems.

Comparative Analysis: A Fundamental Shift in Capability

The distinction between traditional and AI-powered automation becomes clearer when examining their core capabilities side-by-side. The differences are not merely incremental; they represent a fundamental shift in how systems interact with data, make decisions, and evolve over time.

- Decision-Making: Traditional automation executes tasks based on a static set of “if-then” rules. It is not capable of autonomous decision-making. An RPA bot processing insurance claims, for example, will follow a rigid script. AI automation, in contrast, adds a layer of contextual awareness and intelligent decision-making. It can analyze real-time data and spot patterns to make more accurate predictions and choices. For instance, an AI-powered inventory management system does not simply reorder stock when levels dip below a fixed threshold. Instead, it might factor in historical sales data, seasonal trends, upcoming holidays, and even local weather forecasts to dynamically adjust inventory levels and pricing, optimizing for both availability and cost.

- Learning and Adaptability: This is arguably the most significant differentiator. Traditional automation systems operate on fixed rules and require manual updates and reprogramming to accommodate any changes in a process. AI automation, conversely, leverages machine learning and deep learning to adapt to new conditions automatically. It continuously learns from data and improves its performance over time without explicit human programming. Consider an invoice processing system. A traditional, template-based RPA solution can only handle invoices that match its pre-set formats. An AI-powered solution, however, can learn to process a wide variety of invoice layouts, identify anomalies, and even predict potential errors, all while becoming more accurate with each new invoice it processes. This inherent capacity for self-improvement makes AI automation far more resilient and scalable.

- Data Handling: Traditional automation is largely confined to working with structured data—information neatly organized in rows and columns, such as in a spreadsheet or database. This is a major limitation, as a vast majority of enterprise data is unstructured, including emails, customer feedback, social media comments, images, and handwritten notes. AI automation, powered by technologies like Natural Language Processing (NLP) and computer vision, excels at interpreting and acting upon this unstructured data. For example, an AI-powered customer support system can use NLP to understand the intent and sentiment behind a customer’s email, offering a personalized and relevant response, whereas a traditional system might only be able to route the query based on simple keywords.

- The Rise of Agentic AI: The latest evolution in this space is Agentic AI. An AI agent is a program that can autonomously plan and perform a sequence of tasks to achieve a specified goal. Unlike a traditional automation tool that follows a predefined, linear workflow, an AI agent is given a goal (e.g., “book a trip to New York for next week within a $2,000 budget”) and a set of tools (e.g., access to flight and hotel booking websites, a calendar API). The agent then autonomously designs and executes the steps needed to accomplish this goal, dynamically changing its approach based on the situation. This capability makes agentic AI particularly well-suited for adapting to unpredictable and changing scenarios in real-time, opening up automation possibilities for complex domains like personalized mental health guidance, dynamic market trend analysis, or simulating job interviews.
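The inventory example under Decision-Making can be made concrete with a minimal sketch. The function names, the numbers, and the simple "recent average times seasonal factor" forecast are all illustrative assumptions; a production system would substitute a trained demand model for the forecast line.

```python
from statistics import mean


def rule_based_reorder(stock: int, threshold: int = 100) -> bool:
    """Traditional automation: one static if-then rule."""
    return stock < threshold


def adaptive_reorder(stock: int, recent_daily_sales: list[float],
                     seasonal_factor: float, lead_time_days: int,
                     safety_stock: int = 20) -> bool:
    """Data-driven variant: the reorder point moves with expected demand.

    The forecast is a recent average scaled by a seasonal factor,
    standing in for a learned model.
    """
    expected_daily_demand = mean(recent_daily_sales) * seasonal_factor
    reorder_point = expected_daily_demand * lead_time_days + safety_stock
    return stock < reorder_point


stock = 150
sales = [18.0, 22.0, 20.0]  # hypothetical units sold per day

# The fixed rule sees 150 >= 100 and does nothing.
print(rule_based_reorder(stock))
# With a holiday uplift of 1.5x, the reorder point rises to 170, so a reorder is triggered.
print(adaptive_reorder(stock, sales, seasonal_factor=1.5, lead_time_days=5))
```

The two functions receive the same stock level but reach different conclusions, because only the second one takes demand signals into account.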

The following table provides a concise, executive-level summary of these fundamental differences, clarifying the distinct capabilities and strategic value of each automation paradigm. This comparison is essential for leaders to move the conversation from “should we automate?” to “what kind of automation is required to solve this specific business problem?” and to justify investments accordingly.

| Dimension | Traditional Automation (e.g., RPA) | AI-Powered Automation (Intelligent Automation) |
|---|---|---|
| Core Function | Execute predefined, static scripts. | Learn from data, adapt to change, and make decisions. |
| Decision-Making | Follows explicit, hard-coded rules. Incapable of independent judgment. | Contextual, dynamic, and predictive. Can make autonomous decisions based on patterns and real-time data. |
| Data Handling | Limited to structured data (e.g., spreadsheets, databases). | Processes structured, semi-structured, and unstructured data (e.g., emails, images, text, voice). |
| Adaptability | Requires manual reprogramming by developers to handle process changes. | Self-improves over time through machine learning, adapting to new data and evolving requirements. |
| Exception Handling | Fails or requires human intervention when encountering deviations from the script. | Learns from exceptions and can dynamically adjust workflows to handle them. |
| Typical Use Cases | Data entry, form filling, receipt generation, transaction recording. | Fraud detection, customer intent analysis, supply chain optimization, medical diagnosis support, generative design. |
| Strategic Value | Task-level efficiency and cost reduction for mundane work. | Process-level optimization, enhanced decision-making, and business model transformation. |
| Evolution | Static, rule-based bots. | Evolving towards autonomous, goal-driven agents (Agentic AI). |

Table 1: Comparative Analysis of Traditional vs. AI-Powered Automation.

Section 2: The Technology Stack of Intelligent Automation

Intelligent automation is not a single technology but an ecosystem of interconnected capabilities. At its heart are several core AI disciplines that work in concert to provide the “intelligence” layer. Understanding this technology stack is essential for designing robust, effective, and scalable automation solutions. The stack ranges from foundational learning algorithms to advanced generative models that are pushing the boundaries of what is possible.

Machine Learning (ML) and Deep Learning: The Engine of Intelligence

Machine learning is the foundational component of modern AI and the primary engine driving intelligent automation. It is a subset of AI that leverages algorithms and statistical models to enable systems to learn from and adapt to data without being explicitly programmed. Instead of being told what to do, an ML model is trained on data, from which it identifies patterns, makes predictions, and optimizes its decision-making process. Deep Learning is a more advanced subset of ML that uses neural networks with many layers (hence “deep”) to analyze highly complex, non-linear patterns in vast datasets. It is the technology behind major breakthroughs in areas like image recognition and natural language understanding.

The primary methods of machine learning used in automation include:

- Supervised Learning: In this approach, the model is trained on a labeled dataset, where each input is paired with a correct output label. The algorithm learns to map inputs to outputs. This is highly effective for classification and prediction tasks. For example, an email system can be trained on a dataset of emails labeled as “spam” or “not spam” to learn how to automatically filter incoming messages.

- Unsupervised Learning: Here, the model is given unlabeled data and must find the intrinsic structure and hidden patterns on its own. This is used for tasks like clustering, where the goal is to group similar data points together. A marketing automation tool might use unsupervised learning to automatically segment a customer base into distinct personas based on their purchasing behavior, without any prior labels.

- Reinforcement Learning: This method involves an AI “agent” that learns to make decisions by interacting with an environment. It learns through a process of trial and error, receiving “rewards” for good actions and “penalties” for bad ones, with the goal of maximizing its cumulative reward over time. This is particularly useful for optimizing dynamic systems, such as an algorithmic trading bot learning the most profitable trading strategy or a robot learning the most efficient path through a warehouse.
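As an illustration of the supervised learning case, the spam example above can be sketched with a small Naive Bayes classifier written in plain Python. The four training emails and the `train` and `predict` names are invented for this sketch; Naive Bayes is one common choice for text classification, not necessarily what a given email product uses.

```python
import math
from collections import Counter


def train(examples: list[tuple[str, str]]):
    """Count words per label from (text, label) pairs."""
    word_counts = {"spam": Counter(), "not spam": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts


def predict(model, text: str) -> str:
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts = model
    vocab = set(word_counts["spam"]) | set(word_counts["not spam"])
    total = sum(label_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        # Log prior plus log likelihood of each word, with add-one smoothing
        score = math.log(label_counts[label] / total)
        denom = sum(counts.values()) + len(vocab)
        for word in text.lower().split():
            score += math.log((counts[word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical labeled dataset: each input is paired with its correct output
labeled_emails = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "not spam"),
    ("please review the quarterly report", "not spam"),
]
model = train(labeled_emails)
print(predict(model, "claim your free prize"))
print(predict(model, "monday meeting report"))
```

The model is never told which words indicate spam; it infers that from the labels, which is the defining property of supervised learning.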

Natural Language Processing (NLP): The Bridge to Human Communication

Natural Language Processing equips AI systems with the ability to understand, interpret, generate, and interact with human language, both written and spoken. Given that a vast amount of business information is locked in unstructured text, NLP is a critical technology for unlocking automation opportunities. It serves as the bridge between human communication and machine execution.

Key applications of NLP in automation include:

- Intelligent Chatbots and Virtual Assistants: Tools like Siri and Alexa, as well as enterprise-grade chatbots, use NLP to understand user commands and questions, providing coherent and contextually relevant responses. This automates a wide range of customer service and internal support tasks.

- Sentiment Analysis: NLP algorithms can analyze text from customer reviews, social media posts, or support tickets to determine the underlying sentiment (positive, negative, neutral). This allows companies to automate the process of monitoring brand health and identifying customer satisfaction issues at scale.

- Document Processing and Summarization: NLP can automatically read and understand the content of long documents, such as legal contracts or research papers, and extract key information or generate concise summaries. This dramatically speeds up information retrieval and analysis workflows.

Computer Vision: The Eyes of the Machine

Computer vision is the field of AI that enables machines to perceive, recognize, and interpret visual information from the world, including images and videos. It essentially gives “eyes” to automated systems, allowing them to interact with and understand the physical and digital visual environment.

Applications in automation are widespread:

- Quality Control in Manufacturing: Computer vision systems can be installed on assembly lines to automatically inspect products for defects, such as cracks, misalignments, or cosmetic flaws, often faster and more consistently than manual inspection.

- Medical Image Analysis: In healthcare, computer vision algorithms assist radiologists by analyzing medical images like X-rays, CT scans, and MRIs to help detect early signs of diseases such as cancer or pneumonia.

- Automated Data Extraction: Computer vision, often combined with Optical Character Recognition (OCR), can “read” scanned documents like invoices or receipts, automatically extracting relevant data fields (e.g., invoice number, amount, date) and inputting them into financial systems, thus automating a tedious data entry process.

- Security and Surveillance: Video analysis is used to monitor security footage, automatically detecting anomalies, identifying unauthorized individuals, or tracking objects of interest.

Generative AI and Large Language Models (LLMs): The Creative Force

The most recent and perhaps most transformative addition to the automation technology stack is Generative AI. This represents a paradigm shift where AI moves from simply analyzing or classifying existing data to creating entirely new, realistic, and coherent artifacts, including text, software code, images, music, and product designs. This creative capability is primarily powered by foundation models, which are massive AI models trained on broad, unlabeled datasets that can be adapted for a wide range of tasks.

At the heart of much of today’s generative AI are Large Language Models (LLMs), such as OpenAI’s GPT series. These are deep learning models with billions of parameters, trained on vast swaths of the internet, giving them an unprecedented ability to understand and generate human-like text and code.

The role of Generative AI and LLMs in automation design is profound:

- Advanced Natural Language Interfaces: LLMs enable the creation of highly intuitive interfaces where users can command complex automation workflows using conversational, natural language. Instead of clicking through menus, a user could simply state, “Generate a quarterly sales report for the European market and email it to the executive team,” and an LLM-powered agent could parse this request and execute the necessary actions.

- Automated Code and Test Generation: LLMs can generate high-quality code snippets, entire functions, and even test scripts based on natural language descriptions. This dramatically accelerates the software development lifecycle, reducing the time and effort required to build and test automation solutions.

- Hyper-Personalized Content Automation: Generative AI can create personalized marketing copy, customer support responses, and product recommendations at a scale and level of detail previously unimaginable, tailoring each interaction to the individual user’s history and context.

- System Design Considerations: Building a production-grade application with LLMs requires more than just clever prompt engineering. A robust architecture is necessary, often involving Retrieval-Augmented Generation (RAG), where the LLM is provided with relevant, factual information from a private knowledge base (like a vector database) to ground its responses and reduce the risk of “hallucinations” or factual errors. The full system design includes components for user input processing, an inference pipeline for model execution, scalable serving infrastructure to handle requests, and continuous monitoring and logging to evaluate performance and enable retraining.
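The retrieval step of RAG described above can be sketched in a few lines. This is a toy version under stated assumptions: the knowledge base is a hard-coded list rather than a vector database, `embed` uses word counts in place of a real embedding model, and no LLM is called; the sketch stops at building the grounded prompt. All names and documents are hypothetical.

```python
import math
from collections import Counter

# Hypothetical private knowledge base
KNOWLEDGE_BASE = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available by email on weekdays.",
]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts, standing in for an embedding model."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str) -> str:
    """Ground the model by placing retrieved facts ahead of the question."""
    context = "\n".join(retrieve(question))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


print(build_prompt("How long do refunds take?"))
```

In a production pipeline, the string returned by `build_prompt` would be passed to the model, and the retrieval and generation steps would each be logged for monitoring.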

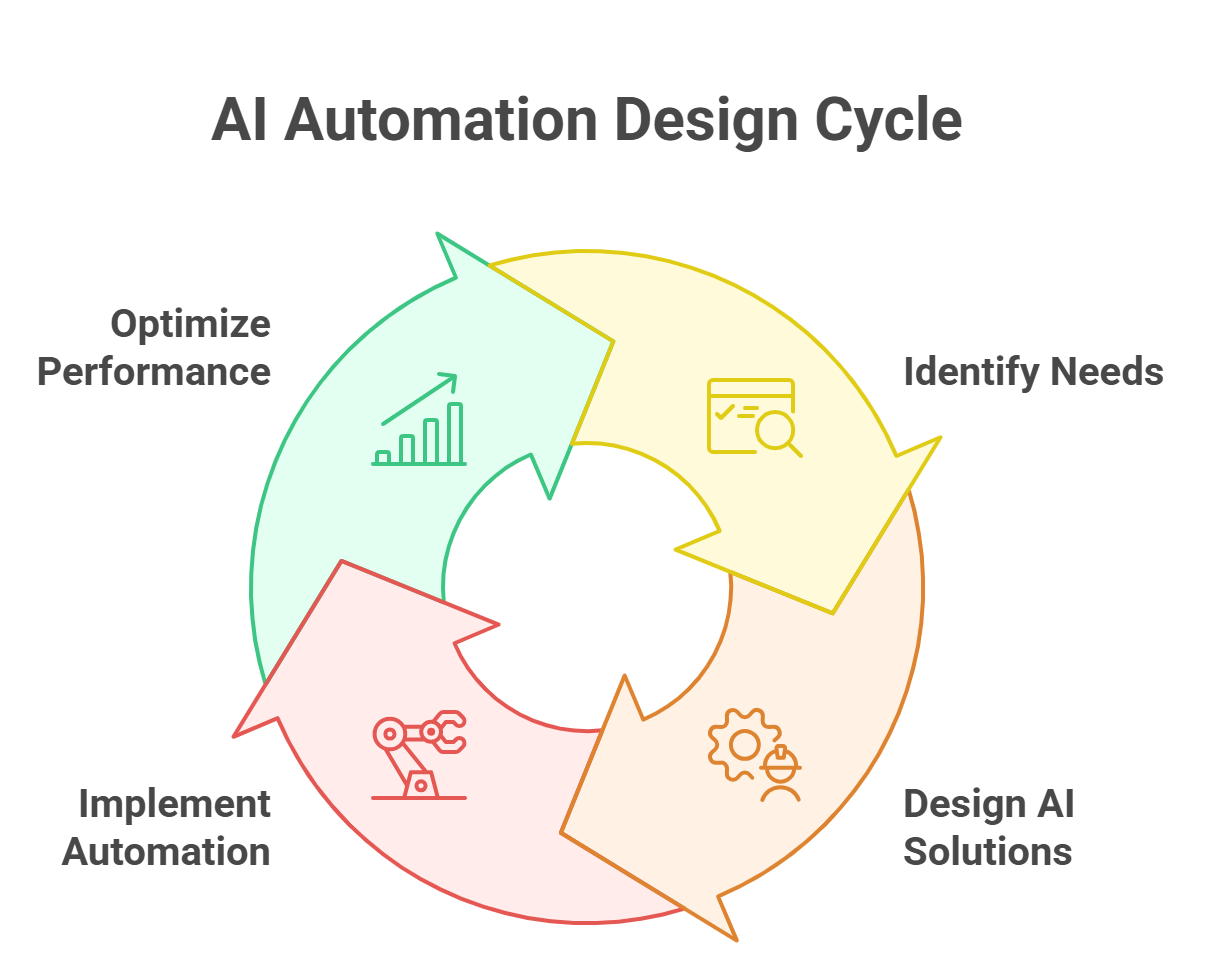

Part II: A Strategic Framework for Designing AI Automations

Successfully implementing AI automation is less a matter of technical prowess and more a function of strategic discipline. Deploying AI without a coherent framework often leads to disconnected projects, squandered resources, and a failure to capture meaningful business value. A robust strategic framework provides the necessary structure to guide an organization’s AI journey, ensuring that every initiative is purposeful, well-governed, high-impact, and methodically executed. This part outlines a comprehensive, four-phase framework designed for leaders to navigate the complexities of AI automation, moving from high-level vision to a concrete implementation roadmap.

Section 3: Phase 1 – Aligning AI with Strategic Vision

The foundational phase of any successful AI automation strategy is to ensure that technological ambition is firmly tethered to core business purpose. Without this alignment, organizations risk falling into the trap of pursuing “AI for AI’s sake,” resulting in technically impressive but commercially irrelevant projects. This initial phase is not about selecting tools or algorithms; it is about asking fundamental strategic questions from the top down.

Moving Beyond “AI for AI’s Sake”

The first and most critical step is to define a clear and compelling purpose for leveraging AI that directly supports the organization’s overarching mission, vision, and value proposition. This requires executive leadership to move beyond the hype and articulate how AI will serve as a “mission multiplier.” The process should begin with a workshop or strategic session focused on foundational questions:

- What is our core mission, and how can AI amplify our ability to deliver on it?

- What are the most critical needs of our customers, and how can AI help us meet them more effectively or in entirely new ways?

- If we could free up a significant amount of every employee’s time (e.g., five hours per week), what strategic initiatives could we pursue that are currently out of reach?

Answering these questions ensures that the AI strategy is not an isolated IT project but an integrated component of the broader business strategy, designed to create tangible value for the organization and its stakeholders.

Defining Clear, Measurable Objectives

Vague aspirations like “improving efficiency” are insufficient. To secure and maintain leadership buy-in and to accurately measure success, AI objectives must be specific, measurable, achievable, relevant, and time-bound (SMART). Examples of well-defined objectives include “reduce invoice processing time by 30% within six months” or “increase customer retention by 20% over the next fiscal year by implementing a personalized recommendation engine”. These clear targets provide a benchmark against which the return on investment (ROI) of an AI initiative can be objectively evaluated, which is crucial for justifying current and future resource allocation.

Conducting Feasibility Studies

Once a strategic vision and clear objectives have been established, a preliminary feasibility study is necessary before committing significant resources. This assessment serves as a reality check, evaluating the viability of the proposed AI initiative from multiple perspectives. Key areas to evaluate include:

- Technical Feasibility: Can the problem be effectively solved with current AI technology?

- Data Availability and Quality: Do we have access to the necessary volume and quality of data to train a reliable model?

- Cost and Resources: What is the estimated budget for tools, infrastructure, and talent? Will we need to hire external expertise or partner with an AI development company?

- Expertise: Do we have the in-house skills to build, deploy, and maintain this solution?

This initial diligence helps to de-risk the project, ensuring that the organization pursues opportunities that are not only strategically aligned but also practically achievable.

Section 4: Phase 2 – Establishing Governance and Ethical Guardrails

AI is not just another software tool. Its capacity for learning and autonomous decision-making introduces unique and significant risks related to bias, privacy, security, and accountability. Therefore, establishing a robust governance framework is not an optional compliance exercise but a non-negotiable prerequisite for any responsible AI implementation. An organization that treats AI as an unregulated technology is exposing itself to unmitigated legal, financial, and reputational damage.

Many organizations perceive governance and ethics as a bureaucratic hurdle that stifles innovation. In practice, the opposite tends to hold: a well-defined, proactive governance framework acts as a strategic accelerator. The unique risks associated with AI, such as algorithmic bias, privacy violations, and security vulnerabilities, can lead to project failure, legal penalties, and severe brand damage if left unmanaged. In the absence of clear guidelines, development teams often operate in a state of uncertainty, hesitant to experiment for fear of crossing an unstated ethical or legal line, which paradoxically slows down innovation. A proactive governance framework explicitly defines the “rules of the road,” clarifying what data is permissible to use, which use cases are off-limits, and how risks should be managed. This clarity removes fear and uncertainty, empowering teams to innovate confidently.

Key Pillars of an AI Governance Framework

A comprehensive AI governance framework should be built on several key pillars, creating a blueprint for responsible and ethical AI usage.

- Purpose and Scope Definition: The framework must begin by clearly defining its purpose and scope. This involves cataloging all AI systems currently in use and their functions, identifying all applicable regulations (e.g., GDPR, the EU AI Act), and involving all relevant stakeholders (e.g., legal, compliance, IT, business units) in the conversation. Critically, this step includes conducting a formal risk assessment for every AI tool the organization plans to implement, even seemingly harmless applications like public generative AI services.

- Establishment of Guiding Principles: The organization must define its core principles for responsible AI. These principles act as guardrails for all future AI-related decision-making. Key questions to address include:

  - What specific applications of AI are considered off-limits for our organization?

  - What is our framework for managing AI-related cybersecurity risks?

  - How will we protect sensitive client, employee, and proprietary data when using AI systems?

  - What are our standards for transparency and explainability in AI-driven decisions?

- Accountability and Oversight Structure: Ambiguity in ownership is a significant risk. The framework must establish clear lines of accountability for each stage of the AI lifecycle, from design and data collection to deployment and ongoing monitoring. This often involves the creation of a dedicated AI review board or an ethics committee composed of cross-functional leaders who oversee the implementation of the governance policy and review high-risk projects.

- Bias and Fairness Mitigation: This is one of the most critical ethical challenges. AI models are only as fair as the data they are trained on, and real-world data often contains historical and societal biases. An AI trained on historical hiring data from a male-dominated industry, for example, could inherit and amplify a bias against female candidates. The governance framework must therefore mandate processes to address this risk. This includes auditing training datasets for representation, testing models for performance disparities across different demographic groups, and actively involving people from diverse backgrounds and affected communities throughout the design and testing process.

- Transparency and Explainability Commitment: The framework should codify a commitment to making AI decisions as transparent and understandable as possible. This not only builds trust with users and stakeholders but is also becoming a key requirement in emerging AI regulations.
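The bias pillar's call to test models for performance disparities across demographic groups can be illustrated with a minimal check. The sketch below compares selection rates between groups; the group labels, the decision data, and the 0.8 review threshold are assumptions chosen for illustration, and selection-rate parity is only one of several fairness measures a review board might adopt.

```python
from collections import defaultdict


def selection_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive model decisions per group, from (group, selected) pairs."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical screening decisions: (group, model recommended an interview)
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(decisions)
ratio = disparity_ratio(rates)
print(rates, round(ratio, 2))

REVIEW_THRESHOLD = 0.8  # illustrative internal policy value, not a legal standard
if ratio < REVIEW_THRESHOLD:
    print("Flag model for review by the AI oversight board")
```

Here group B is recommended half as often as group A, so the model would be escalated under the accountability structure described above.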

Rollout and Training

A governance framework is useless if it remains a document on a shelf. The final component is a concrete plan for operationalizing these principles. This involves a comprehensive rollout and training program for all employees, not just the technical teams. The training should cover the organization’s ethical principles, guidelines for responsible AI use, data privacy obligations, and the specific success criteria for AI projects. Creating dedicated channels for communication, such as a Slack channel or regular workshops, allows employees to share learnings, ask questions, and stay informed about approved tools and evolving best practices.

Section 5: Phase 3 – Identifying and Prioritizing High-Impact Opportunities

With a strategic vision and governance framework in place, the organization can move to the creative and analytical process of identifying and prioritizing specific AI automation opportunities. This phase involves a structured approach to brainstorming and vetting potential projects to ensure that resources are focused on initiatives that will deliver the most significant value.

Two Tiers of Opportunity

It is useful to categorize potential AI opportunities into two distinct tiers, which helps to balance short-term wins with long-term transformation.

- Tier 1: Productivity Transformation (Improving the Current): This tier focuses on internal efficiencies and operational improvements. These opportunities are often identified from the “bottom-up,” coming from teams and individuals who are closest to the day-to-day processes. The goal is to automate or augment tasks that are repetitive, manual, high-volume, or prone to human error. Examples include automating data entry from invoices into an accounting system, using an AI chatbot to handle common HR inquiries, or generating standard weekly reports. These projects are typically easier to implement, often leveraging off-the-shelf tools, and can deliver quick, measurable wins that build momentum and support for the broader AI strategy.

- Tier 2: Business Transformation (Creating the New): This tier focuses on “big swings”—opportunities that could fundamentally change how the organization creates and delivers value. These ideas are typically “top-down” and require executive-level strategic thinking. The goal is to envision how AI could reinvent a product, create a new service, or transform the entire business model. This involves thinking about how AI can provide the organization with “superpowers” to achieve things that were previously impossible.

A Structured Brainstorming Approach

To effectively source ideas for both tiers, a structured brainstorming approach is recommended.

- For Productivity Opportunities: Engage teams across the organization with targeted questions:

  - What are the most time-consuming, low-value manual tasks you perform regularly?

  - Looking at our current strategic goals, which ones are being held back by manual processes that AI could accelerate?

  - What commercially available, “off-the-shelf” AI tools (e.g., Microsoft Copilot, ChatGPT for Enterprise, Grammarly) could save your team time right now?

- For Transformation Opportunities: At the executive level, a “Protect, Expand, Transform” framework can guide strategic thinking:

  - Protect: How can we use AI to defend and strengthen our existing value proposition? (e.g., use automation to lower operational costs and pass savings to customers, making our offering more competitive).

  - Expand: How can we use AI to expand the value we offer to our current customers? (e.g., add new AI-powered features to an existing product, or offer a new analytical service based on customer data).

  - Transform: How can AI enable us to completely transform our business model or enter new markets? (e.g., launch a new service for a new customer segment that is only possible with AI, or shift from a product-based model to a data-driven subscription model).

Prioritization Criteria for Strategic Opportunities

A flood of ideas is the desired outcome of brainstorming, but not all ideas are created equal. To move from a long list to a prioritized portfolio, each opportunity should be vetted against a set of strategic criteria. A truly strategic AI opportunity should meet the following tests:

- Solvable with AI: The problem must be technically suited to an AI solution. It should involve patterns, data, or processes that AI can effectively learn from and automate.

- Strategically Important: The initiative must address a critical business need or a significant pain point. It should be essential for the organization to solve this problem or create more time for higher-value work.

- Genuinely Transformative: The solution should offer a fundamentally new or different way of achieving an outcome. It should not be just a marginal improvement but a reinvention of a process or a solution to a previously unsolvable problem.

- High-Impact: The potential return on investment must be significant. Impact can be measured in various ways, including hours saved, costs reduced, revenue generated, errors eliminated, or new capabilities enabled.

Applying these criteria helps to filter out low-value or technically infeasible projects, ensuring that the organization’s resources are focused on the AI automations that will have the greatest strategic impact.
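One way to apply the four tests consistently is a simple weighted scorecard. The sketch below is an assumption about how a team might operationalize them: the 1 to 5 scale, the weights, the minimum "solvable" score, and the example opportunities are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    solvable_with_ai: int         # each criterion scored 1 (low) to 5 (high)
    strategically_important: int
    transformative: int
    impact: int


# Hypothetical weights; each organization would set its own.
WEIGHTS = {
    "solvable_with_ai": 0.2,
    "strategically_important": 0.3,
    "transformative": 0.2,
    "impact": 0.3,
}


def score(opp: Opportunity) -> float:
    """Weighted sum of the four criterion scores."""
    return sum(getattr(opp, field) * weight for field, weight in WEIGHTS.items())


def prioritize(opps: list[Opportunity], min_solvable: int = 3) -> list[Opportunity]:
    """Drop ideas AI cannot realistically solve, then rank the rest by score."""
    viable = [o for o in opps if o.solvable_with_ai >= min_solvable]
    return sorted(viable, key=score, reverse=True)


ideas = [
    Opportunity("Invoice data entry", 5, 4, 2, 4),
    Opportunity("Personalized recommendation engine", 4, 5, 4, 5),
    Opportunity("Fully autonomous strategy planning", 1, 5, 5, 5),
]
for opp in prioritize(ideas):
    print(f"{opp.name}: {score(opp):.1f}")
```

Treating "Solvable with AI" as a gate rather than just another weighted term keeps an attractive but infeasible idea from ranking highly on its other scores.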

Section 6: Phase 4 – Building the Implementation Roadmap

The final phase of the strategic framework involves translating the prioritized opportunities into a concrete, sequenced, and actionable implementation roadmap. This roadmap serves as the master plan for execution, providing clarity on what will be done, in what order, and by whom. It is the crucial bridge between high-level strategy and on-the-ground software development and deployment.

The “Now, Next, Later” Sequencing Framework

A simple yet highly effective method for organizing the roadmap is the “Now, Next, Later” framework. This approach allows for the sequencing of initiatives based on a combination of their priority, complexity, and resource requirements. Opportunities are plotted onto a grid, providing a clear visual representation of the implementation plan over time.

Categorizing Initiatives for Clarity

To effectively place initiatives within the “Now, Next, Later” timeline, it is helpful to first categorize them by their nature and complexity. This adds another layer of organizational clarity to the roadmap.

- Everyday AI: This category includes off-the-shelf AI tools and applications (e.g., enterprise subscriptions to generative AI assistants, AI-powered grammar checkers, or transcription services) that require minimal setup and can be implemented quickly. These are prime candidates for the “Now” column, as they can deliver immediate productivity gains and help build a culture of AI adoption.

- Custom AI: This category encompasses bespoke AI solutions that need to be built or heavily customized for a specific business purpose. They often require significant development effort and deep integration with existing enterprise systems like ERPs or CRMs. These projects typically fall into the “Next” or “Later” columns, depending on their strategic importance and complexity.

- Process or Policy Change (No AI): During the opportunity identification phase, it is common to uncover potential improvements that do not actually require AI. These might be simple process optimizations or policy changes that can save time or reduce friction. These should be captured on the roadmap as valuable, often low-cost, quick wins that can also be placed in the “Now” column.

- Total Transformation: This category is reserved for the large-scale, “big swing” projects that aim to reinvent a core business process or model. Due to their high complexity, significant resource requirements, and long timelines, these initiatives are almost always placed in the “Later” column, often dependent on the learnings and capabilities built from earlier projects.
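The category defaults above can be sketched as a small placement rule. This is a minimal illustration, not a prescribed method: the `Initiative` fields, the 1-5 scoring scales, the cut-off values used for Custom AI, and the sample initiatives are all hypothetical assumptions, and a real roadmap would also weigh resource availability and dependencies.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    EVERYDAY_AI = "Everyday AI"
    CUSTOM_AI = "Custom AI"
    NO_AI_CHANGE = "Process or Policy Change (No AI)"
    TOTAL_TRANSFORMATION = "Total Transformation"


@dataclass
class Initiative:
    name: str
    category: Category
    strategic_priority: int  # hypothetical 1-5 score, 5 = most important
    complexity: int          # hypothetical 1-5 score, 5 = most complex


def place_on_roadmap(item: Initiative) -> str:
    """Return "Now", "Next", or "Later" using the category defaults in the text."""
    if item.category in (Category.EVERYDAY_AI, Category.NO_AI_CHANGE):
        return "Now"
    if item.category is Category.TOTAL_TRANSFORMATION:
        return "Later"
    # Custom AI: illustrative rule only. Important, manageable work goes first.
    if item.strategic_priority >= 4 and item.complexity <= 3:
        return "Next"
    return "Later"


initiatives = [
    Initiative("Meeting transcription service", Category.EVERYDAY_AI, 3, 1),
    Initiative("Simplified approval policy", Category.NO_AI_CHANGE, 3, 1),
    Initiative("CRM lead-scoring model", Category.CUSTOM_AI, 5, 3),
    Initiative("ERP demand forecasting", Category.CUSTOM_AI, 3, 5),
    Initiative("Reinvented claims process", Category.TOTAL_TRANSFORMATION, 5, 5),
]

roadmap = {"Now": [], "Next": [], "Later": []}
for item in initiatives:
    roadmap[place_on_roadmap(item)].append(item.name)

for column, names in roadmap.items():
    print(f"{column}: {', '.join(names)}")
```

Keeping the rule explicit makes the sequencing logic easy to challenge in a planning workshop: anyone who disagrees with a placement can point at the score or threshold that produced it.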

The Critical “Build vs. Buy” Decision

A key strategic decision point within the roadmap is whether to build a custom AI solution in-house or buy a pre-existing one from a vendor. This is not a one-size-fits-all choice and requires careful consideration.

- Buying Off-the-Shelf: This approach offers faster deployment, lower upfront costs, and access to vendor expertise and support. It is often the best choice for “Everyday AI” and for standard business functions where a customized solution provides little competitive advantage.

- Building Custom Solutions: Building a bespoke AI model offers the highest degree of flexibility, control, and potential for creating a unique competitive advantage. According to Gartner, the need for this flexibility is driving a trend toward in-house development, with a prediction that by 2028, 30% of GenAI pilots that move to large-scale production will involve custom builds rather than off-the-shelf software. However, organizations must conduct a sober assessment of the high costs, deep technical skills, and complex integration requirements before committing to building. A custom build is only justified when the AI solution is core to the company’s unique value proposition.

Assigning Ownership and Tracking Success

A roadmap without accountability is merely a wish list. To become an effective management tool, every single initiative on the roadmap must have two critical components assigned to it:

- A Champion/Owner: A specific individual must be assigned clear ownership and responsibility for driving the initiative forward.

- Key Performance Indicators (KPIs): A set of clear, measurable KPIs must be defined to track the progress and, ultimately, the success of the initiative. These KPIs should tie directly back to the objectives defined in Phase 1.

By summarizing the top “Now” opportunities, assigning owners, estimating the required investment, and defining success metrics, the strategic plan is transformed into a deployable and manageable portfolio of AI projects.
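One way to make the accountability rule checkable is to store each roadmap entry as a record and flag any entry that lacks an owner or KPIs. The sketch below is illustrative only: the `KPI` and `RoadmapEntry` types, their fields, and the sample values are hypothetical, and the progress formula (share of the baseline-to-target gap closed so far) is one simple convention among many.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class KPI:
    name: str
    baseline: float  # value before the initiative started
    target: float    # value the initiative aims to reach
    current: float   # latest measured value

    def progress(self) -> float:
        """Fraction of the baseline-to-target gap closed, clamped to [0, 1]."""
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0
        return max(0.0, min(1.0, (self.current - self.baseline) / gap))


@dataclass
class RoadmapEntry:
    initiative: str
    owner: str
    kpis: List[KPI] = field(default_factory=list)

    def accountability_gaps(self) -> List[str]:
        """List what is missing before this entry counts as accountable."""
        gaps = []
        if not self.owner.strip():
            gaps.append("no owner assigned")
        if not self.kpis:
            gaps.append("no KPIs defined")
        return gaps


# Hypothetical entry: cut average handling time from 40 to 10 minutes.
entry = RoadmapEntry(
    "Invoice triage assistant",
    owner="A. Rivera",
    kpis=[KPI("Average handling minutes", baseline=40, target=10, current=25)],
)
orphan = RoadmapEntry("Unowned idea", owner="")

print(entry.accountability_gaps(), entry.kpis[0].progress())
print(orphan.accountability_gaps())
```

Because progress is measured against the gap rather than the raw value, the same calculation works for KPIs that should go down (handling time, error rate) and KPIs that should go up (revenue, satisfaction).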

Part III: Engineering for Trust and Collaboration

The long-term success of any AI automation initiative is not determined by the sophistication of its algorithms alone. Ultimately, value is only realized when humans trust, adopt, and effectively collaborate with these intelligent systems. Therefore, engineering for trust and collaboration must be a central pillar of the design process. This involves a deliberate focus on the user experience (UX) of AI, a commitment to transparency through explainability, and a strategic integration of human oversight. This part delves into the principles and practices required to build AI automations that are not only powerful but also understandable, controllable, and synergistic with their human counterparts.

Section 7: Designing for the Human Experience (UX for AI)

The principles of good user experience design are even more critical when applied to artificial intelligence. When users interact with a system that makes autonomous decisions, issues of trust, control, and understanding come to the forefront. A human-centric approach to AI design is essential for fostering adoption and ensuring that the technology empowers rather than frustrates its users.

Start with the User, Not the Algorithm

The most fundamental principle of UX for AI is that the user’s needs and context should drive the technology choice, not the other way around. The design process should not begin with the question, “What can we do with this cool new algorithm?” but rather, “What is the user’s current workflow, what are their pain points, and how can AI genuinely add value or reduce friction?” In some cases, a thorough analysis of the user’s problem may reveal that a simpler, non-AI solution is more effective, easier to build, and easier for the user to understand. Declining to implement AI for its own sake, or because it is fashionable, is a hallmark of mature, user-centric design.

Fostering Trust Through Transparency and Expectation Setting

Trust is the currency of AI adoption. Users are more likely to trust and rely on a system they understand. Two key practices are essential for building this trust:

- Clearly Identify AI Interactions: Users should always know when they are interacting with an AI versus a human or a simple rule-based system. Design systems like IBM’s Carbon recommend using clear visual indicators or “AI labels” to mark AI-generated content or features. This simple act of transparency prevents confusion and helps manage user expectations.

- Set Realistic Expectations: The term “AI” is often used as a marketing buzzword, leading to wildly inaccurate user expectations. It is crucial to be upfront and clear about what the AI can and cannot do. Communicating the system’s capabilities and, just as importantly, its limitations during the user’s initial interactions is a key best practice. A strategy of under-promising and over-delivering is far more effective for building long-term trust than making “magical” claims that the system cannot fulfill.

Explainable AI (XAI): Opening the Black Box

Many advanced AI models, particularly deep learning networks, operate as “black boxes”—they can produce highly accurate predictions, but their internal decision-making process is opaque even to their creators. This lack of transparency is a major barrier to trust and accountability, especially in high-stakes applications.

Explainable AI (XAI) is a set of processes and methods designed to make an AI’s reasoning understandable to human users.

Key XAI techniques, along with practices for presenting their results, include:

- Feature Importance and Attribution Methods: These techniques identify and highlight which input features had the most significant influence on the AI’s output. For example, a loan application AI might explain its denial by stating, “This decision was most influenced by your credit history and debt-to-income ratio.” This gives the user a clear, actionable reason for the outcome.

- Counterfactual Explanations: These methods explore “what if” scenarios, showing the user what minimal changes to the input would have led to a different outcome. For instance, the loan AI could state, “You would have been approved for this loan if your annual income were $5,000 higher.” This empowers the user by making the decision-making criteria tangible and understandable.

- Progressive Disclosure: Not all users need or want the same level of detail. A best practice is to provide explanations in layers. The interface can offer a simple, high-level explanation upfront (the “What” and “Why”) with the option for users to click and drill down into more technical details (the “How”). This approach caters to both general users who need a quick justification and expert users, like data scientists, who may need to conduct a deep audit.
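The first two techniques can be illustrated with a deliberately simple model. The sketch below assumes a hypothetical linear credit score in which every weight, the bias, the approval threshold, the baseline applicant, and the sample applicant are made-up numbers. For a linear model, each feature's attribution is its weight times its distance from the baseline, and a single-feature counterfactual is the score shortfall divided by that feature's weight. Non-linear models need more involved methods, so treat this as an illustration of the idea rather than a general recipe.

```python
# Hypothetical linear credit-scoring model: score = BIAS + sum(weight * value).
WEIGHTS = {"credit_history_years": 0.05, "debt_to_income": -2.0, "annual_income_k": 0.01}
BIAS = 0.25
APPROVAL_THRESHOLD = 0.5
# Hypothetical "typical approved applicant" used as the attribution reference point.
BASELINE = {"credit_history_years": 10.0, "debt_to_income": 0.30, "annual_income_k": 60.0}


def score(applicant):
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def attributions(applicant):
    """Per-feature contribution relative to the baseline, largest magnitude first."""
    contrib = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    return sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True)


def counterfactual(applicant, feature):
    """Smallest change to one feature that lifts the score to the threshold."""
    shortfall = APPROVAL_THRESHOLD - score(applicant)
    if shortfall <= 0:
        return 0.0  # already at or above the threshold
    return shortfall / WEIGHTS[feature]


applicant = {"credit_history_years": 6.0, "debt_to_income": 0.36, "annual_income_k": 62.0}

print("Approved" if score(applicant) >= APPROVAL_THRESHOLD else "Denied")
for feature, contribution in attributions(applicant):
    print(f"  {feature}: {contribution:+.2f}")

extra_income = counterfactual(applicant, "annual_income_k") * 1000
print(f"Approval would require roughly ${extra_income:,.0f} more annual income.")
```

With these invented numbers the applicant is denied, credit history and debt-to-income rank as the two most influential features, and the income counterfactual works out to roughly $5,000, mirroring the two example statements above.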

Ensuring User Control and Graceful Degradation

A core principle of human-centric AI design is that the technology should augment and amplify human abilities, not render humans as passive spectators. Users must remain in control. This means designing systems that allow for human intervention, providing clear mechanisms to override an AI’s decision, correct its mistakes, or provide feedback to improve its future performance.

Furthermore, AI systems are not infallible. They will encounter situations where they are uncertain or make errors. A well-designed system anticipates this and is built to degrade gracefully. When the AI’s confidence in a prediction is low, it should not present the result with the same certainty as a high-confidence prediction. Instead, it should clearly communicate its level of uncertainty—perhaps through a visual indicator or a change in tone—and provide an easy and obvious pathway to escalate the task to a human for review and final judgment. Designing for failure is as important as designing for success.
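Graceful degradation can be sketched as a presentation rule that changes wording, indicator, and escalation behavior as confidence drops. The function name, the two thresholds (0.90 and 0.60), and the message wording below are hypothetical choices for illustration; suitable values depend on the domain and on how well the model's confidence scores are calibrated.

```python
def present_prediction(label: str, confidence: float,
                       high: float = 0.90, low: float = 0.60) -> dict:
    """Choose wording, a visual indicator, and an escalation flag by confidence tier."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= high:
        return {"message": label,
                "indicator": "high confidence",
                "escalate": False}
    if confidence >= low:
        return {"message": f"Likely {label}. Please verify before relying on this.",
                "indicator": "medium confidence",
                "escalate": False}
    # Low confidence: say so plainly and hand the task to a person.
    return {"message": f"Unsure (best guess: {label}). Sent to a human reviewer.",
            "indicator": "low confidence",
            "escalate": True}


for conf in (0.97, 0.72, 0.41):
    result = present_prediction("Invoice matches purchase order", conf)
    print(f"{conf:.2f} -> {result['indicator']}: {result['message']}")
```

The point of the design is that a low-confidence result never looks the same as a high-confidence one, and the escalation path is decided by the system rather than left for the user to discover.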

Section 8: The Human-in-the-Loop (HITL) Imperative

While the ultimate goal of automation is often to reduce manual effort, the most effective, accurate, and ethical AI systems are rarely fully autonomous. Instead, they are designed as collaborative systems that strategically integrate human intelligence at critical points in the AI lifecycle. This approach, known as Human-in-the-Loop (HITL), recognizes that for the foreseeable future, the combination of human judgment and machine processing power is superior to either one operating in isolation.

What is Human-in-the-Loop?

HITL is a collaborative and iterative approach to machine learning where human experts actively participate in the training, tuning, validation, and operation of AI models. It is a system designed to leverage the complementary strengths of both humans and machines. Machines excel at processing vast datasets and identifying patterns at a scale impossible for humans, while humans provide the nuanced judgment, contextual understanding, ethical reasoning, and real-world knowledge that machines currently lack.

How HITL Works in Practice

The HITL process creates a continuous feedback loop that improves the AI model over time.

- Training and Data Labeling: In the initial phase, human experts provide the “ground truth” data that the model learns from. In a supervised learning context, this involves humans labeling raw data. For example, radiologists might annotate medical images to identify tumors, or fraud analysts might label financial transactions as legitimate or suspicious. The quality of this human-labeled data directly determines the initial accuracy of the AI model.

- Feedback and Fine-Tuning: Once a model is trained, it begins making predictions. In a HITL system, human experts review a sample of these predictions, especially those where the model has low confidence. They correct any errors the model makes. This feedback is then fed back into the system to retrain and fine-tune the model, making it more accurate over time. This iterative process allows the model to learn from its mistakes and adapt to new patterns.

- Exception Handling and Escalation: No AI model is perfect. It will inevitably encounter edge cases, ambiguous inputs, or novel scenarios that were not represented in its training data. A well-designed HITL system is built to recognize these situations. When the model’s confidence in its prediction falls below a certain threshold, it automatically escalates the task to a human expert for a final decision. This ensures that the most difficult or critical decisions are always subject to human oversight, providing an essential safety net.
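The escalation and feedback steps above can be sketched as a small pipeline. Everything named here is an assumption for illustration: `HITLPipeline`, the 0.8 threshold, and `toy_model` (a stand-in that returns a label and a confidence score) are hypothetical, and the retraining step itself is left out. The sketch only shows how low-confidence items are deferred to a person and how the reviewer's label is stored as a new training example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Prediction = Tuple[str, float]  # (label, confidence between 0 and 1)


@dataclass
class HITLPipeline:
    model: Callable[[str], Prediction]
    threshold: float = 0.8  # hypothetical escalation threshold
    review_queue: List[str] = field(default_factory=list)
    labeled_examples: List[Tuple[str, str]] = field(default_factory=list)

    def handle(self, item: str) -> Optional[str]:
        """Return the model's label, or None if the item was escalated to a human."""
        label, confidence = self.model(item)
        if confidence < self.threshold:
            self.review_queue.append(item)
            return None
        return label

    def record_review(self, item: str, human_label: str) -> str:
        """Store the reviewer's decision as ground truth for a later retraining run."""
        self.review_queue.remove(item)
        self.labeled_examples.append((item, human_label))
        return human_label


def toy_model(text: str) -> Prediction:
    # Stand-in for a trained fraud classifier; the rule and scores are invented.
    if "wire transfer" in text:
        return ("suspicious", 0.95)
    return ("legitimate", 0.55)


pipeline = HITLPipeline(model=toy_model)
print(pipeline.handle("large overseas wire transfer"))   # confident: decided by model
print(pipeline.handle("unusual coffee shop purchase"))   # uncertain: escalated
pipeline.record_review("unusual coffee shop purchase", "legitimate")
print(pipeline.labeled_examples)
```

The `labeled_examples` list is where the loop closes: periodically retraining on these corrections is what lets the model improve on exactly the cases it found hardest.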

The Overarching Benefits of HITL

Integrating humans into the loop provides several critical benefits that go beyond simple accuracy improvements.

- Enhanced Accuracy and Reliability: Human oversight and correction directly lead to more accurate and reliable AI models. This is especially crucial in high-stakes domains like healthcare, finance, and autonomous vehicles, where an error can have severe consequences.

- Effective Bias Mitigation: Algorithmic bias is a pervasive challenge. HITL is one of the most powerful tools for mitigating it. By involving a diverse group of human reviewers, organizations can identify and correct biases in the training data or the model’s behavior that the original developers might have overlooked.

- Building Trust and Adaptability: The knowledge that a human expert is overseeing the system’s decisions fosters greater trust among end-users and stakeholders. Furthermore, the continuous feedback loop allows the system to remain flexible and adapt to the complexities and dynamic nature of the real world, making it more robust and resilient over the long term.

The Future of Work: From Replacement to Human-AI Teaming

The HITL concept is evolving into a broader strategic vision for the future of work. The prevailing narrative is shifting away from a simplistic story of AI replacing human workers and toward a more nuanced reality of human-AI collaboration. The future lies in intentionally designing roles and workflows that leverage the complementary strengths of both. In this model, AI handles the data-intensive, repetitive, and analytical tasks, freeing up human workers to focus on activities that require uniquely human skills: strategic thinking, creative problem-solving, empathy, ethical judgment, and complex communication.

This leads to the emergence of Human-Autonomy Teaming (HAT), a paradigm where AI agents are no longer just tools but are treated as collaborative partners. In a HAT model, the human’s role shifts from that of a simple operator to an “orchestrator.” The human orchestrator is responsible for designing the automation systems, training and fine-tuning the AI agents, handling the most complex exceptions, making value-based judgments, and ensuring the entire system operates within ethical and strategic boundaries. This vision redefines job roles, demanding new skills in data interpretation, AI troubleshooting, and ethical oversight, and positions human-AI collaboration as the central driver of future productivity and innovation.

Part IV: Navigating the Implementation Journey

The transition from a strategic framework to a functioning, value-generating AI automation system is fraught with practical challenges. This part provides a pragmatic guide to navigating the implementation journey, grounding the principles of design and collaboration in the context of real-world applications. By examining concrete case studies and anticipating common pitfalls, organizations can better prepare for the complexities of deploying AI at scale and increase their probability of success.