Section 1: The Paradigm Shift in Automated Support: From Scripted Responses to Conversational Intelligence

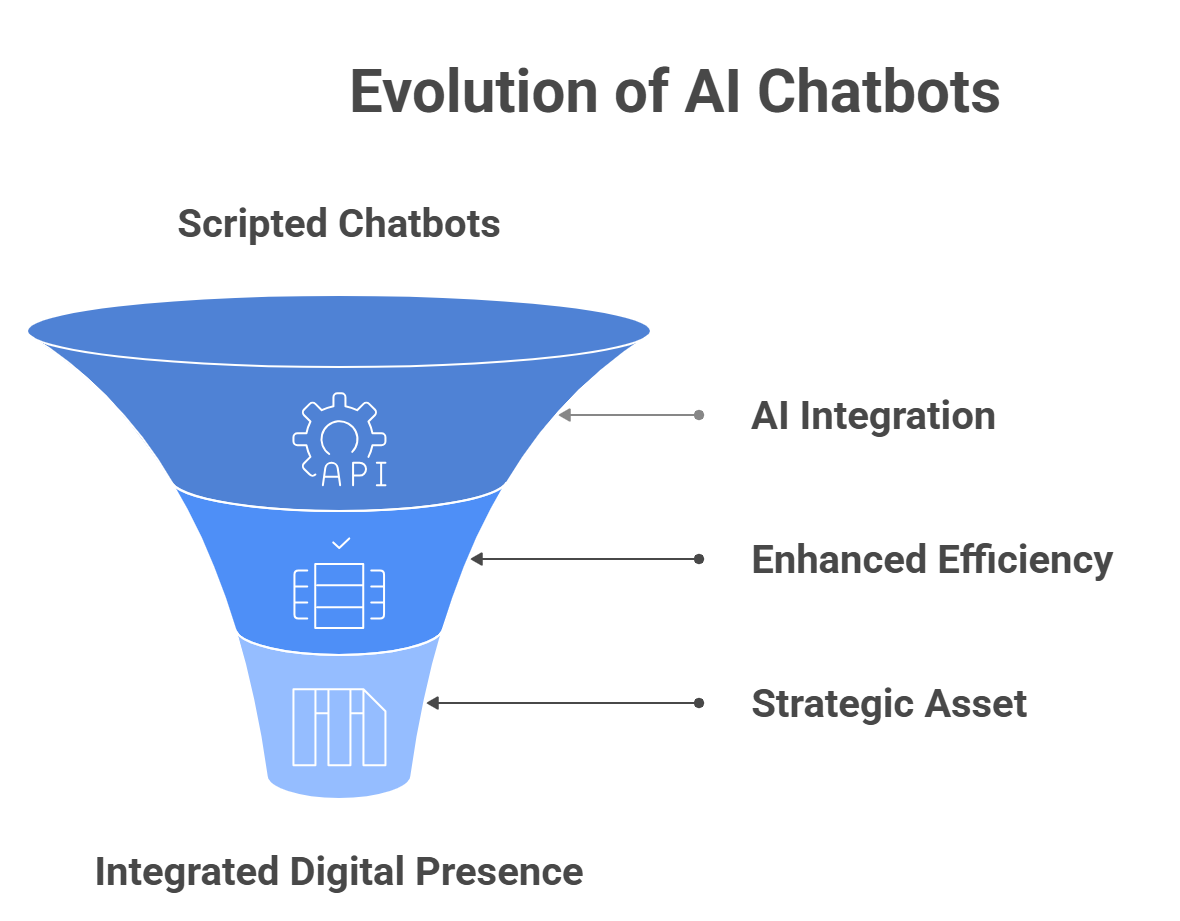

The landscape of digital customer interaction is undergoing a fundamental transformation, driven by the maturation of artificial intelligence. For years, website support was dominated by static FAQs and rudimentary, scripted chatbots that offered limited utility. Today, businesses are increasingly deploying sophisticated AI-powered conversational agents that not only resolve customer issues with unprecedented efficiency but also function as strategic assets for growth. This report provides a comprehensive framework for designing, implementing, and optimizing these AI-powered website support chatbots. It delves into the core technologies, outlines a structured development lifecycle, details essential features, establishes a methodology for performance measurement, and explores the future trajectory of this transformative technology. The analysis demonstrates that the modern AI chatbot has evolved far beyond a simple cost-saving tool into an integrated engine for enhancing customer experience, generating revenue, and building a brand’s intelligent digital presence.

1.1 Differentiating AI-Powered and Rule-Based Architectures

The decision to implement a website chatbot begins with a critical architectural choice between two fundamentally different models: rule-based systems and AI-powered systems. This choice dictates the chatbot’s capabilities, scalability, and ultimate strategic value to the organization.

Rule-Based (Decision-Tree) Chatbots

Rule-based chatbots, also known as decision-tree bots, represent the most basic form of chatbot technology. These systems operate on a rigid, pre-defined framework of “if/then” logic. Human agents map out every possible conversation path in a flowchart, anticipating specific customer questions and programming corresponding responses. Interaction is typically guided by buttons and pre-set options rather than free-form text.

The primary limitation of this architecture is its inflexibility. A rule-based chatbot cannot understand context, parse user intent, or handle any query that deviates from its programmed script. If a user types a phrase that doesn’t contain the exact keywords the bot is programmed to recognize, the conversation stalls, leading to user frustration and a likely escalation to a human agent. Furthermore, these bots cannot learn from their interactions; their intelligence is static and can only be improved by a human manually editing the conversational flow. While they can be deployed relatively quickly for simple, repetitive use cases like answering a handful of common questions, they lack the scalability and adaptability required for complex support environments.
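The keyword-matching behavior described above can be illustrated with a minimal sketch. The rule table, the canned responses, and the function name `rule_based_reply` are all hypothetical; a real decision-tree bot would usually be built in a visual flow editor rather than in code.

```python
# Hypothetical rule table: keyword -> canned response (illustrative only).
RULES = {
    "order status": "You can track your order under Account > Orders.",
    "password": "Use the 'Forgot password' link on the sign-in page.",
    "refund": "Our team will review your refund request.",
}
FALLBACK = "Sorry, I didn't understand that. Connecting you to an agent."


def rule_based_reply(message: str) -> str:
    """Return the first canned response whose keyword appears in the message."""
    text = message.lower()
    for keyword, response in RULES.items():
        if keyword in text:
            return response
    # No keyword matched: the conversation stalls and escalates.
    return FALLBACK


print(rule_based_reply("What is my order status?"))  # matches "order status"
print(rule_based_reply("Where is my package?"))      # same need, no keyword match
```

The second call asks for the same thing as the first but falls through to the fallback, which is the inflexibility the paragraph describes.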

AI-Powered (Conversational AI) Chatbots

In contrast, AI-powered chatbots represent a more sophisticated and dynamic architecture. These systems are built on a foundation of conversational AI technologies, primarily Natural Language Processing (NLP), Machine Learning (ML), and, more recently, Large Language Models (LLMs). Instead of relying on rigid scripts, AI chatbots are trained to understand the meaning and intent behind a user’s words, regardless of the specific phrasing used.

This ability to decipher free-form conversation allows them to handle a vastly wider and more complex range of inquiries. A key differentiator is their capacity to learn. Through machine learning algorithms, AI chatbots analyze past interactions to continuously improve their accuracy and effectiveness over time, becoming smarter with each conversation without requiring constant manual updates. This learning capability, combined with their ability to understand context and personalize responses, makes them a far more powerful and future-proof solution for businesses aiming to provide a truly intelligent and satisfying customer experience. The initial setup may require more data and training than a simple rule-based bot, but the long-term benefits in terms of scalability, reduced maintenance, and superior performance are substantial.

Table 1: Comparative Analysis: Rule-Based vs. AI-Powered Chatbots

| Feature | Rule-Based Chatbot | AI-Powered Chatbot |
| --- | --- | --- |
| Core Technology | Pre-defined “if/then” logic, flowcharts | Natural Language Processing (NLP), Machine Learning (ML), LLMs |
| Conversation Style | Structured, menu/button-driven, rigid | Free-form, natural, flexible, human-like |
| Context Understanding | None; limited to keyword matching | High; understands intent, sentiment, and conversational history |
| Learning Capability | None; static, requires manual updates | Continuous; learns from every interaction to improve accuracy |
| Scalability | Low; requires manual creation of new flows for each new case | High; can adapt to new topics and languages with additional data |
| Maintenance | High; requires constant script editing and flow updates | Low; self-improves through automated learning loops |
| Setup Time | Fast for simple cases | Longer initial training, but faster to scale long-term |
| Typical Use Cases | Simple FAQs, basic lead capture forms | Complex customer support, personalized sales, process automation |
| Cost Model | Lower initial development cost, higher long-term maintenance | Higher initial investment, lower long-term maintenance, higher ROI |

1.2 The Strategic Imperative: Quantifiable Business Benefits of AI Chatbots

The adoption of AI-powered chatbots is not merely a technological upgrade; it is a strategic business decision with profound and quantifiable benefits across operations, customer experience, and revenue growth. The justification for investing in this technology has evolved significantly. Initially, the focus was on operational efficiency and cost reduction. However, as the technology has matured, its role has expanded, transforming the support function from a cost center into a proactive engine for revenue generation and strategic data acquisition. This shift fundamentally alters the ROI calculation and elevates the chatbot from a simple IT project to a core component of a company’s digital strategy.

Operational Efficiency and Cost Reduction

The most immediate and measurable benefit of AI chatbots is the significant enhancement of operational efficiency. By automating responses to routine and repetitive queries (such as order status checks, password resets, or refund assistance), chatbots deflect a large volume of tickets from human agents. This automation liberates employees from monotonous tasks, allowing them to concentrate on more complex, high-value customer issues that require creativity and critical thinking.

This efficiency translates directly into cost savings. Chatbots can operate 24/7 without the staffing expenses associated with round-the-clock human support teams. As a business scales and its customer base grows, a single chatbot can handle thousands of conversations simultaneously, accommodating increased interaction volume without a proportional increase in headcount or operational costs. This scalability provides a significant financial advantage over traditional support models or outsourcing. Furthermore, by improving the employee experience and reducing tedious work, chatbots can contribute to lower employee churn and higher job satisfaction within the support team.

Enhanced Customer Experience (CX) and Satisfaction

From the customer’s perspective, the primary benefit is immediate, 24/7 access to support. AI chatbots eliminate the wait times associated with phone queues or email responses, providing instant answers to customer inquiries at any time of day. This immediacy is critical in a competitive digital marketplace where customers expect fast solutions.

Beyond speed, AI chatbots enhance the quality of the customer experience. They provide consistent and accurate information every time, drawing from a verified knowledge base, which prevents the inconsistencies that can arise when different human agents provide slightly different answers. Advanced AI capabilities enable a deeper level of service. By integrating with backend systems like a Customer Relationship Management (CRM) platform, chatbots can deliver highly personalized interactions, addressing customers by name and referencing their past purchase history to offer relevant suggestions. The ability to support multiple languages makes a brand more accessible to a global audience, further improving customer satisfaction. This combination of speed, accuracy, consistency, and personalization leads to demonstrably higher customer satisfaction (CSAT) scores and builds brand loyalty.

Revenue Generation and Business Growth

A pivotal shift in the strategic role of AI chatbots is their emergence as proactive tools for revenue generation. Modern chatbots are no longer passive responders; they are active participants in the sales and marketing funnel. They can engage website visitors proactively, ask qualifying questions to identify high-potential leads, and schedule product demos or sales calls, effectively automating the top of the sales funnel.

For e-commerce businesses, chatbots act as virtual shopping assistants, providing personalized product recommendations, answering questions about features, and guiding users toward a purchase. By intervening at critical moments, such as when a user shows signs of abandoning their shopping cart, a chatbot can offer assistance or a discount to encourage conversion, directly boosting sales. This capability to generate leads, nurture prospects, and automate cross-sell and upsell strategies transforms the chatbot from an operational expense into a direct contributor to the company’s top-line revenue.

Data Acquisition and Strategic Insights

In an era of increasing data privacy regulations and the decline of third-party cookies, AI chatbots have become an invaluable tool for first-party data collection. During natural, conversational interactions, chatbots can gather crucial information about customer preferences, pain points, common questions, and feedback. This data is captured directly from the source and can be fed into a CRM or analytics platform to build rich customer profiles.

The strategic value of this data is immense. It provides a real-time pulse on customer sentiment and behavior, allowing businesses to identify trends, refine product and service offerings, and personalize marketing campaigns with greater precision. By analyzing the questions that users ask, a business can identify gaps in its knowledge base or areas where its products are confusing, providing actionable insights for improvement across the entire organization.

1.3 Core Use Cases in Modern Digital Ecosystems

The versatility of AI-powered chatbots allows for their application across a wide range of business functions and industries, each tailored to specific objectives and user needs.

- Customer Service: This remains the primary and most widespread use case. Chatbots excel at providing frontline support by answering frequently asked questions (FAQs), troubleshooting common technical issues, processing returns and refunds, and providing real-time order tracking information. Their ability to handle high volumes of these routine inquiries allows human support teams to focus on resolving more intricate and sensitive customer cases. Real-world examples demonstrate significant impact; LATAM Airlines, for instance, utilized chatbots to reduce customer response times by 90% and resolve 80% of inquiries without human intervention, while Siemens Financial Services achieved an 86% customer satisfaction score with its AI agent implementation.

- Sales and E-commerce: In the retail and e-commerce sectors, AI chatbots function as sophisticated personal shopping assistants. They can help customers find products based on specific criteria (e.g., “green swimsuit under $50”), compare different options, check inventory levels, and guide them through the checkout process. By engaging proactively with users who linger on product pages or have items in their cart, chatbots can reduce cart abandonment rates and increase conversion by offering timely assistance or promotions.

- Lead Generation and Marketing: Chatbots are powerful tools for the top of the marketing and sales funnel. They can engage website visitors with targeted messages, ask qualifying questions to determine a visitor’s needs and budget, and capture essential contact information like names and email addresses. Based on the conversation, a chatbot can schedule a demo with a sales representative or add the qualified lead directly into the company’s CRM system, streamlining the lead nurturing process.

- Internal Support (HR & IT): The benefits of chatbot automation are not limited to external customers. Many organizations deploy internal chatbots to support their employees. An HR chatbot can answer common questions about company policies, benefits, and time-off requests, and even assist with the onboarding process for new hires. Similarly, an IT support chatbot can help employees troubleshoot common technical problems, such as resetting passwords or configuring software, reducing the burden on the internal helpdesk. Supermarket chain Tesco, for example, saw a 43% increase in employee self-service after implementing a chatbot-powered help center.

- Specialized Industries: The adaptability of AI chatbots has led to their adoption in various specialized industries. In financial services, bots provide secure assistance with account balance inquiries, fund transfers, and bill payments. In healthcare, they can help patients schedule appointments, receive medication reminders, and find information about services. In the real estate industry, chatbots can qualify potential buyers or renters by asking about their preferences, provide virtual property tours, and schedule viewings with agents. These industry-specific applications highlight the technology’s capacity to be trained on specialized knowledge and workflows.

Section 2: The Technological Core: Understanding the Engine of Conversational AI

To design and implement an effective AI chatbot, it is essential to understand the underlying technologies that power its ability to comprehend and generate human language. The intelligence of a modern chatbot is not a single monolithic entity but rather a sophisticated interplay of several fields within artificial intelligence, most notably Natural Language Processing (NLP), Machine Learning (ML), and Large Language Models (LLMs). This section deconstructs these core components, explaining how they work in concert to transform unstructured user input into meaningful actions and coherent responses.

2.1 Natural Language Processing (NLP): The Foundation of Understanding

Natural Language Processing is the foundational branch of AI that grants computers the ability to understand, interpret, manipulate, and generate human language, both in text and speech form. It is the “secret sauce” that bridges the gap between human communication and computer computation, moving interactions beyond rigid commands to fluid, context-aware conversations. Without NLP, a chatbot is merely a glorified flowchart, unable to handle the nuance, ambiguity, and variability inherent in how people naturally talk.

NLP itself is a broad field composed of several key subfields and processes that work together in a pipeline to deconstruct and respond to user input.

Core Subfields of NLP:

- Natural Language Understanding (NLU): NLU is a critical subset of NLP that focuses specifically on machine reading comprehension. Its primary goal is to determine the user’s intent—what the user is trying to accomplish—from their language. NLU algorithms analyze grammatical structure, sentiment, and the relationships between words and phrases to convert the unstructured chaos of human language into a structured, machine-readable format that the chatbot’s logic can process. For example, NLU is what allows a bot to recognize that “I need to change my flight” and “Can I get on a different plane?” are expressing the same fundamental intent.

- Natural Language Generation (NLG): NLG is the counterpart to NLU; it is responsible for generating the chatbot’s response. After the bot’s core logic determines what information to convey, NLG takes that structured data and translates it back into natural, human-like language. Effective NLG is what makes a bot sound conversational rather than robotic. It involves tasks like selecting the right words, structuring sentences correctly, and maintaining a consistent tone and personality.

The NLP Pipeline in Action: When a user sends a message to an NLP-powered chatbot, the input goes through a series of processing steps to be understood:

- Tokenization: The first step is to break down the raw text into its smallest constituent parts, or “tokens.” These tokens can be individual words, phrases, or even punctuation marks. For example, the sentence “I need help with my order #12345” would be tokenized into `["I", "need", "help", "with", "my", "order", "#12345"]`. This allows the system to analyze each component individually.

- Normalization: Before analysis, the tokens are standardized to reduce complexity. This process, known as normalization, can include converting all text to lowercase, correcting spelling mistakes, reducing words to their base form (stemming or lemmatization), and removing punctuation and “stop words” (common words like “the,” “a,” “is” that add little semantic value). This ensures that “Order,” “order,” and “orders” are all treated as the same concept.

- Named Entity Recognition (NER): NER is a crucial process for identifying and classifying key pieces of information, or “entities,” within the text. These entities are the specific data points needed to fulfill a user’s request. In the example above, NER would identify “12345” and classify it as an `OrderNumber`. Other common entities include dates, times, locations, names, and product IDs. Extracting these entities is fundamental to providing a relevant, context-specific response.
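The three pipeline steps above can be sketched in a few lines of standard-library Python. This is a deliberately simplified illustration, not how a production NLP library works: the stop-word list is a tiny made-up sample, and the entity rule assumes that order numbers are always written as `#` followed by digits.

```python
import re

# Tiny illustrative stop-word list (an assumption; real lists are much longer).
STOP_WORDS = {"i", "the", "a", "is", "with", "my"}

# Assumption: order numbers are written as '#' followed by digits.
ORDER_NUMBER = re.compile(r"#(\d+)")


def tokenize(text: str) -> list[str]:
    """Split raw text into whitespace-separated tokens."""
    return text.split()


def normalize(tokens: list[str]) -> list[str]:
    """Lowercase tokens, trim sentence punctuation, and drop stop words."""
    cleaned = []
    for token in tokens:
        token = token.lower().strip(".,!?")
        if token and token not in STOP_WORDS:
            cleaned.append(token)
    return cleaned


def extract_entities(text: str) -> dict[str, str]:
    """Return an OrderNumber entity if the text contains one."""
    match = ORDER_NUMBER.search(text)
    return {"OrderNumber": match.group(1)} if match else {}


message = "I need help with my order #12345"
tokens = tokenize(message)
print(tokens)                     # ['I', 'need', 'help', 'with', 'my', 'order', '#12345']
print(normalize(tokens))          # ['need', 'help', 'order', '#12345']
print(extract_entities(message))  # {'OrderNumber': '12345'}
```

In practice, a trained NER model would replace the regular expression, since real users rarely format identifiers consistently.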

2.2 Deconstructing the Process: Intent Recognition, Entity Extraction, and Context Management

While NLP provides the foundational tools for language understanding, the practical application within a chatbot revolves around three core processes: identifying what the user wants, extracting the information needed to act, and remembering the conversation’s context.

- Intent Recognition: This is the central task of NLU in a chatbot system. It involves classifying a user’s query into a predefined category of action or goal, known as an “intent”. For example, a retail chatbot might have intents like `TrackOrder`, `ProcessReturn`, or `CheckProductAvailability`. The system is trained on a large dataset of “utterances” (the various ways a user might phrase a request) for each intent. The machine learning model learns the linguistic patterns associated with each intent, enabling it to correctly classify new, unseen queries. For instance, it learns that “Where is my package?”, “What’s the delivery status?”, and “Track my shipment” all map to the same `TrackOrder` intent.

- Entity Extraction: Once the intent is recognized, the chatbot needs specific details to fulfill the request. This is where entity extraction comes in. For the `TrackOrder` intent, the critical entity is the order number. For a `BookFlight` intent, the key entities would be the `OriginCity`, `DestinationCity`, and `TravelDate`. The chatbot is trained to identify and extract these specific pieces of information from the user’s input. If an entity is missing, the chatbot’s logic knows to prompt the user for it (e.g., “I can help with that. What is your order number?”).

- Context and Session Management: A human conversation is not a series of disconnected questions and answers. Each turn builds upon the last. To mimic this, an intelligent chatbot must maintain context throughout a session. Context refers to the parameters and information gathered during the conversation, while a session is the entire interaction from start to finish, even if it’s interrupted. This “memory” allows the chatbot to engage in natural, multi-turn dialogues. For example, if a user asks, “Do you have it in blue?”, the chatbot uses the context of the previous turn (which was about a specific product) to understand what “it” refers to. This prevents the bot from frustratingly re-asking for information it has already been given and is a hallmark of a well-designed conversational experience.
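The interplay of these three processes can be sketched as a small multi-turn handler. The word-overlap scorer below is only a stand-in for a trained intent classifier, and the example utterances, the `REQUIRED` slot table, and the function names are hypothetical.

```python
import re

# Hypothetical example utterances per intent (stand-in for real training data).
TRAINING = {
    "TrackOrder": ["where is my package", "what's the delivery status", "track my shipment"],
    "ProcessReturn": ["i want to return an item", "how do i send this back"],
}
REQUIRED = {"TrackOrder": ["OrderNumber"], "ProcessReturn": ["OrderNumber"]}
PROMPTS = {"OrderNumber": "I can help with that. What is your order number?"}


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z']+", text.lower()))


def recognize_intent(message: str):
    """Pick the intent whose example shares the most words (Jaccard overlap)."""
    best_intent, best_score = None, 0.0
    msg = words(message)
    for intent, examples in TRAINING.items():
        for example in examples:
            ex = words(example)
            union = msg | ex
            score = len(msg & ex) / len(union) if union else 0.0
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent  # None when nothing overlaps


def extract_entities(message: str) -> dict[str, str]:
    """Assumption: any run of four or more digits is an order number."""
    match = re.search(r"\d{4,}", message)
    return {"OrderNumber": match.group(0)} if match else {}


def handle_turn(message: str, session: dict) -> str:
    """Update the session with this turn, then reply or ask for a missing slot."""
    intent = recognize_intent(message)
    if intent:
        session["intent"] = intent
    session.setdefault("entities", {}).update(extract_entities(message))
    intent = session.get("intent")
    if intent is None:
        return "Could you rephrase that?"
    for slot in REQUIRED[intent]:
        if slot not in session["entities"]:
            return PROMPTS[slot]
    return f"{intent} ready with {session['entities']}"


session = {}
print(handle_turn("Can you track my package?", session))  # asks for the order number
print(handle_turn("It's 12345", session))                 # intent remembered from turn one
```

The second message contains no intent at all; the request can be completed only because the session carried `TrackOrder` over from the first turn.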

The relationship between these components reveals a sophisticated architecture. The rise of Large Language Models (LLMs) might suggest that the structured approach of defining intents and entities is becoming obsolete. However, a deeper analysis indicates that a synthesis is occurring. LLMs provide unparalleled conversational fluency and flexibility, but the underlying business logic still often relies on identifying a core user goal (intent) and the necessary parameters (entities) to trigger a specific workflow, such as processing a refund or updating a CRM record. The LLM handles the “front end” of the conversation, using its superior language understanding to interpret a user’s messy, natural language input and distill it into a core intent and the relevant entities. This structured data is then passed to the backend business logic or API to execute a task. The design process is therefore not an either/or choice. LLMs make the user interaction more flexible and more intelligent, while a solid, structured foundation of intents and entities continues to drive the backend business processes. This hybrid approach is the key to building chatbots that are both conversationally adept and functionally powerful.
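A minimal sketch of this hybrid pattern follows. The `call_llm` function is a placeholder that returns a canned JSON string; in a real system it would call whichever model the team has chosen, prompted to emit an intent and entities. The handler names and JSON shape are assumptions for illustration.

```python
import json

FALLBACK = "Sorry, I couldn't process that. Let me connect you with an agent."


def call_llm(user_message: str) -> str:
    """Placeholder for a real LLM call (hypothetical); returns canned JSON."""
    return json.dumps({"intent": "ProcessRefund", "entities": {"OrderNumber": "12345"}})


def process_refund(entities: dict) -> str:
    return f"Refund started for order {entities['OrderNumber']}."


def track_order(entities: dict) -> str:
    return f"Order {entities['OrderNumber']} is on its way."


# Structured backend: each intent maps to a handler and its required entities.
HANDLERS = {
    "ProcessRefund": (process_refund, ["OrderNumber"]),
    "TrackOrder": (track_order, ["OrderNumber"]),
}


def route(user_message: str, llm=call_llm) -> str:
    """Let the LLM interpret the message, then dispatch on the structured result."""
    try:
        parsed = json.loads(llm(user_message))
    except json.JSONDecodeError:
        return FALLBACK
    if not isinstance(parsed, dict) or parsed.get("intent") not in HANDLERS:
        return FALLBACK
    handler, required = HANDLERS[parsed["intent"]]
    entities = parsed.get("entities") or {}
    missing = [name for name in required if name not in entities]
    if missing:
        return f"Could you share your {missing[0]}?"
    return handler(entities)


print(route("I'd like my money back for order 12345"))
```

Validating the model output before dispatching keeps the backend deterministic even when the model returns something unexpected.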

2.3 Architecting with Large Language Models (LLMs): Capabilities and Considerations

The recent and rapid advancement of Large Language Models (LLMs) has marked a new era in conversational AI, fundamentally transforming the capabilities of chatbots. Understanding LLMs and their underlying architecture is now critical for designing state-of-the-art support systems.

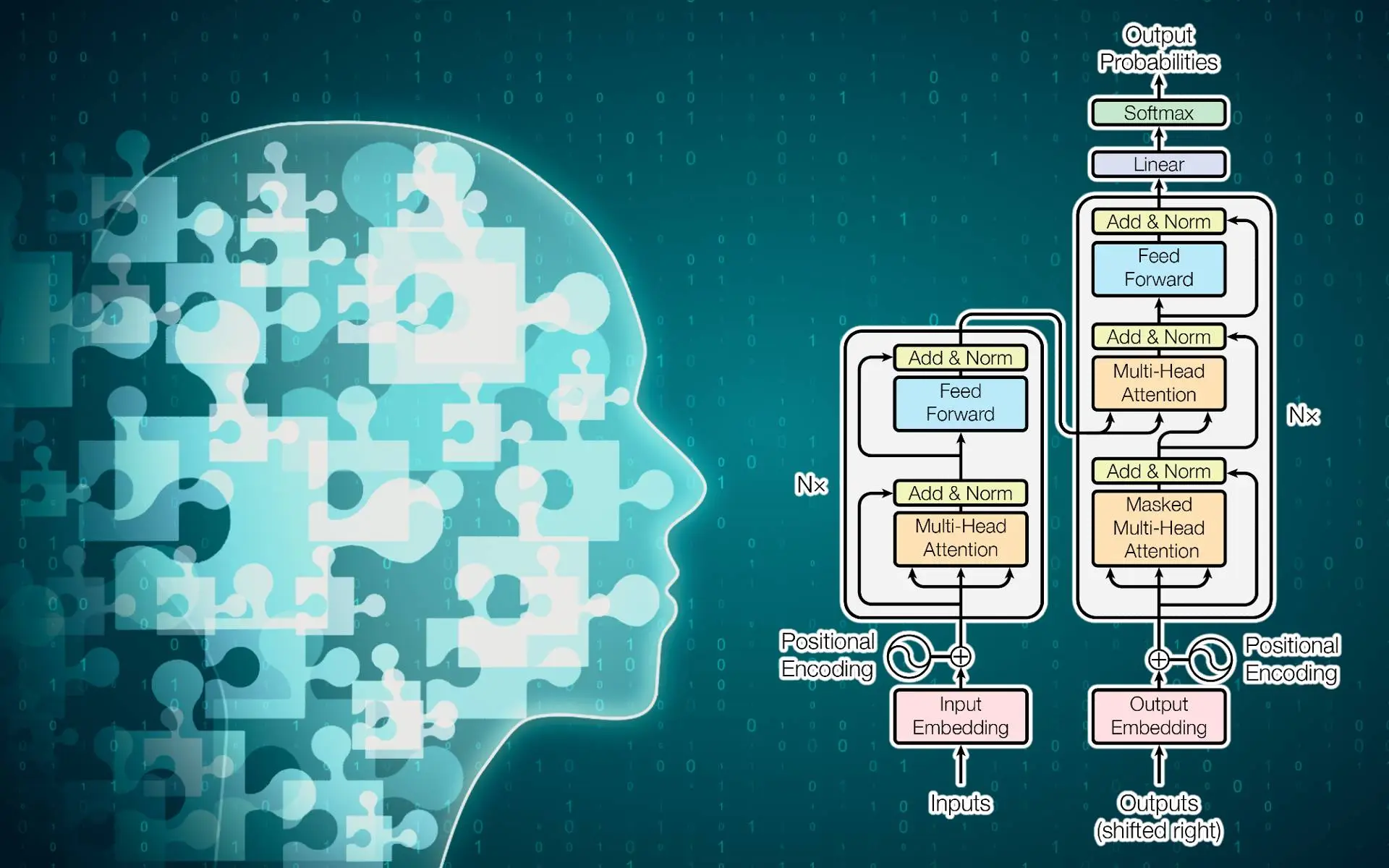

- What are LLMs? An LLM is a highly advanced type of AI program specifically designed to recognize, understand, and generate human-like text. These models are built on a specific type of neural network architecture known as a transformer and are trained on truly massive datasets, often encompassing a significant portion of the public internet, including text, books, and code. Through a process called deep learning, LLMs analyze these vast quantities of data to learn the intricate patterns, grammar, context, and semantic relationships of human language. Their core function is often probabilistic: given a sequence of words, they are exceptionally good at predicting the most likely next word, which allows them to generate coherent and contextually relevant text.

- The Role of Transformer Models: The breakthrough that enabled the power of modern LLMs is the transformer architecture, introduced in 2017. Unlike previous models that processed text sequentially (one word at a time), transformers can process entire sequences in parallel. Their key innovation is a mechanism called “self-attention,” which allows the model to weigh the importance of different words in the input text relative to each other. This enables the model to grasp long-range dependencies and understand context with a level of sophistication that was previously unattainable. For example, in the sentence “The robot picked up the ball and threw it,” the self-attention mechanism helps the model understand that “it” refers to “the ball,” not “the robot.” This deep contextual understanding is the foundation of an LLM’s linguistic prowess.

- How LLMs Transform Chatbots: The integration of LLMs is making chatbot conversations more natural, fluid, and intuitive than ever before. They move beyond the constraints of simple intent classification to handle more complex, ambiguous, and even emotionally nuanced conversations. Many modern chatbots are now architected as “LLM agents”—software that uses a powerful, general-purpose LLM as its conversational “brain” but is customized with specific business logic, data, and constraints to perform dedicated tasks. This allows a chatbot to handle unexpected user questions gracefully and engage in more open-ended dialogues, rather than hitting a dead end when a query doesn’t match a predefined intent.

- Training and Fine-Tuning LLMs: While LLMs like GPT-4 are pre-trained on vast general datasets, they must be adapted to be effective for a specific business. One approach is “fine-tuning,” where the general model is further trained on a smaller, curated dataset of company-specific information, such as product documentation, internal knowledge bases, and past customer support transcripts. This teaches the model the company’s specific terminology, tone of voice, and product details. A common and powerful complementary technique is Retrieval-Augmented Generation (RAG), which grounds the model at query time rather than retraining it. With RAG, when a user asks a question, the system first retrieves relevant documents or passages from the company’s private knowledge base. It then provides this retrieved information to the LLM as context along with the user’s question, instructing the LLM to generate an answer based only on the provided information. This approach significantly improves factual accuracy and reduces the risk of the LLM “hallucinating” or making up incorrect information, ensuring the chatbot’s responses are grounded in the company’s truth.
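The retrieve-then-generate flow of RAG can be sketched without any external service. In the illustration below, simple word overlap stands in for the vector similarity search a real system would typically use, the knowledge-base passages are invented, and the final LLM call is omitted: the sketch stops at the grounded prompt that would be sent to the model.

```python
import re

# Invented knowledge-base passages (illustrative only).
KNOWLEDGE_BASE = [
    "Refunds are issued to the original payment method.",
    "Standard shipping takes 3 to 7 business days.",
    "Passwords can be reset from the sign-in page.",
]


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[str]:
    """Rank passages by shared words with the question (stand-in for vector search)."""
    q = words(question)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda p: len(q & words(p)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved context and the question into a grounded prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


question = "How long does shipping take?"
prompt = build_prompt(question, retrieve(question))
print(prompt)  # this string would be sent to the LLM of choice
```

The instruction to answer only from the supplied context is what ties the model's response to the company's own documents.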

2.4 A Comparative Analysis of Leading LLMs for Conversational Applications

The selection of an LLM is a foundational architectural decision that impacts a chatbot’s performance, cost, and capabilities. The market offers a range of models, each with distinct strengths and weaknesses. The choice depends on an organization’s specific goals, technical expertise, and target audience.

- GPT-4o (OpenAI): GPT-4o is distinguished by its speed and native multimodal capabilities. It can process and generate responses based on a combination of text, audio, and image inputs, enabling richer and more natural human-computer interactions. OpenAI reports that it can respond to audio input in as little as 232 milliseconds (about 320 milliseconds on average), which is comparable to human conversational response time. It is also developing the ability to recognize emotion, a key challenge in conversational AI. Best practices for its implementation involve creating continuous feedback loops to refine its emotional intelligence and optimizing infrastructure to handle its diverse input types efficiently.

- Claude 3.5 Sonnet (Anthropic): Developed by Anthropic, the Claude series of models is built around a principle of “Constitutional AI,” which aims to ensure that outputs are helpful, harmless, and accurate. Claude 3.5 Sonnet shows improved performance in understanding nuance, context, and even humor, making it suitable for more sophisticated conversational experiences. The main challenge is balancing its primary directive of being helpful with the need for factual accuracy and real-time processing speeds. Regular model updates and a scalable infrastructure are key to leveraging its strengths.

- Gemini (Google): Google’s Gemini models are deeply integrated across its product ecosystem and are designed from the ground up for multimodality, seamlessly processing text, images, audio, and video. The most powerful variant, Gemini Ultra, performs at the top of many industry benchmarks. The primary challenges associated with Gemini involve the complexities of integrating its multimodal inputs and ensuring robust user privacy, a key concern for Google’s consumer-facing products. Best practices include adopting modular training approaches for flexibility and implementing strict data privacy controls.

- Open-Source Models (Llama 2, Mixtral 8x22B): Open-source models offer greater flexibility and control for organizations with the technical expertise to manage them. Meta’s Llama 2 is designed to be highly performant while requiring lower computational resources, making it adaptable for a wide range of conversational tasks. Mixtral 8x22B, from Mistral AI, is a powerful sparse “Mixture-of-Experts” (SMoE) model that is highly efficient and excels in multilingual and code-generation tasks. Challenges for these models include managing resource constraints (Llama 2) and efficiently handling sparse parameter utilization (Mixtral). Best practices involve using high-quality, curated training data and engaging with the open-source community for support.

- Region-Specific Models (Ernie 4.0): For businesses with a strong focus on specific linguistic markets, region-specific models can offer superior performance. Baidu’s Ernie 4.0 is a highly parameterized model that excels in Mandarin and has strong capabilities in other languages, making it a leading choice for applications targeting the Chinese market. The challenges lie in handling diverse cultural and linguistic nuances. The best practice is to fine-tune the model on business- and region-specific contexts to optimize its performance.

Table 2: Leading Large Language Models (LLMs) for Conversational AI

| Model | Developer | Key Strengths | Primary Challenges | Best Practices for Implementation |
| --- | --- | --- | --- | --- |
| GPT-4o | OpenAI | High speed (232 ms response), native multimodality (text, audio, image), developing emotional intelligence | Ensuring accurate emotional understanding, maintaining performance with diverse inputs | Implement continuous feedback loops to refine emotional intelligence; optimize infrastructure for multimodal data handling |
| Claude 3.5 Sonnet | Anthropic | Focus on safety and accuracy (“Constitutional AI”), strong understanding of nuance and humor | Balancing helpfulness with factual accuracy, ensuring real-time processing | Perform regular updates for contextual understanding; invest in scalable infrastructure for fast processing |
| Gemini | Google | Excellent native multimodal processing (text, image, audio, video), deep integration with Google products | Seamless integration of multimodal inputs, maintaining user privacy | Adopt modular training approaches for flexibility; ensure strict data privacy controls and compliance |
| Mixtral 8x22B | Mistral AI | High-performance open model, efficient sparse “Mixture-of-Experts” (SMoE) architecture, strong in multilingual and code tasks | Efficiently managing sparse parameter utilization, ensuring consistent multilingual support | Implement continuous language-specific training; optimize parameter utilization for the specific use case |
| Llama 2 | Meta | Open-source, designed for lower computational requirements while maintaining high performance, highly adaptable | Resource constraints, ensuring robustness and stability in production environments | Utilize high-quality, curated training data; use an orchestrator to manage multiple models for enhanced robustness |
| Ernie 4.0 | Baidu | Excels in Mandarin, strong capabilities in other languages, widely used in multilingual Asian markets | Handling diverse language nuances and optimizing for different cultural contexts | Customize fine-tuning for specific business and cultural contexts; use Retrieval-Augmented Generation (RAG) for knowledge grounding |

Section 3: The Development Lifecycle: A Strategic Framework for Implementation

Building an enterprise-grade AI chatbot is a complex undertaking that extends far beyond software development. It requires a structured, multi-phase approach that integrates strategic planning, user-centric design, rigorous technical execution, and deep integration with existing business systems. A successful implementation is not the result of a single team’s effort but a carefully orchestrated collaboration between business stakeholders, designers, and technical experts. This section outlines a five-phase lifecycle for developing and deploying a powerful and effective AI support chatbot.

3.1 Phase I: Strategic Definition and Platform Selection

The foundation of any successful chatbot project is a clearly defined strategy. Before any technical work begins, the organization must establish what the chatbot is meant to achieve and how its success will be measured.

- Define Clear Objectives & KPIs: The first step is to articulate the specific, measurable goals for the chatbot. These objectives must be tied to tangible business outcomes. For example, instead of a vague goal like “improve customer service,” a clear objective would be “reduce average customer response time by 50%” or “increase lead conversion from the website by 15%”. Defining these Key Performance Indicators (KPIs) from day one is crucial, as they will guide the entire design and development process and provide the benchmarks for evaluating the chatbot’s performance and ROI post-launch.

- Identify User-Focused Use Cases: With clear goals in mind, the next step is to identify the specific use cases the chatbot will handle. It is critical to prioritize use cases that solve genuine pain points for the customer, not just those that optimize internal processes. To do this effectively, organizations should develop detailed user personas to understand their target audience’s needs, preferences, technical savvy, and potential frustrations. This user-centric approach ensures that the chatbot is designed to be genuinely helpful, which is the ultimate driver of adoption and satisfaction.

3.2 Phase II: Designing the Conversational Experience (UX and Flow Mapping)

This phase focuses on the user-facing aspects of the chatbot: what it says, how it says it, and how it guides the user through an interaction. A poor conversational user experience (UX) can render even the most powerful AI ineffective.

- Map the Conversation Flow: Before using a digital builder, the initial design process should be low-fidelity. Sketching out the primary conversation paths on a whiteboard or with a digital collaboration tool like Miro is a highly effective practice. This process involves creating a chatbot flowchart, which is a visual diagram of the logical steps and decision points in a conversation. The map should begin with the various entry points (e.g., a user clicking a chat widget on the homepage vs. the pricing page) and then branch out based on the different user intents and potential responses.

- Best Practices for Flow Design:

- Simplicity and Focus: Avoid creating monolithic, overly complex conversation flows. If a task is complicated, it is better to break it down into several smaller, more focused sub-flows. This makes the chatbot easier to build, test, and maintain, and provides a clearer, less overwhelming experience for the user.

- Eliminate Dead Ends: Every conversational branch must lead to a logical conclusion, whether that is a resolved query, a completed action, or a handoff to a human agent. A user should never be left at a dead end where the bot simply says “I don’t understand” without offering a way forward.

- Design for Escalation (The “Off-Ramp”): No chatbot can handle 100% of queries. Therefore, designing clear and seamless “off-ramps” to human agents is a critical part of the flow. The chatbot should be designed to recognize explicit requests for human help (e.g., “talk to an agent”) as well as implicit signs of user frustration (e.g., repeated questions, negative sentiment). The handoff process itself should be smooth, transferring the full conversation context to the human agent.

- Guide the Interaction: Especially in complex flows, it is important to guide the user. Using interactive elements like buttons and quick replies can streamline the conversation, reduce the amount of typing required from the user, and prevent them from going off-track. This is particularly important for mobile users, where typing long responses is cumbersome.

- Develop a Chatbot Persona: A chatbot without a personality can feel robotic and unengaging. Crafting a distinct persona—whether it’s professional and direct, friendly and empathetic, or even humorous—can build a stronger connection with users. This persona should be a direct reflection of the brand’s identity and should be tailored to the expectations of the target audience. The tone, vocabulary, and even the use of emojis should be consistent throughout all chatbot interactions.
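The escalation "off-ramp" described in the flow-design practices above can be sketched as a small decision function. This is a minimal illustration, not a production design: the phrase list, the negative-word set, the `Conversation` structure, and the fallback threshold are all hypothetical, and the keyword check is only a crude stand-in for a real sentiment model.

```python
from dataclasses import dataclass, field

# Hypothetical phrase list and word set; tune these against real transcripts.
HANDOFF_PHRASES = ("talk to an agent", "speak to a human", "real person")
NEGATIVE_WORDS = {"useless", "frustrated", "angry", "terrible", "ridiculous"}


@dataclass
class Conversation:
    user_messages: list[str] = field(default_factory=list)
    fallback_count: int = 0  # times the bot answered "I don't understand"


def should_escalate(conv: Conversation, max_fallbacks: int = 2) -> tuple[bool, str]:
    """Return (escalate?, reason) for the current state of the conversation."""
    if not conv.user_messages:
        return False, "no_messages"
    latest = conv.user_messages[-1].lower()

    # Explicit request for a human.
    if any(phrase in latest for phrase in HANDOFF_PHRASES):
        return True, "explicit_request"

    # Implicit signal 1: the user repeats the same question.
    normalized = [m.strip().lower() for m in conv.user_messages]
    if len(normalized) >= 2 and normalized[-1] == normalized[-2]:
        return True, "repeated_question"

    # Implicit signal 2: crude keyword-based negative sentiment.
    words = set(latest.replace("!", " ").replace(".", " ").split())
    if NEGATIVE_WORDS & words:
        return True, "negative_sentiment"

    # Implicit signal 3: too many fallback replies.
    if conv.fallback_count >= max_fallbacks:
        return True, "too_many_fallbacks"

    return False, "continue"
```

Returning a reason alongside the decision lets the handoff step pass that context to the human agent together with the transcript.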

3.3 Phase III: Data Preparation and Model Training

This phase is the technical heart of building an intelligent chatbot. The performance, accuracy, and overall quality of the AI model are directly dependent on the quality and relevance of the data it is trained on.

- Data Collection: The first step is to gather a large and diverse corpus of relevant data. This data will form the chatbot’s knowledge base and training set. Key sources include historical customer support chat logs and emails, transcripts from call centers, the company’s public-facing FAQ pages, internal product documentation and knowledge bases, CRM data, and even conversations from social media and online industry forums.

- Data Preprocessing and Cleaning: Raw data from these sources is often messy and unstructured. It must be rigorously cleaned and preprocessed before it can be used for training. This involves removing personally identifiable information (PII), deleting irrelevant or repetitive data, correcting spelling and grammatical errors, and standardizing data formats. This step is crucial to prevent the AI model from learning incorrect patterns or biases from the raw data.

- Data Annotation (Intent and Entity Tagging): For chatbot architectures that rely on an intent-based model (including hybrid LLM systems), this is a critical and often labor-intensive step. It involves annotating the cleaned data by labeling each user query (utterance) with its corresponding intent (e.g., TrackOrder) and tagging the specific entities within the text (e.g., labeling “12345” as an OrderNumber). It is essential to ensure that the training data is balanced across all defined intents to prevent the model from becoming biased towards the most common queries.

- Model Training: With the prepared data, the AI model is trained. In a traditional ML approach, the annotated dataset is used to train a classification model to recognize intents and an extraction model to identify entities. In an LLM-based approach, this phase typically involves fine-tuning the large, pre-trained model on the company’s specific knowledge base and conversational data. The model learns the company’s unique language, products, and processes, enabling it to generate accurate and contextually appropriate responses.
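The cleaning and annotation steps above can be illustrated with a short sketch. It assumes plain-text utterances and uses deliberately simplified regular expressions for PII; real PII detection needs far broader coverage. The function names and the annotation layout (character-offset entity spans) are hypothetical choices for illustration, not a specific platform's format.

```python
import re
from collections import Counter

# Simplified patterns for illustration only.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def clean_corpus(utterances: list[str]) -> list[str]:
    """Redact PII, normalize whitespace, and drop empty or duplicate utterances."""
    seen, cleaned = set(), []
    for raw in utterances:
        text = " ".join(redact_pii(raw).split())
        key = text.lower()
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned


def intent_balance(examples: list[dict]) -> dict[str, float]:
    """Share of training examples per intent, to spot under-represented intents."""
    counts = Counter(ex["intent"] for ex in examples)
    total = sum(counts.values())
    return {intent: n / total for intent, n in counts.items()}


# Annotated examples: an intent label plus entity spans as character offsets.
annotated = [
    {
        "text": "Where is order 12345?",
        "intent": "TrackOrder",
        "entities": [{"start": 15, "end": 20, "label": "OrderNumber"}],
    },
    {"text": "I want my money back", "intent": "RequestRefund", "entities": []},
    {"text": "Track my package", "intent": "TrackOrder", "entities": []},
]
```

Calling `intent_balance(annotated)` before training gives a quick view of which intents dominate the dataset and which need more examples.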

3.4 Phase IV: Testing, Evaluation, and Deployment

Before the chatbot is released to the public, it must undergo extensive testing to ensure it is effective, reliable, and free of major flaws.

- Rigorous Testing: The testing process should be comprehensive, evaluating the chatbot against a wide range of scenarios and user inputs. Key metrics to check during testing include accuracy (does it understand the intent correctly?), response relevance, response time, and goal completion rate (does it successfully complete the tasks it’s designed for?). This involves both automated testing with predefined scripts and manual testing where team members try to “break” the bot with unexpected or ambiguous queries.

- Preview Functionality and Beta Testing: Most modern chatbot platforms offer a preview function that allows designers to interact with the bot and test the conversation flow before it is live. In addition to internal testing, conducting a beta test with a small group of real users is invaluable. Beta testers can provide authentic feedback on the conversational experience and help identify issues that internal teams might have missed.

- Deployment Strategy: Once testing is complete and the chatbot is deemed ready, a deployment strategy is enacted. This involves choosing the channels where the chatbot will be deployed, such as the company website, a mobile app, or third-party messaging platforms like WhatsApp or Facebook Messenger. It is crucial to ensure that the chatbot widget is clearly visible and easily accessible on these channels to encourage user adoption. The deployment should ideally support an omnichannel experience, allowing for a consistent and seamless conversation as users move between different devices and platforms.
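The automated part of the testing described above can be as simple as replaying labeled utterances through the model and recording mismatches. The sketch below assumes an intent-based bot exposed as a `predict` callable; `toy_predict` is a hypothetical keyword stand-in so the example runs, and would be replaced by a call to the real model.

```python
from typing import Callable


def evaluate_intents(
    predict: Callable[[str], str],
    test_cases: list[tuple[str, str]],
) -> dict:
    """Run each (utterance, expected_intent) pair through `predict` and score it."""
    failures = []
    for utterance, expected in test_cases:
        predicted = predict(utterance)
        if predicted != expected:
            failures.append(
                {"utterance": utterance, "expected": expected, "predicted": predicted}
            )
    total = len(test_cases)
    accuracy = (total - len(failures)) / total if total else 0.0
    return {"accuracy": accuracy, "failures": failures}


# Stand-in keyword classifier so the sketch runs; replace with the real model call.
def toy_predict(utterance: str) -> str:
    text = utterance.lower()
    if "order" in text or "package" in text:
        return "TrackOrder"
    if "refund" in text:
        return "RequestRefund"
    return "Fallback"


cases = [
    ("Where is my order?", "TrackOrder"),
    ("I need a refund", "RequestRefund"),
    ("My parcel never arrived", "TrackOrder"),  # wording the toy model misses
]
report = evaluate_intents(toy_predict, cases)
```

The `failures` list is the useful output for manual review: each entry is a concrete utterance the bot misread, which can be added to the training data or used to refine a flow.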

3.5 Phase V: Integration with Enterprise Systems (CRM, Helpdesk)

A standalone chatbot has limited value. The true power of an enterprise-grade chatbot is unlocked through its deep integration with other core business systems. This integration transforms the chatbot from a simple conversational interface into a powerful tool for process automation and personalization. However, this is also the phase where the “no-code” promise often meets technical reality. While many platforms offer user-friendly, no-code interfaces for designing the front-end conversation, the back-end integration required to power the most valuable features is a highly technical task. It necessitates a close collaboration between business teams, who define the use cases, and technical teams, who implement the complex connections.

- CRM Integration: Integrating the chatbot with a Customer Relationship Management (CRM) system is arguably the most critical integration. This connection allows the chatbot to access the rich history of customer data stored in the CRM. With this data, the chatbot can deliver true personalization: greeting a returning customer by name, understanding their purchase history, and providing support that is aware of their specific context. The integration is a two-way street; the chatbot can also write data back to the CRM in real-time. It can automatically create new lead records, log every customer interaction, create support tickets, and update contact information, eliminating manual data entry for human agents and ensuring the CRM remains a single source of truth.

- Helpdesk Integration: For support-focused chatbots, integration with the company’s helpdesk or ticketing system is essential. This enables the seamless human handoff process. When a chatbot needs to escalate a conversation, the integration allows it to automatically create a new support ticket in the helpdesk system, assign it to the correct department, and pass along the entire chat transcript and user context to the human agent. This prevents the customer from having to repeat their issue and equips the agent with all the necessary information to resolve it efficiently.

- Technical Considerations: Achieving these integrations is a complex technical challenge. It typically requires working with Application Programming Interfaces (APIs) provided by both the chatbot platform and the enterprise systems. Developers may need to build middleware to act as a bridge between the systems, handling data transformation, mapping fields from the chatbot to the CRM, and managing data synchronization protocols (e.g., real-time updates via webhooks vs. periodic batch updates). Throughout this process, maintaining robust data security and privacy, including encryption and secure authentication, is paramount.
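The field mapping and handoff payload described above might look like the following middleware sketch. Every field name here (`user_email`, `FullName`, `LastIntent`, and so on) is hypothetical; real CRM and helpdesk schemas differ, and the actual API call, authentication, and retry handling are intentionally left out.

```python
import json
from datetime import datetime, timezone

# Hypothetical field names on both sides; real CRM/helpdesk schemas will differ.
CHATBOT_TO_CRM_FIELDS = {
    "user_email": "Email",
    "user_name": "FullName",
    "detected_intent": "LastIntent",
}


def map_to_crm(session: dict) -> dict:
    """Translate chatbot session fields to CRM field names, skipping missing values."""
    return {
        crm_field: session[bot_field]
        for bot_field, crm_field in CHATBOT_TO_CRM_FIELDS.items()
        if session.get(bot_field) is not None
    }


def build_handoff_ticket(session: dict, department: str) -> str:
    """Build a JSON ticket body carrying the transcript and user context."""
    ticket = {
        "department": department,
        "created_at": datetime.now(timezone.utc).isoformat(),
        "contact": map_to_crm(session),
        "transcript": session.get("messages", []),
    }
    return json.dumps(ticket)
```

Keeping the mapping in a single dictionary means a schema change on either side is a one-line edit rather than a change scattered through the integration code.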

Section 4: Essential Features and Capabilities of an Enterprise-Grade Support Chatbot

A modern, enterprise-grade AI support chatbot is defined by a suite of features that collectively enable it to deliver efficient, personalized, and reliable service. These capabilities can be categorized into foundational, non-negotiable features that form the bedrock of any competent system, and advanced, differentiating features that elevate the chatbot into a truly intelligent and strategic asset. The effectiveness of nearly all these features, however, is not inherent to the chatbot itself but is unlocked and amplified by the depth of its integration with backend enterprise systems.

4.1 Foundational Features: The Non-Negotiables

These are the core capabilities that users and businesses expect from any modern support chatbot. Their absence would signify a critical failure in the system’s design.

- 24/7 Availability: A primary driver for chatbot adoption is the ability to provide round-the-clock support. The chatbot must be available to answer customer queries instantly, at any time of day or night, and across all global time zones, ensuring no query goes unanswered.

- Omnichannel Support: Customers interact with brands across a multitude of digital touchpoints. An effective chatbot must therefore offer omnichannel support, providing a consistent and seamless experience whether the user is on the company website, a native mobile app, or third-party messaging platforms like WhatsApp, Facebook Messenger, or Slack. A truly omnichannel experience allows a user to begin a conversation on one channel and seamlessly continue it on another without losing context.

- Seamless Human Handoff: Recognizing that no AI can resolve every issue, a smooth and intelligent escalation path to a human agent is essential. When a query becomes too complex, the user expresses significant frustration, or they explicitly request to speak to a person, the chatbot must execute a seamless handoff. This process is more than a simple transfer; it involves passing the entire conversation context, chat history, and relevant user data from the CRM to the human agent. This critical step ensures the customer does not have to repeat their problem, which is a major source of frustration, and equips the agent to resolve the issue efficiently.

- Data Privacy and Security: As chatbots often handle sensitive personal and financial information, robust security features are non-negotiable. The platform must ensure data privacy through measures like encryption of all conversations in transit and at rest, secure authentication protocols, and granular access controls for authorized personnel. Furthermore, the system must be designed to comply with relevant data protection regulations, such as the GDPR in Europe and the CCPA in California, to maintain customer trust and avoid significant legal and financial penalties.

4.2 Advanced Capabilities: The Differentiators

These advanced features distinguish a truly intelligent chatbot from a basic one. They are powered by more sophisticated AI and deeper system integrations, and they are the key to delivering a superior and personalized customer experience.

- AI-Driven Learning and Adaptability: A hallmark of an advanced AI chatbot is its ability to learn and improve over time. Through machine learning, the chatbot should analyze the outcomes of past interactions, identify patterns in user queries, and automatically refine its responses to become more accurate and effective without requiring constant manual reprogramming. This self-learning capability ensures the chatbot evolves alongside customer needs and business changes.

- Sentiment Analysis: Moving beyond literal comprehension, sentiment analysis allows a chatbot to detect the user’s emotional state—such as happiness, frustration, confusion, or anger—from the language, tone, and phrasing they use. By gauging sentiment, the chatbot can tailor its responses to be more empathetic, adjust its tone to de-escalate a tense situation, or recognize when a frustrated user needs to be immediately escalated to a human agent. This emotional intelligence is crucial for managing customer relationships effectively.

- Multilingual Support: For any business with a global or diverse customer base, the ability to communicate in multiple languages is a powerful differentiator. Modern chatbots can be trained to understand and respond fluently in dozens of languages, breaking down communication barriers and making support more accessible and trustworthy for international customers.

- Personalization: This capability goes far beyond simply using a customer’s first name. True, deep personalization is achieved by integrating the chatbot with the CRM system. This allows the bot to access a customer’s entire interaction history, past purchases, and stated preferences. Armed with this context, the chatbot can provide highly tailored product recommendations, offer support that is relevant to the specific products a customer owns, and create a unique, one-to-one experience that fosters loyalty and drives engagement.

- Multimedia and Interactive Elements: To make conversations more engaging and effective, modern chatbots should support more than just plain text. The ability to send and receive multimedia elements—such as images, videos, GIFs, and clickable carousels—can significantly enhance the user experience. For example, a support bot could show a user a video tutorial to solve a problem, or an e-commerce bot could display a carousel of product images with “Buy Now” buttons, making the interaction more dynamic and visually appealing.

4.3 Integration and API Connectivity

The value of the features described above is directly proportional to the chatbot’s ability to connect with and exchange data with other enterprise systems. A chatbot’s features should not be viewed as standalone modules but as capabilities that are unlocked by a robust integration architecture.

- CRM and Helpdesk Integration: As previously detailed, these are the most critical integrations. CRM connectivity powers personalization and sales automation, while helpdesk integration enables streamlined support workflows and seamless agent handoffs. A platform’s native, out-of-the-box integrations with major systems like Salesforce, HubSpot, and Zendesk are a significant advantage.

- API for Custom Integrations: No platform can have a native integration for every tool a business uses. Therefore, a powerful and well-documented Application Programming Interface (API) is an essential feature. An API allows developers to build custom connections between the chatbot and any third-party or internal system. This could include connecting to an e-commerce platform’s database to check real-time inventory, a shipping provider’s system to get live tracking updates, or a company’s internal ERP to retrieve customer data.

- Integration with other AI Technology: The chatbot platform should also be flexible enough to integrate with other specialized AI services. This allows a business to enhance its chatbot with cutting-edge capabilities from providers like Google Dialogflow or Microsoft Azure Cognitive Services, such as advanced voice recognition, predictive analytics, or more powerful language models, without being locked into the chatbot vendor’s native AI alone.

This deep interconnectedness means that when evaluating chatbot platforms, leaders must look beyond a simple checklist of features. The more critical evaluation point is the platform’s integration architecture and API capabilities. A platform with a robust, flexible integration layer is ultimately more powerful and future-proof than one with a long list of siloed, non-integrated features.

Section 5: Measuring Success: Analytics, KPIs, and Continuous Improvement

Deploying an AI chatbot is not the end of the development lifecycle; it is the beginning of a continuous cycle of measurement, analysis, and optimization. A “set it and forget it” approach will lead to a stagnant and increasingly ineffective tool. The key to maximizing a chatbot’s value and ensuring a high return on investment lies in establishing a robust framework for monitoring its performance and using those insights to drive ongoing improvements. This requires selecting the right Key Performance Indicators (KPIs) that align with strategic goals and implementing a systematic feedback loop.

5.1 A Framework for Chatbot Performance Measurement: Key KPIs

The sheer number of available chatbot metrics can be overwhelming. The critical first step is to select a focused set of KPIs that directly reflect the chatbot’s primary business objectives. A chatbot designed for lead generation should not be measured by the same primary metrics as one designed for technical support cost reduction. Tracking the wrong KPIs can lead to optimizing for the wrong behaviors—for example, a sales bot that is too focused on containment might fail to escalate valuable leads to human agents. Therefore, the analytics strategy must be a direct reflection of the business strategy.

The most important KPIs can be grouped into three categories:

User Engagement Metrics: These metrics measure how, and how often, customers are interacting with the chatbot. They provide a high-level view of the bot’s visibility and appeal.

- Interaction Volume & Total Users: The total number of conversations initiated with the chatbot, and the number of distinct users who started them, over a specific period. A low or declining number of users may indicate that the chatbot is not visible enough on the website or that there are technical issues preventing access.

- User Engagement Rate: The percentage of users who actively interact with the chatbot (e.g., send a message, click a button) after it has been triggered. This is a crucial measure of the bot’s ability to capture a user’s attention. A commonly cited benchmark for engagement rate is around 35-40%, though the figure varies with industry and widget placement.

- Retention Rate: The percentage of users who return to use the chatbot multiple times over a given period. A high retention rate suggests that users find the chatbot valuable and helpful.

Conversational Quality Metrics: These KPIs assess the effectiveness and efficiency of the conversations themselves.

- Containment Rate (or Self-Service Rate): This is arguably the most important metric for a support chatbot. It measures the percentage of conversations that are successfully resolved by the chatbot without needing to be escalated to a human agent. A high containment rate is a primary indicator of the bot’s effectiveness and its ability to reduce the workload on the support team.

- Escalation Rate: The complement of the containment rate (the two sum to 100%), this metric tracks how often the chatbot has to transfer a conversation to a human. While a low escalation rate is generally desirable, it must be balanced against customer satisfaction to ensure the bot isn’t frustrating users by failing to escalate when necessary.

- Average Handling Time (AHT) & Conversation Length: This measures the average time or number of messages it takes for the chatbot to resolve a user’s query. A shorter AHT can indicate efficiency, but it must be analyzed in context. Very short conversations might mean the bot is providing quick answers, or they could indicate that users are abandoning the chat out of frustration.

- Error Rate (or Non-Response Rate): This tracks how frequently the chatbot misunderstands a user’s query or fails to provide any response. A high error rate points to deficiencies in the NLU model or gaps in the knowledge base.

Business Impact Metrics: These metrics connect the chatbot’s performance directly to tangible business outcomes and ROI.

- Goal Completion Rate (GCR): This measures how often the chatbot successfully guides a user to complete a specific, predefined goal, such as scheduling a demo, subscribing to a newsletter, or successfully processing a return. GCR is a powerful metric for measuring the effectiveness of task-oriented bots.

- Customer Satisfaction (CSAT): This is a direct measure of user happiness with the chatbot interaction. It is typically measured by asking the user to rate their experience (e.g., on a scale of 1-5 or with a simple thumbs up/down) at the end of the conversation. CSAT is a critical indicator of the overall quality of the customer experience.

- Conversion Rate: For chatbots involved in sales or marketing, the conversion rate is a vital KPI. It tracks the percentage of chatbot conversations that result in a desired business outcome, such as a completed purchase, a qualified lead, or a sign-up. This metric directly ties the chatbot’s activity to revenue generation.

- Return on Investment (ROI): The ultimate measure of business impact, ROI compares the total cost of the chatbot (platform fees, development, maintenance) against the total value it generates. This value is a combination of cost savings (from deflected tickets and reduced agent time) and new revenue (from conversions and leads).

Table 3: Key Performance Indicators (KPIs) for Chatbot Evaluation

| KPI | Definition | Formula / How to Measure | Strategic Importance (What it tells you) |
| --- | --- | --- | --- |
| Containment Rate | Percentage of interactions handled by the bot without human escalation. | (Total Conversations - Escalated Conversations) / Total Conversations | Measures the bot’s efficiency and its ability to reduce human agent workload. A primary indicator of automation success. |
| Customer Satisfaction (CSAT) | The average satisfaction score given by users after an interaction. | Measured via post-chat surveys (e.g., 1-5 scale, thumbs up/down). | Directly assesses the quality of the user experience. High CSAT indicates the bot is helpful and easy to use. |
| Goal Completion Rate (GCR) | The percentage of interactions where the user successfully completes a specific, predefined goal. | (Number of Completed Goals / Number of Conversations with Goal) | Measures the bot’s effectiveness at performing specific tasks (e.g., booking an appointment, processing a return). |
| Conversion Rate | The percentage of users who take a desired action (e.g., purchase, sign-up) after interacting with the bot. | (Number of Conversions / Number of Engaged Users) | Directly links the chatbot’s performance to revenue generation and lead acquisition. Crucial for sales/marketing bots. |
| Escalation Rate | The percentage of interactions that are transferred to a human agent. | Escalated Conversations / Total Conversations | Identifies the limits of the bot’s capabilities and highlights areas where the knowledge base or flows need improvement. |
| User Engagement Rate | The percentage of users who interact with the bot after it is triggered. | (Users Who Interacted / Users Who Saw the Bot) * 100 | Indicates the effectiveness of the bot’s welcome message and visibility. A low rate suggests the bot is not capturing user attention. |
| Return on Investment (ROI) | The overall financial value generated by the chatbot compared to its cost. | (Value Gained - Cost of Investment) / Cost of Investment | The ultimate measure of business success, combining cost savings from automation with revenue generated from conversions. |
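The formulas in Table 3 translate directly into code. The sketch below is one possible arrangement, assuming the raw counts are already available from the analytics platform; the `ChatbotStats` field names are hypothetical, and all rates are returned as fractions between 0 and 1 (multiply by 100 for percentages).

```python
from dataclasses import dataclass


@dataclass
class ChatbotStats:
    total_conversations: int
    escalated_conversations: int
    users_shown_bot: int
    users_interacted: int
    goal_conversations: int
    goals_completed: int
    conversions: int


def ratio(numerator: float, denominator: float) -> float:
    """Safe division that returns 0.0 when there is no data yet."""
    return numerator / denominator if denominator else 0.0


def compute_kpis(s: ChatbotStats, value_gained: float, cost: float) -> dict[str, float]:
    """Apply the Table 3 formulas to raw counts for one reporting period."""
    contained = s.total_conversations - s.escalated_conversations
    return {
        "containment_rate": ratio(contained, s.total_conversations),
        "escalation_rate": ratio(s.escalated_conversations, s.total_conversations),
        "engagement_rate": ratio(s.users_interacted, s.users_shown_bot),
        "goal_completion_rate": ratio(s.goals_completed, s.goal_conversations),
        "conversion_rate": ratio(s.conversions, s.users_interacted),
        "roi": ratio(value_gained - cost, cost),
    }
```

Because containment and escalation are computed from the same two counts, they always sum to 1, which makes a convenient sanity check on the underlying data.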

5.2 Establishing the Feedback Loop: From Analytics to Actionable Improvement

Measuring KPIs is only useful if the insights are used to drive improvements. A successful chatbot strategy relies on a continuous feedback loop where data is constantly collected, analyzed, and used to refine the system.

- The Continuous Improvement Cycle: The philosophy behind chatbot management should be one of perpetual iteration: Monitor performance, analyze the data to identify weaknesses and opportunities, implement improvements, and then repeat the cycle.

- Data Collection for Analysis: Three primary sources of data fuel this improvement cycle:

- Chat Transcripts: Regularly reviewing the full transcripts of both successful and failed conversations is essential. These logs provide qualitative insights into the specific pain points customers are experiencing, the questions the bot struggles with, and the language users are naturally using.

- Direct User Feedback: Actively soliciting feedback is the most direct way to understand user satisfaction. This can be done through simple post-chat surveys asking for a CSAT score or a thumbs up/down rating, or by offering an optional open-ended question for more detailed comments.

- Analytics Dashboards: The chatbot platform’s analytics dashboard provides the quantitative data. It allows teams to monitor the KPIs defined above in real-time, track trends over time, and drill down into specific metrics to diagnose problems.

- From Insights to Action: The analysis of this data should lead to concrete actions aimed at improving the chatbot’s performance.

- Identify and Fill Knowledge Gaps: If analytics show that many users are asking the same question that the bot cannot answer (a high error rate for a specific topic), this signals a clear gap in the chatbot’s knowledge base. The team can then create new content or a new conversational flow to address this unmet need.

- Refine Conversation Flows: Analyzing metrics like bounce rate or conversation length for specific parts of a flow can reveal where users are getting stuck or dropping off. If a particular flow has a high abandonment rate, it may be too long, confusing, or asking for too much information. The team can then simplify the flow, clarify the language, or break it into smaller steps.

- Retrain the AI Model: The data collected from user interactions, especially the queries that the bot failed to understand, is an invaluable resource for retraining the AI model. By feeding these new utterances back into the training dataset, the NLU model’s accuracy and intent recognition capabilities can be continuously improved, making it smarter and more effective over time.
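Identifying knowledge gaps from failed queries, as described above, can start with a simple frequency count. This sketch assumes the platform can export the list of queries the bot failed to answer; it groups them by exact match after light normalization, which is a crude approach. Clustering paraphrases of the same question would need a more capable technique.

```python
from collections import Counter


def top_knowledge_gaps(
    failed_queries: list[str], min_count: int = 2, limit: int = 5
) -> list[tuple[str, int]]:
    """Count normalized unanswered queries and return the most frequent ones."""
    # Lowercase, collapse whitespace, and strip trailing punctuation.
    normalized = (" ".join(q.lower().split()).rstrip("?!. ") for q in failed_queries)
    counts = Counter(q for q in normalized if q)
    return [(q, n) for q, n in counts.most_common(limit) if n >= min_count]
```

The `min_count` threshold filters out one-off questions so the team can focus on gaps that affect many users.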

5.3 Optimizing Performance: A Data-Driven Approach to Refinement

Beyond fixing problems, a data-driven approach can also be used to proactively optimize the chatbot for better performance.

- A/B Testing: To improve metrics like user engagement or goal completion, teams can run A/B tests. This involves creating two versions of a conversational element—such as different welcome messages, different button text, or slightly different conversation flows—and showing each version to a segment of the user base. By comparing the performance of the two versions, the team can identify which one is more effective and adopt it for all users.

- Personalization and Content Optimization: Analytics can reveal different segments of users with different needs. This insight can be used to further personalize the chatbot’s interactions for each segment. Additionally, by identifying the most frequently asked questions, businesses can prioritize optimizing the knowledge base articles and FAQ content related to those topics, ensuring the bot always has the best possible answer to the most common queries.

- Aligning the Team: Chatbot performance is a cross-functional concern. Sharing the analytics dashboards and insights with teams across the organization—including customer support, sales, marketing, and product development—ensures that everyone has a shared understanding of the customer experience. This alignment allows different teams to contribute to the chatbot’s improvement strategy; for example, marketing can help refine the bot’s tone of voice, while the product team can use insights from user questions to inform future product development.
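When comparing two variants in an A/B test, it helps to check whether the observed difference is larger than chance variation would explain. One common way to do this is a two-proportion z-test, sketched below using only the standard library. The test choice is an assumption on our part, not something the platform prescribes, and it presumes reasonably large samples in both groups.

```python
import math


def two_proportion_z_test(
    success_a: int, total_a: int, success_b: int, total_b: int
) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a, p_b = success_a / total_a, success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        return 0.0, 1.0  # identical all-or-nothing outcomes: no evidence of a difference
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution, via erfc.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, `two_proportion_z_test(350, 1000, 400, 1000)` compares a welcome message that engaged 350 of 1,000 users against a variant that engaged 400 of 1,000; a small p-value suggests the variant's higher rate is unlikely to be noise.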

Section 6: The Next Frontier: Future Trends in AI-Powered Website Support

The field of conversational AI is evolving at an accelerated pace, pushing the boundaries of what is possible in automated customer support. The chatbots of today, while powerful, are merely a stepping stone toward a future of more intelligent, integrated, and proactive digital experiences. Understanding the trajectory of this technology is crucial for businesses looking to build a sustainable and competitive advantage. The future is defined by a shift from reactive to proactive engagement, the rise of more human-like interaction modalities, and a redefinition of the website’s role in a brand’s overall AI strategy.

6.1 The Evolution from Reactive to Proactive Engagement

The most significant paradigm shift on the horizon is the move from reactive to proactive chatbots. For the most part, current chatbots wait for a user to initiate a conversation by asking a question or clicking a widget. The future of chatbot support lies in anticipating user needs and proactively initiating helpful interactions before the user even asks.

- How Proactive Engagement Works: This capability is powered by a combination of real-time behavioral monitoring and predictive analytics. The system tracks a user’s actions on the website—such as the pages they visit, the time they spend on each page, their mouse movements, and the items they add to their cart. By analyzing these data patterns, the AI can predict when a user might be encountering a problem or showing purchase intent. It then uses this prediction as a trigger to launch a relevant, contextual conversation.

- Proactive Use Cases:

- E-commerce Conversion: If a user has been lingering on the checkout page for several minutes or moves their cursor toward the “close tab” button (exit intent), a proactive bot can pop up with a message like, “Hi, it looks like you’re having trouble checking out. Can I help?” or offer a last-minute discount code to prevent cart abandonment.

- Targeted Support: If a user repeatedly navigates between a product page and a complex technical specification sheet, the bot can proactively offer to connect them with a technical specialist or provide a link to a detailed user guide, anticipating their confusion.

- Lead Nurturing: For a B2B website, if a visitor has downloaded a whitepaper and then browsed the pricing page, the bot could proactively engage them by asking if they would like to schedule a demo with a sales expert, striking while the user’s interest is highest.
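The three use cases above can be sketched as a small trigger evaluator. This is a minimal illustration, not a reference implementation: it uses fixed rules as a stand-in for the predictive model described earlier, and every name in it (`SessionSnapshot`, `choose_proactive_message`, the page labels, and the thresholds) is a hypothetical assumption chosen for the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SessionSnapshot:
    """Hypothetical summary of one visitor's behavior so far."""
    current_page: str
    seconds_on_page: float
    exit_intent: bool = False
    page_history: List[str] = field(default_factory=list)
    downloaded_whitepaper: bool = False


# Assumed thresholds; in practice these would be tuned against real analytics.
CHECKOUT_DWELL_SECONDS = 180
PRODUCT_SPEC_SWITCHES = 3


def count_switches(history: List[str], a: str, b: str) -> int:
    """Count consecutive page transitions between a and b, in either direction."""
    return sum(1 for prev, cur in zip(history, history[1:]) if {prev, cur} == {a, b})


def choose_proactive_message(s: SessionSnapshot) -> Optional[str]:
    """Return a proactive opening message, or None if the bot should stay silent."""
    # E-commerce conversion: long dwell on checkout, or exit intent while there.
    if s.current_page == "checkout" and (
        s.exit_intent or s.seconds_on_page >= CHECKOUT_DWELL_SECONDS
    ):
        return "Hi, it looks like you're having trouble checking out. Can I help?"
    # Targeted support: repeated back-and-forth between product and spec pages.
    if count_switches(s.page_history, "product", "specs") >= PRODUCT_SPEC_SWITCHES:
        return "Would you like to talk to a technical specialist or see the user guide?"
    # Lead nurturing: whitepaper download followed by a visit to pricing.
    if s.downloaded_whitepaper and s.current_page == "pricing":
        return "Would you like to schedule a demo with a sales expert?"
    return None
```

Returning `None` by default is a deliberate choice in this sketch: a proactive bot that interrupts without a clear signal is likely to feel intrusive, so silence is the fallback.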

6.2 The Rise of Voice, Multimodality, and Hyper-Personalization

Future chatbot interactions will become richer, more natural, and more deeply personalized, moving far beyond the text-based conversations that are common today.

- Voice-Enabled Chatbots: With the widespread adoption of voice search and smart home assistants, voice is becoming a primary user interface. Future website chatbots will increasingly be voice-enabled, allowing users to speak their queries naturally instead of typing them. Continuous improvements in speech-to-text accuracy and natural language understanding are making these voice-first interactions more reliable and seamless.

- Emotional Intelligence: AI models are rapidly improving their ability to recognize and respond to human emotion. Future chatbots will be able to detect not just the words a user says, but also the sentiment and emotion in their tone of voice or choice of words. This will allow the bot to respond with greater empathy—for example, adopting a more patient and reassuring tone with a frustrated user—which is critical for handling sensitive support situations and building stronger customer relationships.

- Multimodal Interactions: Conversations will break free from the confines of a single mode of communication. In a multimodal interaction, users and bots can exchange information using a combination of text, voice, images, and videos. A user might take a photo of a damaged product and send it to the bot to initiate a return. In the other direction, the bot might answer a technical question with an interactive video tutorial that walks the user through troubleshooting. This ability to communicate across different media will make problem-solving faster and more intuitive.

- Hyper-Personalization: As AI models become more powerful and their integration with enterprise data systems deepens, personalization will evolve into “hyper-personalization.” Websites and the chatbots on them will dynamically adapt their entire interface, content, and conversational style in real time, based on a deep, individual understanding of each user’s behavior, preferences, and history. This means two different users visiting the same website at the same time could have two completely different experiences, each tailored to maximize relevance and engagement.
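The tone adaptation described under Emotional Intelligence can be illustrated with a rough sketch. It assumes a simple text heuristic (cue words, all-caps words, and exclamation marks) in place of a trained sentiment or voice-emotion model; the cue list, the weights, the threshold, and the names `frustration_score` and `choose_tone` are all hypothetical.

```python
import re

# Hypothetical cue words; a production system would use a trained sentiment model.
FRUSTRATION_CUES = {"frustrated", "angry", "ridiculous", "useless", "terrible", "annoyed"}


def frustration_score(message: str) -> float:
    """Return a rough 0..1 frustration score from cue words, shouting, and '!' marks."""
    words = re.findall(r"[A-Za-z']+", message)
    if not words:
        return 0.0
    cue_hits = sum(1 for w in words if w.lower() in FRUSTRATION_CUES)
    shouting = sum(1 for w in words if len(w) > 2 and w.isupper())
    exclaims = message.count("!")
    # Assumed weights, chosen only for illustration.
    raw = 0.5 * cue_hits + 0.25 * shouting + 0.1 * exclaims
    return min(1.0, raw)


def choose_tone(message: str, threshold: float = 0.5) -> str:
    """Pick a response tone label based on the estimated frustration level."""
    if frustration_score(message) >= threshold:
        return "patient_reassuring"
    return "neutral"
```

For instance, `choose_tone("This is ridiculous, nothing works!!")` selects the patient, reassuring tone, while a plain question such as `"Where can I find my invoice?"` stays neutral. The tone label would then steer how the reply is phrased, not what information it contains.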

6.3 Long-Term Implications for Digital Customer Experience

These technological trends have profound long-term implications for the digital landscape and the role of the website itself. The strategic importance of a well-designed website chatbot is set to increase dramatically, as it becomes a central hub for a brand’s entire AI-driven ecosystem.

- Autonomous Development and Maintenance: AI is already beginning to automate coding and testing tasks in web development. In the future, AI could handle even larger portions of the development and maintenance lifecycle autonomously, from generating initial website designs based on a simple prompt to automatically detecting and fixing broken links or performance issues.

- The Evolving Role of the Human Agent: As AI chatbots become capable of handling an ever-increasing range of customer interactions, the role of human support agents will continue to evolve. Routine, informational, and transactional queries will be almost entirely automated. Human agents will become high-level specialists, focused on handling the most complex, emotionally charged, and high-value customer relationships that require a level of nuance and strategic thinking that AI cannot replicate. They will function as the final escalation point and the human face of the brand for its most important customers.

Conclusion

The journey from rudimentary rule-based scripts to sophisticated, AI-powered conversational agents marks a fundamental evolution in how businesses engage with their customers online. Designing and implementing an effective AI support chatbot is no longer a peripheral IT project but a core strategic initiative with the power to reduce operational costs, enhance customer satisfaction, and directly drive revenue growth.

This report has demonstrated that a successful implementation hinges on a holistic and structured approach. It begins with a clear strategic vision, where business objectives and user-focused use cases dictate the technology and design choices. The technological core, powered by advances in Natural Language Processing and Large Language Models, enables a level of conversational fluency and contextual understanding that was previously out of reach. However, this power is only fully realized through a disciplined development lifecycle that encompasses meticulous data preparation, user-centric conversation design, and, most critically, deep and robust integration with enterprise systems like CRM and helpdesk platforms. It is this integration that transforms a chatbot from a conversational novelty into a true engine of business process automation and personalization.

Furthermore, the deployment of a chatbot is not a final destination but the beginning of a cycle of continuous improvement. By establishing a rigorous framework of performance measurement and leveraging analytics to inform ongoing refinement, organizations can ensure their chatbot evolves in lockstep with customer needs and business goals. The selection of KPIs must be deliberately aligned with the chatbot’s strategic purpose to optimize for the right outcomes.