I. Defining the AI Expert: A Modern Synthesis of Skills and Vision

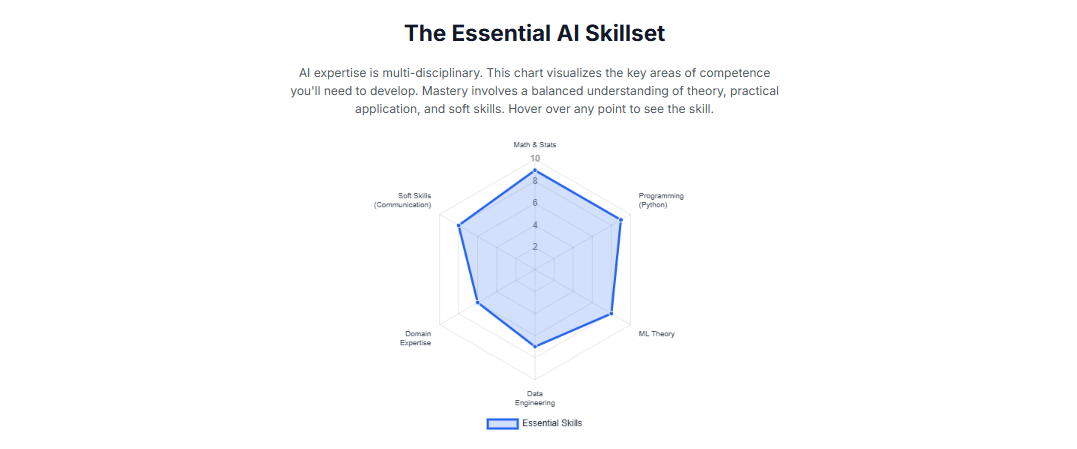

The pursuit of expertise in Artificial Intelligence (AI) requires a comprehensive understanding of what it means to be an expert in a field defined by its relentless evolution.

True mastery in AI today is not merely the sum of technical skills. It is a sophisticated synthesis of deep theoretical knowledge, practical implementation prowess, and strategic business acumen.

That synthesis also includes a commitment to ethical development and continuous learning. The modern AI expert is a multifaceted professional who can navigate the complexities of technology, business, and society.

The Evolving Definition of Expertise

The definition of an AI expert is a moving target, perpetually reshaped by the field’s rapid advancements. In its nascent stages, expertise was synonymous with the ability to construct algorithms from first principles.

Today, the landscape is profoundly different. The modern expert must embody a blend of competencies, acting as a computer scientist, a statistician, a systems architect, and a business strategist.

Expertise is now defined not only by the capacity to build and deploy AI systems, but also by the ability to conceptualize, strategize, and lead AI initiatives that deliver tangible, measurable value.

This evolution reflects a significant shift in where value is created. The commoditization of foundational models and development frameworks has elevated the expert from a pure technologist to a strategic leader.

The journey to expertise is no longer a linear progression of acquiring technical skills. It is a holistic development process aimed at building a robust, multi-dimensional capability set.

The Three Pillars of Modern AI Expertise

The contemporary AI expert stands upon three foundational pillars, each essential for navigating the complexities of the field and driving meaningful innovation.

Technical Mastery: This is the bedrock of expertise. It encompasses a profound proficiency in the foundational mathematics that govern AI, fluency in relevant programming languages, and a deep understanding of machine learning.

Strategic Application: This pillar represents the ability to translate technical capabilities into real-world impact. It involves identifying critical business problems and designing elegant and effective AI solutions.

It also means bridging the communication gap between technical teams and business stakeholders. This requires domain expertise, strong communication skills, and the ability to collaborate effectively.

Principled Leadership: This pillar distinguishes a true expert from a mere practitioner. It is characterized by a steadfast commitment to the ethical development and deployment of AI.

This ensures that systems are fair, transparent, and accountable. It also involves cultivating a mindset of continuous, lifelong learning to adapt to the field’s breakneck pace of change.

The Shift from “Builder” to “Architect”

A crucial transformation has occurred in the nature of AI expertise. The initial demand was for “builders”—individuals with the deep, specialized knowledge to create algorithms and machine learning models from scratch.

However, the maturation of the AI ecosystem has fundamentally altered this dynamic. The widespread availability of powerful, open-source libraries like TensorFlow and PyTorch has abstracted away much of the low-level coding.

Simultaneously, the integration of AI into core business functions across sectors like finance, healthcare, and manufacturing has created a new, more pressing demand.

Organizations no longer need just individuals who can build a model. They need leaders who can understand a complex business context, identify the right problem, and oversee its integration.

This shift from tool scarcity to tool abundance has forced a redefinition of the expert’s role. The primary value has moved up the stack from pure implementation to strategic design, oversight, and governance.

The modern AI expert is less a pure “builder” and more an “architect.” They must understand the foundational materials deeply, but their value lies in creating the blueprint.

II. The Educational Blueprint: Forging a Path Through Academia

A formal education serves as the cornerstone of an AI expert’s journey. It provides the structured, theoretical knowledge upon which all practical skills are built.

The path is no longer monolithic; a spectrum of options exists, from accelerated diplomas to terminal research degrees. The selection of an educational path is a critical strategic decision.

The Spectrum of Formal Education

The modern educational landscape offers a variety of pathways into the AI field, each catering to different career goals and timelines.

1-Year Diplomas: These programs offer a rapid, skills-focused entry point into the AI ecosystem. They provide a foundational understanding of machine learning, data analysis, and algorithm development.

Graduates are prepared for technical roles such as AI Technician, Junior Analyst, or Assistant Data Engineer.

3-Year Bachelor’s Degrees (BSc/BCA): For those seeking a more traditional academic foundation, Bachelor of Science (BSc) and Bachelor of Computer Applications (BCA) degrees in AI offer a strong starting point.

A BSc in AI typically emphasizes theory and mathematical foundations. A BCA in AI blends software development with AI applications. Both qualify graduates for entry-level positions like Data Analyst.

4-Year Bachelor of Technology (B.Tech): This professional degree provides an engineering-centric approach to AI. The curriculum integrates computer science, data engineering, and machine learning.

There is a strong emphasis on practical projects, model deployment, and ethical AI practices. Graduates are well-prepared for roles such as AI Engineer, Data Scientist, or Automation Specialist.

5-Year Integrated Programs (Bachelor’s + Master’s): For aspirants seeking deep specialization, integrated programs offer a comprehensive path.

These courses provide an in-depth understanding of advanced topics like robotics and intelligent systems design. They position graduates for senior technical leadership or future academic pursuits.

Undergraduate Foundations: Choosing Your Major

While a bachelor’s degree is typically the minimum requirement for most entry-level positions in AI, the specific choice of major is a pivotal decision.

Computer Science (CS) / Software Engineering: This remains the most direct and common path into AI. It provides a robust foundation in algorithms, data structures, and software engineering principles.

A CS degree offers the broadest optionality across technology roles and is a strong prerequisite for a career as a Machine Learning Engineer.

Data Science / Statistics / Applied Mathematics: These majors cultivate a deep modeling mindset, grounded in probability, linear algebra, and statistical learning.

This path is ideal for those aspiring to become Data Scientists, Research Engineers, or Quantitative Analysts, providing a strong foundation for research-oriented careers.

Interdisciplinary Fields (Cognitive Science, Philosophy/Ethics): These programs offer a unique, human-centric perspective that is increasingly valuable in product-focused AI roles.

A background in Cognitive Science or Linguistics is excellent preparation for specializing in Natural Language Processing (NLP). A Philosophy or Ethics major provides a strong foundation in logic.

To be competitive for technical roles, these paths must be supplemented with a strong minor or significant coursework in computer science and mathematics.

Domain-Specific Degrees + AI Overlay: An undergraduate degree in a specific domain such as Biology or Finance, combined with a minor in AI, creates a powerful, differentiated skillset.

This path is ideal for applied machine learning roles where deep business context and domain-specific knowledge are critical for creating impactful solutions.

Graduate-Level Specialization: The Expert’s Accelerator

While a bachelor’s degree provides a solid entry point, a master’s degree or a PhD often serves as the catalyst that transforms a competent practitioner into a recognized expert.

Advanced degrees open doors to specialized careers, leadership positions, and roles at the forefront of AI research.

Master’s Degree: A Master of Science in a field like Applied AI or Machine Learning provides advanced theoretical grounding and practical skills, in some programs taught partly by industry practitioners.

Specialized master’s programs are now widely available in areas such as Robotics, Computational Linguistics, and Data Science, allowing for deep concentration in a chosen subfield.

PhD: A Doctor of Philosophy is the preferred, and often required, path for those aspiring to be AI Research Scientists. This terminal degree is centered on conducting foundational research.

The PhD journey involves a deep commitment to academic rigor, culminating in the publication of original research in top-tier, peer-reviewed conferences and journals.

Leading Institutions

The choice of institution can be as important as the choice of degree. Top-tier universities are distinguished by their rigorous curricula, world-class faculty, and strong ties to the technology industry.

Institutions such as MIT, Carnegie Mellon University, Stanford University, and the University of California, Berkeley, are consistently ranked among the best for AI.

These universities provide an immersive environment where students are exposed to both the theoretical foundations and practical applications of AI.

III. The Arsenal of an Expert: Mastering Core Technical and Analytical Competencies

Achieving expertise in Artificial Intelligence necessitates the mastery of a vast and diverse arsenal of skills. This ranges from immutable mathematical principles to evolving programming frameworks.

Crucially, this technical prowess must be complemented by a suite of soft skills that enable collaboration, strategic thinking, and leadership.

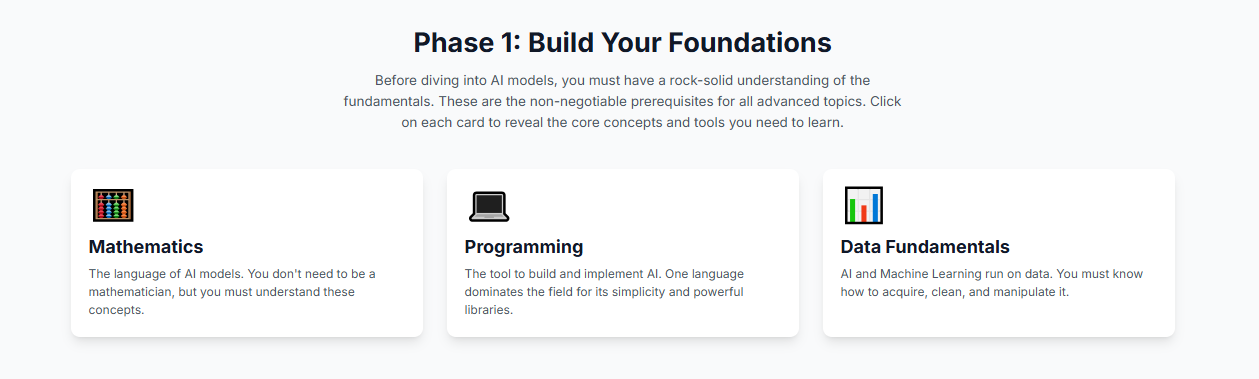

The Mathematical Bedrock

Mathematics is the fundamental language of AI. A deep, intuitive understanding of its core concepts is non-negotiable for any aspiring expert.

Linear Algebra: This is the mathematical foundation for representing and manipulating data in machine learning. AI does not see images or text; it sees arrays of numbers.

Essential concepts include scalars, vectors, matrices, and tensors. These are the fundamental data structures used to represent everything from a single pixel to an entire dataset.

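Dot products and matrix multiplication are the core operations for combining and transforming this data, while eigenvalues and eigenvectors underpin dimensionality reduction techniques such as Principal Component Analysis (PCA) and help reveal the underlying structure of data.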
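
As a minimal sketch of these ideas, the following pure-Python example treats a tiny grayscale image as a grid of numbers, flattens it into a vector, and applies a dot product and a matrix-vector product. It also estimates the dominant eigenvalue of a small symmetric matrix with power iteration, a simple technique chosen here purely for illustration. The pixel values, the `weights` vector, and the `covariance` matrix are hypothetical, and in practice a library such as NumPy would handle these operations.

```python
import math

# A 2x2 grayscale "image": each entry is a pixel intensity in [0, 1].
image = [[0.0, 0.5],
         [1.0, 0.25]]

def flatten(matrix):
    """Turn a matrix (list of rows) into a single vector."""
    return [value for row in matrix for value in row]

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def matvec(matrix, vector):
    """Matrix-vector product: one dot product per row."""
    return [dot(row, vector) for row in matrix]

def dominant_eigenpair(matrix, steps=100):
    """Power iteration: estimate the largest-magnitude eigenvalue and its eigenvector."""
    vector = [1.0] * len(matrix)
    for _ in range(steps):
        product = matvec(matrix, vector)
        norm = math.sqrt(dot(product, product))
        vector = [x / norm for x in product]
    eigenvalue = dot(vector, matvec(matrix, vector))  # Rayleigh quotient
    return eigenvalue, vector

pixels = flatten(image)             # the image as a vector of four numbers
weights = [1.0, 2.0, 3.0, 4.0]      # hypothetical model weights
score = dot(pixels, weights)        # weighted sum of pixel intensities

covariance = [[2.0, 1.0],
              [1.0, 2.0]]           # hypothetical symmetric matrix
eigenvalue, eigenvector = dominant_eigenpair(covariance)
```

The dominant eigenvector gives the direction along which this matrix stretches vectors the most, which is the intuition behind keeping only the leading directions in dimensionality reduction.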

Calculus: This is the engine of learning and optimization in AI. Models learn by iteratively minimizing an error function, a process governed by calculus.

Derivatives and gradients measure the rate of change of the error function. The gradient points in the direction of the steepest increase in error, so models learn by moving in the opposite direction.

The chain rule is the mathematical basis for the backpropagation algorithm used to train deep neural networks. Multivariable calculus is essential for optimizing functions with millions of parameters.
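
The learning loop described above can be sketched in a few lines. This example assumes a hypothetical one-parameter model, `prediction = w * x`, with a squared-error loss. It applies the chain rule by hand to obtain the gradient and then steps against that gradient. The data point, learning rate, and step count are illustrative choices, not recommendations.

```python
def predict(w, x):
    """A one-parameter model: prediction = w * x."""
    return w * x

def loss(w, x, y):
    """Squared error between the prediction and the target y."""
    return (predict(w, x) - y) ** 2

def loss_gradient(w, x, y):
    """Chain rule: d(loss)/dw = d(loss)/d(prediction) * d(prediction)/dw."""
    d_loss_d_prediction = 2.0 * (predict(w, x) - y)
    d_prediction_d_w = x
    return d_loss_d_prediction * d_prediction_d_w

def gradient_descent(w, x, y, learning_rate=0.05, steps=50):
    """Repeatedly step against the gradient to reduce the loss."""
    for _ in range(steps):
        w -= learning_rate * loss_gradient(w, x, y)
    return w

# Hypothetical data point: the ideal weight is y / x = 3.0.
x, y = 2.0, 6.0
w_learned = gradient_descent(w=0.0, x=x, y=y)

# Sanity check: the analytic gradient should match a finite-difference estimate.
h = 1e-6
numeric_gradient = (loss(1.0 + h, x, y) - loss(1.0 - h, x, y)) / (2 * h)
```

Backpropagation in a deep network applies the same chain-rule step layer by layer, and automatic differentiation in frameworks such as PyTorch or TensorFlow removes the need to derive the gradients by hand.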

Probability & Statistics: This is the framework for reasoning under uncertainty, quantifying confidence, and evaluating model performance. AI models make predictions, which are inherently probabilistic.

Essential topics include basic probability theory, Bayes’ Theorem, and common probability distributions (like Gaussian or Binomial).

Maximum Likelihood Estimation (MLE) is a core statistical method for estimating a model’s parameters: it selects the parameter values under which the observed data are most probable.
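
To make Bayes’ Theorem and MLE concrete, here is a small sketch under stated assumptions. The screening-test numbers and the coin flips are hypothetical, and the Bernoulli model is chosen because its maximum likelihood estimate has a simple closed form (the observed fraction of successes).

```python
import math

def bayes_posterior(prior, true_positive_rate, false_positive_rate):
    """P(condition | positive test) via Bayes' Theorem."""
    evidence = true_positive_rate * prior + false_positive_rate * (1.0 - prior)
    return true_positive_rate * prior / evidence

def bernoulli_log_likelihood(p, outcomes):
    """Log-probability of a sequence of 0/1 outcomes under a Bernoulli(p) model."""
    return sum(math.log(p if outcome == 1 else 1.0 - p) for outcome in outcomes)

def bernoulli_mle(outcomes):
    """For a Bernoulli model, the MLE of p is the observed fraction of ones."""
    return sum(outcomes) / len(outcomes)

# Hypothetical screening test: 1% prevalence, 90% sensitivity, 5% false positives.
posterior = bayes_posterior(prior=0.01, true_positive_rate=0.90, false_positive_rate=0.05)

# Hypothetical coin flips (1 = heads): six heads out of eight.
flips = [1, 0, 1, 1, 0, 1, 1, 1]
p_hat = bernoulli_mle(flips)
```

Even with a fairly accurate test, the posterior stays far below the test’s sensitivity because the condition is rare. Evaluating `bernoulli_log_likelihood` at `p_hat` and at nearby values shows that no other value of `p` makes the observed flips more probable.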

Optimization and Information Theory: These advanced topics are crucial for researchers and experts developing novel algorithms.

Optimization includes a deep understanding of gradient-based algorithms like Stochastic Gradient Descent (SGD) and the properties of convex functions.

Information Theory concepts like Entropy (a measure of the uncertainty in a probability distribution) and Cross-Entropy (a measure of how far a predicted distribution diverges from the true one) are fundamental to the loss functions used to train classification models.
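
The entropy and cross-entropy described above can be computed directly. The sketch below uses natural logarithms and hypothetical three-class predictions; it assumes the predicted distribution assigns non-zero probability wherever the true distribution does.

```python
import math

def entropy(p):
    """Shannon entropy of a discrete distribution p, in nats."""
    return -sum(pi * math.log(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy of predicted distribution q relative to true distribution p."""
    return -sum(pi * math.log(qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical classification example: the true class is the first of three.
true_label = [1.0, 0.0, 0.0]
confident_prediction = [0.7, 0.2, 0.1]
wrong_prediction = [0.1, 0.2, 0.7]

loss_confident = cross_entropy(true_label, confident_prediction)
loss_wrong = cross_entropy(true_label, wrong_prediction)
fair_coin_entropy = entropy([0.5, 0.5])
```

The prediction that puts more probability on the correct class receives the lower loss, which is why minimizing cross-entropy pushes a classifier toward the true labels.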

Programming and Framework Proficiency

Theoretical knowledge must be paired with the practical ability to implement ideas in code.

Core Programming Languages: Python is the undisputed lingua franca of AI, prized for its simplicity and the vast ecosystem of libraries like NumPy and Pandas.

R remains a powerful tool for specialized statistical analysis and data visualization. For performance-intensive applications, proficiency in C++ or Java is highly valuable.

AI/ML Frameworks: Mastery of at least one major deep learning framework is mandatory. TensorFlow and PyTorch are the industry standards for building, training, and deploying neural networks.

Familiarity with libraries like Scikit-learn for classical machine learning algorithms (e.g., decision trees, support vector machines) is also essential for a well-rounded skillset.

Data Management and Big Data Technologies: AI models are data-hungry. Proficiency in SQL is a fundamental skill for extracting and managing data from relational databases.

For truly massive datasets, knowledge of Big Data frameworks like Apache Spark and Hadoop is required. These tools enable distributed data processing across clusters of computers.
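
As a small illustration of the SQL skills mentioned above, the following sketch uses Python’s built-in `sqlite3` module with an in-memory database. The `transactions` table, its columns, and its rows are hypothetical; a production system would typically connect to a database server instead.

```python
import sqlite3

# An in-memory database keeps the example self-contained.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE transactions (customer TEXT, amount REAL)")

# Parameterized inserts avoid building SQL strings by hand.
connection.executemany(
    "INSERT INTO transactions (customer, amount) VALUES (?, ?)",
    [("alice", 20.0), ("bob", 5.0), ("alice", 30.0)],
)

# Aggregate spending per customer, largest total first.
rows = connection.execute(
    "SELECT customer, SUM(amount) AS total "
    "FROM transactions GROUP BY customer ORDER BY total DESC"
).fetchall()
connection.close()
```

Aggregations like this one are a common first step in turning raw records into features for a model.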

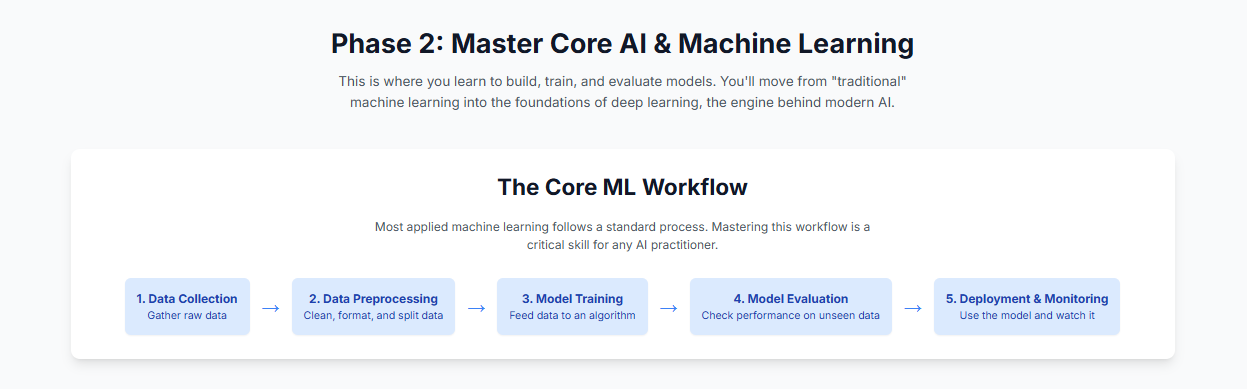

Core AI/ML Technical Disciplines

Beyond foundational skills, an expert must have deep knowledge in the core technical areas of AI.

Machine Learning: This is the central pillar. A comprehensive understanding of the three main paradigms is required: supervised, unsupervised, and reinforcement learning.

Deep Learning: This subfield focuses on artificial neural networks. Expertise in various architectures is critical for tackling complex, unstructured data.

This includes Convolutional Neural Networks (CNNs) for image and video, and Recurrent Neural Networks (RNNs) and Transformers for sequential data like text.

Data Analysis and Visualization: Before any model can be built, data must be understood. This involves the ability to clean, process, explore, and analyze datasets to extract meaningful insights.

Proficiency with visualization tools like Tableau or Power BI, and Python libraries such as Matplotlib and Seaborn, is key to communicating these insights effectively.

Cloud Computing and MLOps: Modern AI development is inextricably linked to the cloud. Expertise in using platforms like Amazon Web Services (AWS), Microsoft Azure, or Google Cloud Platform (GCP) is no longer optional.

This area also includes MLOps (Machine Learning Operations), a set of practices for managing the end-to-end lifecycle of a machine learning model, from data preparation to deployment and monitoring.

The Differentiating Factor: Essential Soft Skills

In the modern AI landscape, technical skills are necessary but not sufficient for achieving true expertise. Soft skills are the differentiating factor.

Communication and Collaboration: AI is a team sport. Experts must be able to articulate highly technical concepts to non-expert audiences, including product managers, executives, and clients.

Problem-Solving and Critical Thinking: Expertise lies not just in applying known solutions, but in creatively designing novel approaches for unprecedented challenges.

Industry Knowledge: An AI model is only as valuable as the problem it solves. Deep knowledge of a specific industry—be it finance, healthcare, or logistics—allows an expert to identify high-value problems.

Continuous Learning and Adaptability: The single most defining characteristic of an AI expert is a relentless commitment to lifelong learning. The field evolves at an astonishing rate.

An expert must cultivate the habit of staying current by reading research, attending conferences, and constantly experimenting with new technologies.

IV. From Theory to Reality: Gaining Essential Practical Experience

Academic knowledge provides the “what” and “why” of Artificial Intelligence, but practical experience is where true mastery is forged.

It is the crucible in which theoretical concepts are tested, refined, and transformed into tangible skills. Building a robust portfolio of evidence is the crucial transition from knowing to doing.

Structured Entry Points: Internships and Early-Career Roles

For students and recent graduates, structured work experiences are the most direct way to apply classroom learning to real-world business problems.

Internships: Roles such as AI Intern, Machine Learning Intern, or Research Assistant offer invaluable hands-on experience. Interns typically assist senior team members in developing models.

These placements provide exposure to industry best practices, large-scale datasets, and the collaborative dynamics of a professional AI team.

Entry-Level Roles: Positions like Junior Data Analyst or AI Support Specialist serve as excellent entry points. A Junior Data Analyst focuses on analyzing datasets to uncover patterns.

An AI Support Specialist role builds deep product knowledge and troubleshooting skills, providing a practical understanding of how AI systems function and fail in the real world.

The Portfolio Engine: Personal and Open-Source Projects

A compelling portfolio of personal and open-source projects is the ultimate demonstration of an individual’s skills, initiative, and passion.

Personal Projects: Creating projects from scratch showcases end-to-end understanding, from problem formulation and data collection to model development and deployment.

Beginner Projects: It is advisable to start with well-defined problems and publicly available datasets. Classic projects include building a Spam Email Detector or a Sentiment Analysis model.

These projects build fundamental skills in data preprocessing, model training, and performance evaluation.
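
As one possible starting point for the spam detector project, here is a minimal sketch of a Naive Bayes text classifier written in pure Python. The choice of Naive Bayes with Laplace smoothing is an assumption made for illustration, and the training and test messages are hypothetical; a real project would use a public dataset and, most likely, a library such as Scikit-learn.

```python
import math
from collections import Counter

def train_naive_bayes(examples):
    """examples: list of (text, label) pairs with label 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())  # simple preprocessing
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocabulary

def predict(model, text):
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts, vocabulary = model
    total = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        score = math.log(label_counts[label] / total)  # log prior
        denominator = sum(word_counts[label].values()) + len(vocabulary)
        for word in text.lower().split():
            if word in vocabulary:  # ignore words never seen in training
                # Laplace (add-one) smoothing avoids log(0) for unseen pairs.
                score += math.log((word_counts[label][word] + 1) / denominator)
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical training and test messages.
training = [
    ("win a free prize now", "spam"),
    ("free cash offer", "spam"),
    ("meeting at noon", "ham"),
    ("lunch at noon tomorrow", "ham"),
]
testing = [("free prize offer", "spam"), ("lunch at noon", "ham")]

model = train_naive_bayes(training)
correct = sum(predict(model, text) == label for text, label in testing)
accuracy = correct / len(testing)
```

The three stages named above appear in miniature: lowercasing and splitting is the preprocessing, `train_naive_bayes` is the training, and the accuracy calculation is the evaluation.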

Intermediate Projects: As skills develop, one can tackle more complex problems. Examples include building a Resume Parser or a Fake News Detector using advanced NLP models.

An Object Detection System that identifies objects in images is another strong intermediate project. These demonstrate proficiency in more advanced techniques.

Open-Source Contributions: Contributing to established open-source AI projects on platforms like GitHub is a powerful method for honing skills in a real-world, collaborative environment.

Getting Started: Newcomers can begin by improving documentation, fixing typos, or tackling issues explicitly labeled as “good first issue” or “beginner-friendly.”

Significant Benefits: Open-source contributions are typically peer-reviewed, providing valuable feedback and a public testament to code quality.

This process offers the opportunity to collaborate with and learn from highly experienced developers. It also demonstrates the ability to work within a large, existing codebase.

The Competitive Arena: Data Science Competitions

Data science competitions provide a high-intensity environment for testing and sharpening skills against a global community of peers.

Leading Platforms: Kaggle is the most prominent platform, hosting a vast array of competitions. Other platforms include DrivenData, Topcoder, and AIcrowd.

Key Advantages: Competitions expose participants to real-world problems and datasets, which are often messy and imperfect. They foster an environment of rapid learning.

Analyzing the solutions of top-ranking competitors is an education in itself. A strong performance in a competition is a clear signal of technical proficiency to employers.

The “Experience Flywheel”: A Synergistic Approach

It is a misconception to view these avenues for gaining experience—internships, projects, and competitions—as separate paths. They form a powerful, self-reinforcing “Experience Flywheel.”

The process begins with a strong personal project. This creates a compelling portfolio piece that makes a resume stand out for competitive internship applications.

The skills gained during an internship, such as working with large-scale datasets, provide the expertise needed to effectively tackle a complex Kaggle competition.

A high ranking in a prestigious Kaggle competition serves as a powerful public credential. This can attract the attention of maintainers of major open-source projects.

Finally, making a significant contribution to a widely used open-source library is a definitive demonstration of advanced technical skill and collaborative ability.

This level of proven expertise is highly attractive to hiring managers for top-tier full-time roles and admissions committees for elite PhD programs.

V. Charting Your Specialization: Deep Dives into AI’s Core Disciplines

General knowledge in AI provides a foundation, but true expertise is cultivated through a deep, focused specialization in one of its core subfields.

Mastery requires moving beyond a surface-level understanding to grapple with the core problems and research frontiers that define a particular domain.

Natural Language Processing (NLP)

NLP is the subfield of AI dedicated to enabling computers to understand, interpret, generate, and interact with human language in useful ways.

Core Tasks and Problems: The fundamental challenges in NLP include machine translation, sentiment analysis, and named entity recognition (NER).

Text classification, such as spam detection, and question answering systems are also core tasks.

Key Techniques: Traditional methods include tokenization and vectorization methods like Bag-of-Words (BoW) and TF-IDF.

Contemporary NLP is dominated by deep learning. The transformer has become the dominant architecture, forming the basis for Large Language Models (LLMs) like BERT.
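
The traditional vectorization methods mentioned above can be sketched in pure Python. This example uses whitespace tokenization and one common TF-IDF variant (term frequency multiplied by the logarithm of the inverse document frequency); libraries apply different smoothing and normalization, so their numbers will differ. The three documents are hypothetical.

```python
import math
from collections import Counter

def tokenize(text):
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()

def bag_of_words(tokens, vocabulary):
    """Count how often each vocabulary word appears in the token list."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]

def tf_idf(documents):
    """Return the vocabulary and one TF-IDF vector per document."""
    tokenized = [tokenize(document) for document in documents]
    vocabulary = sorted({word for tokens in tokenized for word in tokens})
    n_documents = len(tokenized)
    idf = {
        word: math.log(n_documents / sum(1 for tokens in tokenized if word in tokens))
        for word in vocabulary
    }
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append([counts[word] / len(tokens) * idf[word] for word in vocabulary])
    return vocabulary, vectors

# Hypothetical mini-corpus.
documents = ["free prize now", "meeting now", "free free offer"]
vocabulary, vectors = tf_idf(documents)
counts_for_third = bag_of_words(tokenize(documents[2]), vocabulary)
```

Words that appear in many documents receive a lower weight than words concentrated in a few, which is the main refinement TF-IDF adds over raw Bag-of-Words counts.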

Research Frontiers: The leading edge of NLP research is focused on several key challenges.

One major area is developing smaller, more computationally efficient models that can perform on par with massive LLMs.

Researchers are actively investigating the societal biases (e.g., gender, racial) encoded in LLMs and developing methods to mitigate them.

Another focus is on creating effective NLP tools for the thousands of languages that lack the massive datasets available for English.

Interpretability and explainability are critical challenges, moving beyond “black box” models to understand why an LLM makes a particular prediction.

Ensuring that the outputs of generative models are factually accurate and grounded in source material is a major ongoing problem, focused on reducing “hallucinations.”

Computer Vision (CV)

Computer Vision is the field of AI that trains computers to “see,” interpret, and understand information from digital images and videos.

Core Tasks and Problems: The primary tasks in computer vision provide a hierarchical understanding of visual scenes.

Image Classification is the most basic task, assigning a single label to an entire image (e.g., “cat,” “dog,” “car”).

Object Detection is more complex, identifying what objects are in an image and where they are located by drawing bounding boxes around them.

Image Segmentation is the most granular task, assigning each individual pixel in an image to a category (e.g., “road,” “sky,” “person”).

Key Techniques: Deep learning has revolutionized computer vision. The cornerstone is the Convolutional Neural Network (CNN), an architecture specifically designed to process grid-like data.

Popular CNN-based architectures include ResNet for classification, YOLO (You Only Look Once) for object detection, and U-Net for segmentation.
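
The core operation inside a CNN layer can be illustrated with a short sketch. The function below slides a small kernel over a grid of pixel values without padding or stride; like most deep learning frameworks, it computes a cross-correlation (the kernel is not flipped). The image and the edge-detecting kernel are hypothetical, and in a real CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum elementwise products at each position."""
    kernel_height, kernel_width = len(kernel), len(kernel[0])
    output_height = len(image) - kernel_height + 1
    output_width = len(image[0]) - kernel_width + 1
    return [
        [
            sum(
                image[row + i][col + j] * kernel[i][j]
                for i in range(kernel_height)
                for j in range(kernel_width)
            )
            for col in range(output_width)
        ]
        for row in range(output_height)
    ]

# Hypothetical 3x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# A 1x2 kernel that responds to a left-to-right increase in brightness.
edge_kernel = [[-1, 1]]

feature_map = conv2d(image, edge_kernel)
```

The resulting feature map is non-zero only in the column where the brightness changes, showing how a single small filter can detect a local pattern anywhere in the grid.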

Research Frontiers: The computer vision community is pushing the boundaries in several exciting directions.

Generative models are creating highly realistic images, videos, and 3D content from text prompts, using techniques like GANs and Diffusion Models.

3D Vision and Scene Reconstruction is moving beyond 2D images to reconstruct dynamic 3D scenes, enabling applications in virtual and augmented reality (VR/AR).

Open-World and Continual Learning involves designing models that can operate in dynamic environments and adapt to novel, previously unseen object categories.

Multimodal Learning integrates visual information with other data modalities, most notably text. Vision-Language Models (VLMs) are a major area of research.

Efficient Vision focuses on developing lightweight models that can perform complex vision tasks in real-time on resource-constrained devices like mobile phones.

Reinforcement Learning (RL)

Reinforcement Learning is a paradigm of machine learning where an agent learns to make a sequence of optimal decisions by interacting with an environment.

The agent receives feedback in the form of rewards or penalties for its actions.

Core Concepts: The RL framework is formally modeled as a Markov Decision Process (MDP). It consists of an agent, an environment, states, actions, and a reward function.

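The agent’s goal is to learn a policy (a strategy) that maximizes its cumulative reward over time. A central challenge is the exploration-exploitation trade-off: the agent must balance trying unfamiliar actions that might yield better rewards against repeating actions it already knows to be rewarding.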

Key Algorithms: RL algorithms can be broadly categorized into value-based methods (like Q-learning) and policy-based methods (like REINFORCE).

Actor-Critic methods are hybrids that combine both approaches. Deep Reinforcement Learning (DRL) combines these algorithms with deep neural networks.
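
To ground the value-based family, here is a minimal sketch of tabular Q-learning with an epsilon-greedy policy. The environment is a hypothetical four-state corridor in which the agent starts at the left end and is rewarded only for reaching the right end; the learning rate, discount factor, exploration rate, and episode count are illustrative assumptions.

```python
import random

N_STATES = 4        # states 0..3; state 3 is the goal (terminal)
ACTIONS = (0, 1)    # 0 = move left, 1 = move right

def step(state, action):
    """Deterministic toy environment: reward 1.0 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning; returns a table q[state][action]."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                      # explore
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])  # exploit
            next_state, reward, done = step(state, action)
            # Bootstrapped target: reward plus discounted best next value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = train()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

After training, the greedy policy chooses “move right” in every non-terminal state. Deep Reinforcement Learning replaces the table `q` with a neural network so that the same idea scales to state spaces too large to enumerate.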

Research Frontiers: The RL research community is focused on making agents more capable and efficient.

Poor sample efficiency is a major limitation, as RL typically requires vast amounts of interaction with the environment. Research in model-based RL and offline RL aims to address this.

For complex, multi-step tasks such as those in robotics, researchers are developing hierarchical RL methods, in which the agent learns a set of basic “skills” and then composes them to solve larger problems.

Generalization and Transfer Learning is a key goal, aiming to train agents that can generalize their knowledge to new, unseen tasks.

Safety and Alignment is a critical area, ensuring that powerful RL agents have behavior that is safe, robust, and aligned with complex human values.

AI Ethics and Governance

AI Ethics is a vital, cross-cutting discipline focused on ensuring that artificial intelligence systems are designed, developed, and deployed in a responsible, fair, and beneficial way.

Core Principles: A consensus has emerged around a set of core principles that should guide AI development.

Fairness and Non-Discrimination: Ensuring that AI systems do not perpetuate or amplify existing societal biases, particularly against marginalized groups.

Transparency and Explainability: The ability to understand and interpret the decisions made by AI systems. Stakeholders should be able to comprehend an AI’s strengths and limitations.

Accountability: Establishing clear lines of responsibility and governance structures for the outcomes of AI systems.

Privacy and Data Protection: Respecting user privacy and ensuring that personal data is handled securely and in compliance with regulations.

Reliability, Safety, and Security: Building robust systems that perform reliably and are secure against malicious attacks.

Key Problems: The field grapples with numerous challenges, including algorithmic bias in hiring, the potential for mass surveillance, and the lack of accountability when autonomous systems cause harm.

Research Frontiers: The AI ethics community is moving from abstract principles to concrete solutions.

Practical governance frameworks, such as the EU’s AI Act, are being developed and studied to determine the most effective models for societal oversight.

Rigorous methods are being developed to audit large language models for fairness, stereotypes, and potential to cause harm.

The “Alignment Problem” is moving beyond a technical challenge to a socio-political one, investigating whose values AI systems should be aligned with.

A growing area of inquiry analyzes how AI shifts power within society and explores mechanisms for ensuring democratic legitimacy and public accountability.

VI. The Professional Trajectory: Navigating AI Career Paths and Advancement

The culmination of education, skills, and experience is a professional career where expertise is applied and refined.

The AI landscape offers a variety of expert-level roles, each with distinct responsibilities and career trajectories. Understanding these paths is crucial for long-term success.

Analysis of Key Roles

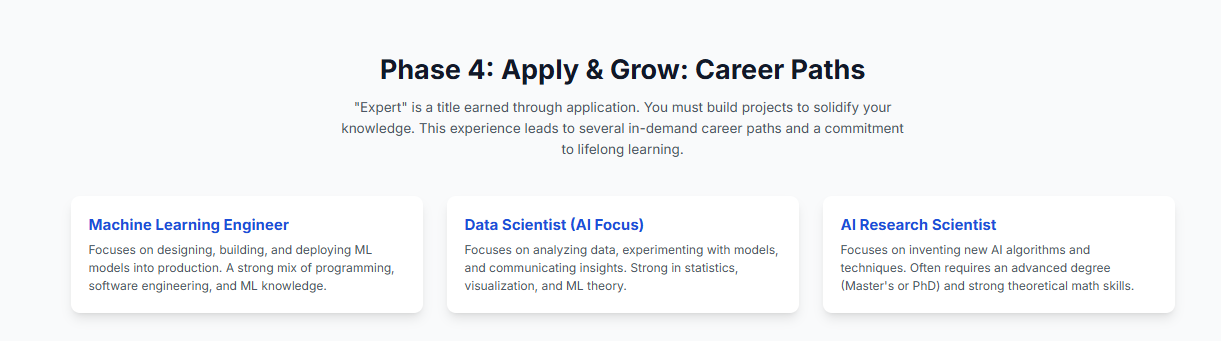

AI Research Scientist: This role is dedicated to pushing the theoretical frontiers of artificial intelligence. The primary objective is to conduct cutting-edge research and develop novel algorithms.

Their work is often foundational, involving experimentation and data analysis. A key responsibility is the dissemination of findings through publications in top-tier academic conferences and journals.

This career path demands deep theoretical knowledge, exceptional mathematical skills, and typically requires a PhD in a relevant field.

Machine Learning (ML) Engineer: The ML Engineer serves as the critical bridge between theoretical research and real-world application.

Their primary focus is on designing, building, testing, and deploying robust, scalable, and reliable machine learning systems in production environments.

Responsibilities include data preprocessing, model training, creating data pipelines, and managing the end-to-end lifecycle of ML models using MLOps best practices.

This role requires a strong foundation in both software engineering and machine learning, with proficiency in Python, cloud infrastructure, and ML frameworks.

AI Consultant: The AI Consultant acts as a strategic advisor, helping organizations leverage artificial intelligence to solve business problems and achieve strategic objectives.

Their responsibilities include assessing client needs, identifying opportunities for AI integration, formulating AI strategies, and guiding the implementation of AI solutions.

This role requires a unique blend of deep technical expertise, strong business acumen, and excellent communication and stakeholder management skills.

From Practitioner to Expert: The Advancement Roadmap

Advancing from a competent practitioner to a recognized expert is a deliberate process. It involves moving beyond technical execution to strategic influence and thought leadership.

Deepen Specialization: The first step is to transition from a generalist to a recognized authority in a specific niche. This could be a technical subfield or an application domain.

Cultivate Thought Leadership: Expertise must be visible to be recognized. This involves activities that shape the conversation in your field.

Publishing research, speaking at conferences, writing technical blogs, and contributing to open-source projects are all hallmarks of a thought leader.

Drive Strategic Impact: The transition to expert status is marked by a shift from solving assigned technical problems to identifying and defining the problems that need to be solved.

This requires taking on leadership responsibilities and influencing product or business strategy.

Pursue Continuous Education and Certification: The AI field’s rapid evolution means that learning never stops. Pursuing advanced, industry-recognized certifications can validate expertise.

Credentials such as the IBM AI Engineering Professional Certificate or AWS Certified AI Practitioner signal a commitment to professional development.

VII. The Lifelong Pursuit of Expertise: A Commitment to Continuous Evolution

The journey to becoming an Artificial Intelligence expert is not a finite path with a final destination. It is a continuous, dynamic process of learning, adaptation, and contribution.

In a field characterized by an exponential rate of change, expertise is not a static credential. It is a state of being that must be perpetually maintained and renewed.

Embracing the Pace of Change

The AI landscape is marked by frequent, paradigm-shifting breakthroughs. The state-of-the-art techniques of today often become the standard tools of tomorrow.

This relentless pace means that knowledge and skills have a short half-life. An expert mindset must be one of perpetual curiosity and a proactive embrace of change.

The assumption that one has “arrived” at expertise is the first step toward obsolescence.

A Framework for Staying Current

To maintain a position at the forefront of the field, an expert must adopt a structured and disciplined approach to continuous learning.

Engage with the Primary Research Community: The pulse of the AI field is its academic research. Actively reading and evaluating papers from major conferences is essential.

This practice provides direct insight into the latest algorithmic innovations and theoretical advancements before they become mainstream.

Maintain Hands-On Experimentation: Theoretical knowledge must be paired with practical application. A commitment to hands-on experimentation with new tools and frameworks is crucial.

This could involve replicating the results of a new research paper or fine-tuning a newly released open-source model.

Network, Collaborate, and Teach: Expertise is sharpened and amplified through interaction with other experts. Actively participating in workshops and professional meetups is key.

Collaborating on open-source projects or mentoring junior colleagues forces one to articulate complex ideas clearly, reinforcing one’s own understanding.

The Final Synthesis

True and lasting expertise in AI is the culmination of the entire journey detailed in this guide. It begins with a strong theoretical foundation from a formal education.

It is then built upon by mastering a deep arsenal of mathematical, programming, and analytical skills. This knowledge is consolidated through a portfolio of proven practical experience.

From this broad base, the path narrows into a deep specialization, where one can contribute original work. This expertise then finds its expression in a successful professional trajectory.

However, all these stages are merely the prerequisite. The final, essential element that binds them together is the relentless, lifelong pursuit of knowledge.

The path to becoming an AI expert is demanding, but the reward is the profound capability to architect the future of technology and help shape the future of society itself.