As we step into 2026, the global business landscape is witnessing a fundamental structural shift in Artificial Intelligence.

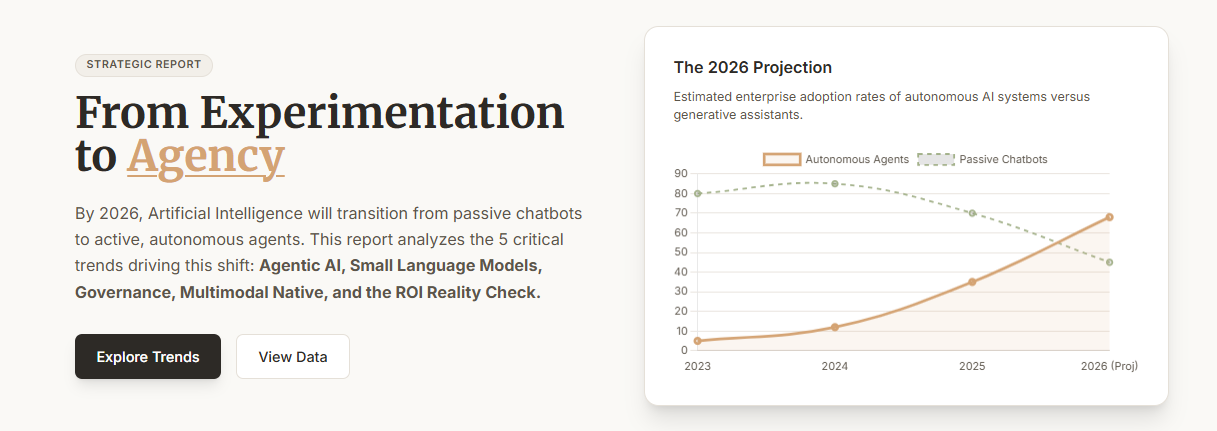

We have officially transitioned from a period of unbridled experimentation to a phase of rigorous industrialization.

The “wow factor” of 2023 and 2024, defined by creative content generation and pilot programs, has faded.

In its place, a “hard hat” era has emerged, prioritizing operational resilience and measurable return on investment (ROI).

The narrative is no longer about what AI can create, such as text or images, but about what it can do.

This report analyzes the rise of Agentic AI, the economic shift toward Small Language Models (SLMs), and the critical regulatory frameworks defining this new era.

The Agentic Revolution: From Chatbots to Autonomous Action

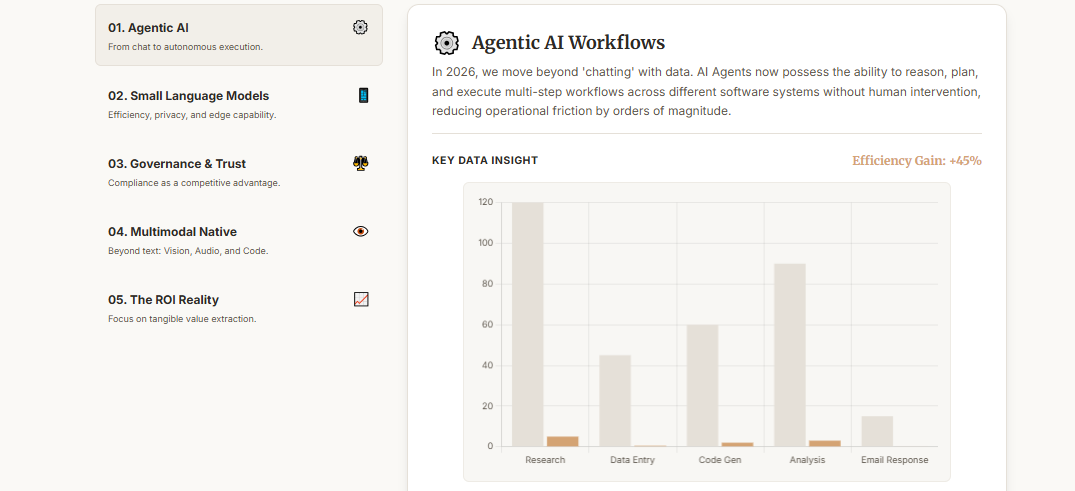

The most transformative trend of 2026 is the evolution of AI from a passive tool to an active agent.

Agentic AI represents a massive leap in capability, moving beyond answering static prompts toward goal-oriented systems that act on their own.

These systems possess the reasoning capabilities necessary to break down high-level objectives into executable sub-tasks.

For example, instead of just drafting an email, an agent can now “optimize the supply chain for Q3” by actively interacting with ERP systems.
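To make “breaking down an objective into executable sub-tasks” concrete, here is a minimal Python sketch. The sub-task names and dependencies for the Q3 supply-chain objective are hypothetical placeholders; a real agent would generate the plan with a model and call ERP tools at each step. The sketch only represents the plan as a dependency graph and derives a valid execution order with the standard library's `graphlib`.

```python
from graphlib import TopologicalSorter

# Hypothetical plan for the objective "optimize the supply chain for Q3".
# Each key is a sub-task; its value is the set of sub-tasks it depends on.
plan = {
    "pull_inventory_levels": set(),
    "pull_demand_forecast": set(),
    "identify_stockout_risks": {"pull_inventory_levels", "pull_demand_forecast"},
    "draft_reorder_proposals": {"identify_stockout_risks"},
    "submit_purchase_orders": {"draft_reorder_proposals"},
}


def execution_order(plan: dict[str, set[str]]) -> list[str]:
    """Return the sub-tasks in an order that respects every dependency."""
    # static_order() raises graphlib.CycleError if the plan contains a cycle.
    return list(TopologicalSorter(plan).static_order())


if __name__ == "__main__":
    for step in execution_order(plan):
        print(step)  # a real agent would invoke the matching ERP tool here
```

Modeling the plan as a graph also shows which steps are independent (here, the two data pulls) and could run in parallel.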

Crucially, this shift is underpinned by interoperability frameworks like the Model Context Protocol (MCP).

MCP has emerged as the “USB-C for AI,” giving agents a standard way to connect to databases, business applications, and other tools without brittle, custom integration code.

This standardization helps tame the integration sprawl that plagued early adoption phases, enabling agents to act as active participants in the enterprise.
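Under the hood, MCP messages are JSON-RPC 2.0. The sketch below builds the request an agent (the MCP client) would send to invoke a tool on an MCP server. It constructs the message with Python's standard library only, rather than using an official SDK, and the tool name `query_inventory` and its arguments are hypothetical.

```python
import json
from itertools import count

# Monotonic request IDs so each response can be matched to its request.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool exposed by an ERP-backed MCP server.
request = make_tool_call("query_inventory", {"sku": "A-1001", "warehouse": "EU-1"})
print(request)
```

Because every server speaks the same message format, an agent can discover a server's tools with a `tools/list` request and call any of them the same way, which is what replaces per-system glue code.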

Levels of Autonomy in Enterprise Systems

To understand the maturity of AI in 2026, organizations are categorizing deployments by levels of autonomy.

We are witnessing a progression from simple assistive automation to fully autonomous orchestration (“Swarm Intelligence”).

The following table outlines the five distinct levels of AI autonomy observed in the 2026 marketplace:

| Level | Designation | Description | Human Role |
| --- | --- | --- | --- |
| Level 1 | Assistive | AI recommends actions or summarizes data. | Primary Actor: The human executes; AI is a tool. |
| Level 2 | Copilot | AI executes specific tasks via explicit prompts. | Supervisor: The human directs the workflow. |
| Level 3 | Semi-Autonomous | AI plans multi-step workflows; halts for exceptions. | Monitor: Human manages by exception only. |
| Level 4 | Fully Autonomous | AI sets goals and operates with minimal oversight. | Auditor: Human governs performance post-facto. |
| Level 5 | Swarm Intelligence | Specialized agents collaborate to solve complex problems. | Architect: Human designs the ecosystem. |
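One way to make these levels operational is to encode them in the policy layer that decides when an agent may act without sign-off. The following Python sketch is a hypothetical policy, not a standard: the enum names and the approval rule are assumptions read off the Human Role column.

```python
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """Hypothetical encoding of the five autonomy levels."""
    ASSISTIVE = 1
    COPILOT = 2
    SEMI_AUTONOMOUS = 3
    FULLY_AUTONOMOUS = 4
    SWARM = 5


def needs_human_approval(level: AutonomyLevel, is_exception: bool) -> bool:
    """Decide whether an action must wait for a human before it executes.

    - Levels 1-2: the human executes or directs, so every action needs approval.
    - Level 3: the agent proceeds on its own but halts for exceptions.
    - Levels 4-5: the agent acts; humans review outcomes after the fact.
    """
    if level <= AutonomyLevel.COPILOT:
        return True
    if level == AutonomyLevel.SEMI_AUTONOMOUS:
        return is_exception
    return False


print(needs_human_approval(AutonomyLevel.SEMI_AUTONOMOUS, is_exception=True))
```

Using an ordered `IntEnum` lets the policy compare levels directly, so raising a deployment from Level 2 to Level 3 is a one-line configuration change rather than new logic.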

The Reality Check: Orchestration Over Automation

Despite the enthusiasm, the transition to Agentic AI faces significant operational hurdles.

Analysts predict that nearly 40% of agentic initiatives may fail by 2027 due to flawed process design.

The primary failure mode is the tendency to automate broken or chaotic processes.

Success in 2026 demands a philosophy of “redesign, don’t automate.”

Leaders must reimagine end-to-end workflows for a silicon-based workforce rather than bolting AI onto human-centric procedures.

This involves moving away from monolithic models toward Multi-Agent Systems (MAS).

In a MAS architecture, an “orchestrator” agent delegates tasks to specialized sub-agents (e.g., a Legal Agent, a Logistics Agent).

This modular approach enhances reliability: if one agent fails, the orchestrator can retry, reroute, or escalate that task instead of collapsing the entire workflow.
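A minimal Python sketch of the orchestrator pattern follows. The agents are stand-in functions with hypothetical names, and “self-correction” is modeled simply as falling back to an alternate agent and, failing that, escalating the sub-task to a human, so one failure does not stop the remaining work. Real multi-agent frameworks add planning, retries with backoff, and shared state.

```python
from typing import Callable

# A sub-agent takes a task description and returns a result, or raises on failure.
Agent = Callable[[str], str]


def legal_agent(task: str) -> str:
    return f"legal review done: {task}"


def logistics_agent(task: str) -> str:
    raise TimeoutError("carrier API unavailable")  # simulate a failing sub-agent


def backup_logistics_agent(task: str) -> str:
    return f"logistics plan (backup) for: {task}"


# Hypothetical registry: each specialty lists its agents in order of preference.
REGISTRY: dict[str, list[Agent]] = {
    "legal": [legal_agent],
    "logistics": [logistics_agent, backup_logistics_agent],
}


def orchestrate(subtasks: list[tuple[str, str]]) -> dict[str, str]:
    """Delegate each (specialty, task) pair, falling back to the next agent on failure."""
    results: dict[str, str] = {}
    for specialty, task in subtasks:
        errors = []
        for agent in REGISTRY[specialty]:
            try:
                results[task] = agent(task)
                break  # first successful agent wins
            except Exception as exc:  # isolate the failure to this sub-task
                errors.append(f"{agent.__name__}: {exc}")
        else:
            # No agent succeeded: escalate this sub-task instead of aborting the rest.
            results[task] = "ESCALATED to human: " + "; ".join(errors)
    return results


print(orchestrate([("legal", "check supplier contract"), ("logistics", "reroute shipment 42")]))
```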

The Infrastructure Reckoning: The Rise of SLMs and Edge Computing

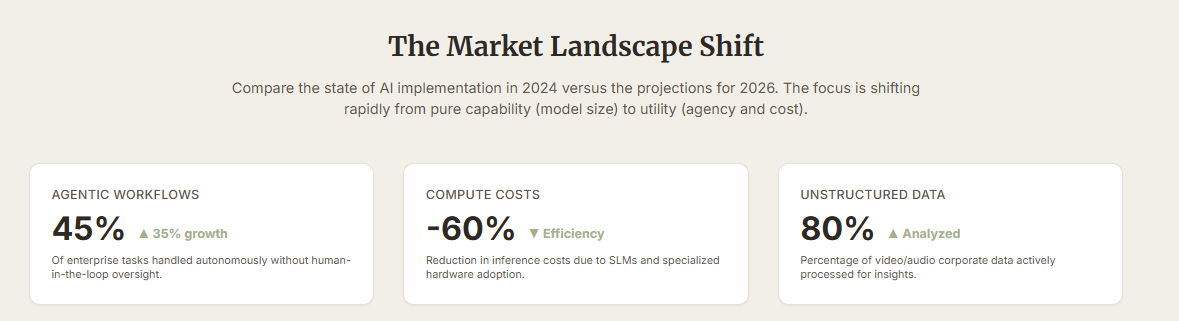

As AI integrates deeper into the enterprise, organizations are confronting an “infrastructure reckoning.”

The “one-model-rules-all” approach, relying on massive general-purpose LLMs for every task, has proven economically unsustainable at scale.

The sheer cost and energy consumption of these models have driven a strategic bifurcation in the landscape.

Enterprises are now prioritizing “inference economics,” where cost-to-serve is a primary constraint.

The Economic Case for Small Language Models (SLMs)

Small Language Models, typically with fewer than 10 billion parameters, offer a pragmatic solution.

These models require a fraction of the memory and power of their massive counterparts.
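The memory gap follows from simple arithmetic: weight memory is roughly the parameter count times the bytes stored per parameter. The Python sketch below uses illustrative model sizes and precisions (assumptions, not specific products) and counts weights only.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed just to hold model weights, in gigabytes (10^9 bytes).

    Ignores the KV cache, activations, and runtime overhead, which add more on top.
    """
    bytes_per_param = bits_per_param / 8
    return num_params * bytes_per_param / 1e9


# Illustrative sizes and precisions.
for label, params, bits in [
    ("7B SLM, 16-bit", 7e9, 16),
    ("7B SLM, 4-bit quantized", 7e9, 4),
    ("70B LLM, 16-bit", 70e9, 16),
]:
    print(f"{label}: ~{weight_memory_gb(params, bits):.1f} GB")
```

At 4-bit precision a 7B-parameter model needs about 3.5 GB for its weights, within reach of a well-equipped laptop, while a 70B model at 16-bit needs around 140 GB, more than a single 80 GB data-center GPU can hold.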

Using a GPT-4 class model for simple tasks like email summarization is akin to “buying a Ferrari to run daily errands.”

In 2026, the strategic correction involves routing 70% of high-volume workloads to SLMs.

Only the remaining 30% of tasks, which require deep reasoning, are sent to expensive, large models.
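A router can be as simple as a lookup on task type, and the savings follow from a weighted average of per-token prices. In this Python sketch the task categories and prices are hypothetical placeholders; production routers often use a small classifier or a confidence score rather than a fixed list.

```python
# Hypothetical prices in dollars per million tokens; substitute your provider's rates.
SLM_PRICE = 0.10
LLM_PRICE = 10.00

# Hypothetical task types treated as routine, high-volume work.
ROUTINE_TASKS = {"summarize_email", "classify_ticket", "extract_fields"}


def route(task_type: str) -> str:
    """Send routine task types to the SLM and everything else to the large model."""
    return "slm" if task_type in ROUTINE_TASKS else "llm"


def blended_price(slm_share: float) -> float:
    """Average price per million tokens when `slm_share` of traffic goes to the SLM."""
    return slm_share * SLM_PRICE + (1 - slm_share) * LLM_PRICE


all_llm = blended_price(0.0)  # every request hits the large model
hybrid = blended_price(0.7)   # the 70/30 split
print(f"all-LLM: ${all_llm:.2f}  hybrid: ${hybrid:.2f}  savings: {1 - hybrid / all_llm:.0%}")
```

Under these assumed prices the 70/30 split cuts the blended price from $10.00 to about $3.07 per million tokens. The large-model share dominates the total, so the savings depend mostly on how much traffic stays off the large model.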

Furthermore, Domain-Specific Language Models (DSLMs) can outperform generalist models in accuracy within their domains.

Trained on curated industry data, DSLMs in finance or healthcare can reduce hallucinations and improve compliance.

The Edge Computing Revolution

The proliferation of SLMs is closely linked to the “AI PC” and hardware with neural processing units (NPUs).

With devices capable of more than 40 trillion operations per second (TOPS), SLMs can now run locally.
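A back-of-the-envelope calculation shows why 40 TOPS is enough for small models. A common approximation is that a dense transformer performs about 2 operations per parameter for each generated token, so the compute ceiling is TOPS divided by twice the parameter count. The Python sketch below applies that rule to an assumed 3B-parameter model. It is an upper bound only: NPU TOPS figures are typically quoted for low-precision arithmetic such as INT8, and real on-device generation is usually limited by memory bandwidth.

```python
def max_tokens_per_second(tops: float, num_params: float) -> float:
    """Compute-bound ceiling on generation speed for a dense transformer.

    Uses the rule of thumb of ~2 operations (one multiply, one add) per
    parameter per generated token. Real throughput is lower.
    """
    ops_per_second = tops * 1e12
    ops_per_token = 2 * num_params
    return ops_per_second / ops_per_token


# Assumed 3B-parameter SLM on a 40 TOPS NPU.
print(f"~{max_tokens_per_second(40, 3e9):,.0f} tokens/s upper bound")
```

The gap between this ceiling and observed speeds is why memory bandwidth, not raw TOPS, often decides which models run well on a given device.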

This shift toward “On-Device AI” offers three critical strategic advantages:

- Privacy and Sovereignty: Sensitive data can stay on the device, reducing GDPR exposure and leakage risk.
- Latency Reduction: Local processing removes the network round trip, which is essential for real-time robotics and industrial applications.
- Cost Efficiency: Offloading inference to user devices significantly reduces centralized cloud compute bills.

The prevailing architecture is now “Strategic Hybrid,” balancing cloud scalability with edge efficiency.
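In practice, a “Strategic Hybrid” setup needs an explicit placement rule. The following Python sketch encodes one hypothetical privacy-first policy built from the three advantages of on-device AI; the field names and the 150 ms round-trip figure are assumptions, and a real policy might instead anonymize data before sending hard cases to the cloud.

```python
from dataclasses import dataclass


@dataclass
class Request:
    contains_personal_data: bool
    latency_budget_ms: int
    needs_deep_reasoning: bool


# Hypothetical typical cloud round trip; measure this for your own network.
CLOUD_ROUND_TRIP_MS = 150


def place(req: Request) -> str:
    """Return "edge" or "cloud" under a simple privacy-first hybrid policy."""
    if req.contains_personal_data:
        return "edge"   # keep regulated data on the device
    if req.latency_budget_ms < CLOUD_ROUND_TRIP_MS:
        return "edge"   # a cloud round trip alone would exceed the budget
    if req.needs_deep_reasoning:
        return "cloud"  # large models live in the data center
    return "edge"       # default routine work to the cheaper local model


print(place(Request(contains_personal_data=False, latency_budget_ms=2000, needs_deep_reasoning=True)))
```

Checking privacy first makes the data-protection constraint non-negotiable; cost and capability only decide placement among requests that are allowed to leave the device.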

The Regulatory Fortress: Surviving the EU AI Act and TRiSM

If 2024 was the year of experimentation, 2026 is unequivocally the year of compliance.

The regulatory landscape has hardened, transitioning from voluntary guidelines to enforceable laws.

The focal point is the European Union’s AI Act, which reaches a critical implementation milestone in August 2026.

The regulation is effectively setting a global standard, and at this milestone the obligations for high-risk systems become fully enforceable.

The 2026 Implementation Cliff

For “high-risk” systems, such as those used in HR, credit scoring, or critical infrastructure, compliance is mandatory.

Penalties can reach up to 7% of total worldwide annual turnover for prohibited AI practices, while breaches of the high-risk obligations can draw fines of up to 3%.
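The fine ceilings in Article 99 of the AI Act combine a fixed amount with a share of worldwide annual turnover: whichever is higher applies, or whichever is lower for SMEs and start-ups. A Python sketch of that arithmetic, using the Act's two main tiers and an illustrative turnover; it computes only the maximum possible fine, since actual fines are set case by case.

```python
# Fine ceilings from Article 99 of the EU AI Act: (fixed amount in EUR, share of turnover).
TIERS = {
    "prohibited_practice": (35_000_000, 0.07),
    "other_obligation": (15_000_000, 0.03),  # includes high-risk system obligations
}


def max_fine_eur(tier: str, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound on the fine: the higher of the two caps, or the lower for SMEs."""
    fixed, share = TIERS[tier]
    pct_cap = share * worldwide_turnover_eur
    return min(fixed, pct_cap) if is_sme else max(fixed, pct_cap)


# Illustrative company with EUR 2 billion in annual turnover.
print(f"EUR {max_fine_eur('prohibited_practice', 2e9):,.0f}")  # 7% of turnover
print(f"EUR {max_fine_eur('other_obligation', 2e9):,.0f}")     # 3% of turnover
```

For large companies the percentage cap dominates, which is why the exposure scales with global revenue rather than with the size of the AI system involved.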

This has transformed governance from a bureaucratic hurdle into a strategic “license to operate.”

Organizations are scrambling to implement rigorous risk management systems and data governance protocols.

AI TRiSM: The Operating System for Trust

To navigate this minefield, enterprises are adopting the AI TRiSM (Trust, Risk, and Security Management) framework.

TRiSM is not a single tool, but a holistic approach to AI reliability and security.

Effective TRiSM implementation covers four key pillars:

- Explainability: The ability to trace why an agent made a decision is increasingly a legal requirement, notably for high-risk systems under the EU AI Act.
- ModelOps: Automating governance checks within the development pipeline (CI/CD for AI).
- App Security: Defending against adversarial attacks like “data poisoning” and “prompt injection.”
- Privacy: Using anonymization technologies to help ensure that data used for inference is compliant.
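For the explainability pillar, a practical starting point is a structured record of what each agent saw, which tools it called, and why it acted. The Python sketch below serializes such records as JSON lines; the field names, agent ID, and model version string are hypothetical, and a production system would also protect the log against tampering and apply retention rules.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One traceable agent decision (field names are hypothetical)."""
    agent_id: str
    model_version: str
    inputs: dict
    tool_calls: list[str]
    decision: str
    rationale: str
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


def to_json_line(record: DecisionRecord) -> str:
    """Serialize one record as a single JSON line (no trailing newline)."""
    return json.dumps(asdict(record), ensure_ascii=False)


def append_record(path: str, record: DecisionRecord) -> None:
    """Append the record to a JSON Lines file so the log is easy to stream and audit."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(to_json_line(record) + "\n")


record = DecisionRecord(
    agent_id="credit-screening-agent",
    model_version="slm-finance-2026.01",
    inputs={"application_id": "APP-123"},
    tool_calls=["fetch_credit_history", "score_application"],
    decision="refer_to_human",
    rationale="Score within 5 points of the approval threshold.",
)
print(to_json_line(record))
```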

Gartner predicts that TRiSM controls can eliminate 80% of faulty information in decision-making processes.

Physical AI and the Sustainability Mandate

In 2026, AI is breaking out of the screen and entering the physical world.

This trend, termed “Embodied AI,” integrates intelligence into robots, drones, and the Industrial Metaverse.

Digital Twins have matured from static models into dynamic simulations that mirror operations in real time.

For instance, logistics networks now use “swarm intelligence” to re-optimize delivery fleets on the fly during traffic disruptions.

The Green AI Paradox

However, the massive energy demands of these systems have created a sustainability paradox.

AI is both a significant consumer of energy and a critical tool for reducing emissions.

Data centers are under immense pressure to adopt “Green-in AI” strategies.

This includes improving algorithmic efficiency to cut compute requirements and shifting workloads to times and regions where renewable energy is plentiful.
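Shifting workloads toward cleaner energy is often done with carbon-aware scheduling: deferrable jobs such as batch inference or training runs start when the grid's forecast carbon intensity is lowest. The Python sketch below picks that window from a forecast; the forecast values are made up, and a real scheduler would pull them from a grid-data provider and also weigh deadlines and price.

```python
def greenest_window(intensity: list[float], job_hours: int) -> int:
    """Return the start hour whose window of `job_hours` has the lowest total intensity.

    Assumes the job draws constant power, so its emissions scale with the
    sum of the hourly carbon intensities it runs through.
    """
    if not 0 < job_hours <= len(intensity):
        raise ValueError("job must fit inside the forecast horizon")
    starts = range(len(intensity) - job_hours + 1)
    return min(starts, key=lambda s: sum(intensity[s:s + job_hours]))


# Hypothetical forecast for the next 12 hours, in gCO2e per kWh.
forecast = [420, 410, 380, 300, 210, 160, 150, 170, 240, 330, 400, 430]
start = greenest_window(forecast, job_hours=3)
print(f"Schedule the batch job to start at hour {start}")
```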

Conversely, “Green-by AI” sees AI acting as an enabler of ESG goals.

AI agents are now managing renewable energy grids, balancing supply and demand in milliseconds.

In manufacturing, AI identifies high-emission routes in supply chains, facilitating Scope 3 emissions reporting.
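Transport-related Scope 3 figures are often estimated with a distance-based method: shipment mass times distance times an emission factor for the transport mode. This Python sketch ranks hypothetical routes that way; the factors, route names, and quantities are placeholders, and real reporting would use a recognized emission-factor dataset.

```python
# Hypothetical emission factors in kg CO2e per tonne-kilometre.
EMISSION_FACTORS = {"air": 0.60, "road": 0.10, "rail": 0.03, "sea": 0.015}


def shipment_emissions_kg(tonnes: float, km: float, mode: str) -> float:
    """Distance-based estimate: mass x distance x mode-specific factor."""
    return tonnes * km * EMISSION_FACTORS[mode]


# Hypothetical inbound routes for one component: (name, tonnes, km, mode).
routes = [
    ("Supplier A, air", 2.0, 8000, "air"),
    ("Supplier B, sea", 2.0, 9500, "sea"),
    ("Supplier C, road", 2.0, 1200, "road"),
]

# Highest-emission routes first, so they can be flagged for review.
ranked = sorted(routes, key=lambda r: shipment_emissions_kg(*r[1:]), reverse=True)
for name, tonnes, km, mode in ranked:
    print(f"{name}: {shipment_emissions_kg(tonnes, km, mode):,.0f} kg CO2e")
```

Even with placeholder factors, the ranking shows why transport mode often matters more than distance: the longest route here is far from the highest-emitting one.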

Conclusion: A Strategic Roadmap for the Enterprise

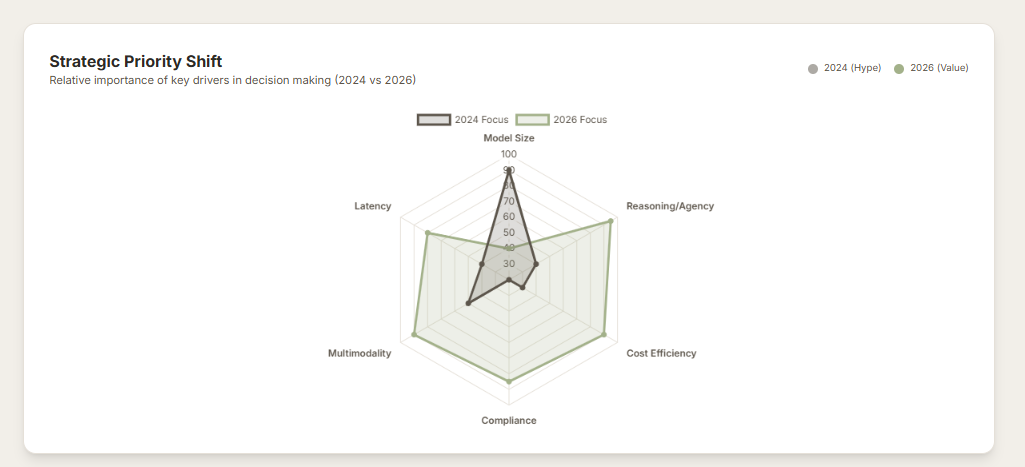

Differentiation in 2026 is no longer defined by access to the most powerful model.

Success is defined by the architecture used to deploy AI safely, efficiently, and at scale.

Leaders must transition from experimentation to engineering discipline.

Strategic Checklist for 2026:

- Orchestrate Workflows: Redesign processes for human-agent collaboration; do not just automate existing chaos.
- Right-Size Models: Migrate routine tasks to SLMs and edge devices to control costs.
- Operationalize Governance: Implement AI TRiSM now to prepare for EU AI Act enforcement.
- Invest in Data: Ensure data is “agent-ready” via semantic layers and standardized protocols like MCP.
- Prioritize Sustainability: Align AI infrastructure with ESG goals through efficient hardware and workload shifting.

The winners of this era will be those who can tame the complexity of agents and govern the risks of autonomy.